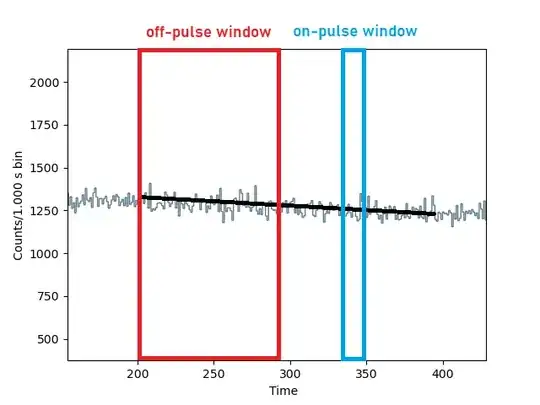

Consider the following problem. We have a time series of Poisson-distributed count data. In this time series we can select an off-pulse window in which only background is present, and a subsequent on-pulse window in which a source signal may or may not be present.

I want to estimate a mean value and an error for the background counts in the on-pulse window, $b \pm \sigma$, given the off-pulse observations and the assumption that the Poisson rate can change linearly in time, $\sim mt + q$.
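To make the target quantity explicit: if the on-pulse window is written as $[t_1, t_2]$ (my own notation, not the paper's) and the background rate is taken to be exactly $mt + q$, the expected background in that window would be

$$
b = \int_{t_1}^{t_2} (mt + q)\,dt = \frac{m}{2}\left(t_2^2 - t_1^2\right) + q\,(t_2 - t_1),
$$

so $b$ is a linear combination of $m$ and $q$, and its error depends on their variances and on their covariance.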

The actual situation is depicted in the picture above. A paper I'm studying claims that $b$ should be normally distributed to a good approximation, even when $b$ is small.

This looks like a fairly straightforward problem, but I don't know how to approach it. If the data were Gaussian distributed and error bars were given, a linear regression would do the trick. In particular, I would estimate $m \pm \sigma_m$ and $q \pm \sigma_q$ and propagate their errors.
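For concreteness, here is a minimal sketch of that Gaussian version, assuming binned data with known error bars. The function name `predict_background` and its arguments are hypothetical and chosen only for illustration. It fits a straight line to the off-pulse bins with `numpy.polyfit` and propagates the full covariance of $(m, q)$, not just $\sigma_m$ and $\sigma_q$, to the summed prediction over the on-pulse bins. This is not the Poisson treatment I am asking about.

```python
import numpy as np


def predict_background(t_off, y_off, sigma_off, t_on):
    """Weighted straight-line fit to off-pulse bins, extrapolated to on-pulse bins.

    Assumes Gaussian errors sigma_off on the off-pulse values y_off.
    Returns the predicted total background over the on-pulse bins and its error.
    """
    t_off = np.asarray(t_off, dtype=float)
    y_off = np.asarray(y_off, dtype=float)
    sigma_off = np.asarray(sigma_off, dtype=float)
    t_on = np.asarray(t_on, dtype=float)

    # polyfit expects weights 1/sigma; cov="unscaled" keeps the covariance
    # tied to the supplied error bars instead of rescaling by the fit residuals.
    (m, q), cov = np.polyfit(t_off, y_off, 1, w=1.0 / sigma_off, cov="unscaled")

    # b = sum_i (m * t_i + q) is linear in (m, q), so its gradient is exact.
    grad = np.array([t_on.sum(), float(t_on.size)])
    b = grad @ np.array([m, q])

    # Error propagation including the m-q covariance term.
    sigma_b = np.sqrt(grad @ cov @ grad)
    return b, sigma_b
```

With few counts per bin the Gaussian error bars are not meaningful, which is exactly where this approach stops being trustworthy.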

Where should I look?

One remark: I'm particularly interested in the case in which the on-pulse window is short (few counts).