This is something I dealt with in my MSc Economics many years ago and passed the exams on with flying colours, yet when I thought about it in more depth today, I was somewhat puzzled. This could be because it has been a couple of years since I last covered the topic, or because I only learnt the theory and never worked through a practical example. Regardless, an intuitive explanation, strengthened with a mathematical proof, would be very much appreciated.

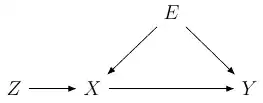

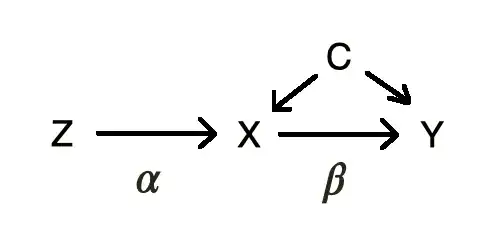

The general idea is that for $y=\beta'x+\varepsilon$, the exogeneity assumption must hold, i.e. $\mathbb{E}\{x(y-\beta'x)\}=0$. When this assumption is violated, i.e. $\mathbb{E}\{x(y-\beta'x)\}\neq0$, we find an instrument $z$ such that $\mathbb{E}\{z(y-\beta'x)\}=0$, while $\mathbb{E}\{xz\}\neq 0$. In other words, $x$ and $z$ are correlated, but while $x$ is correlated with $\varepsilon$, $z$ is not! I cannot see how this could be the case. Or does the assumption have more to do with "perfect" collinearity? As in, $z$ would still be correlated with $\varepsilon$, but not to the same extent that $x$ is? Thank you!
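To make the setup concrete, here is a minimal simulation sketch of the moment conditions above for a scalar $x$. The data-generating process is purely my own assumption for illustration: a hypothetical unobserved component `u` enters both $x$ and $\varepsilon$, while $z$ enters $x$ only. The function name `simulate_iv` and all parameter values are made up and not taken from any textbook.

```python
import numpy as np


def simulate_iv(n=200_000, beta=2.0, seed=0):
    """Simulate y = beta*x + eps with an endogenous x and an instrument z.

    Assumed (hypothetical) data-generating process:
        x   = z + u + v      (x depends on the instrument and on u)
        eps = u + w          (the error shares the component u with x)
    with z, u, v, w independent standard normals.
    """
    rng = np.random.default_rng(seed)
    z = rng.normal(size=n)  # instrument: drawn independently of u and w
    u = rng.normal(size=n)  # unobserved component shared by x and eps
    v = rng.normal(size=n)  # idiosyncratic part of x
    w = rng.normal(size=n)  # idiosyncratic part of eps

    x = z + u + v
    eps = u + w
    y = beta * x + eps

    return {
        # Sample analogues of the three moments in the question.
        "E[x*eps]": np.mean(x * eps),  # population value 1 (exogeneity fails)
        "E[z*eps]": np.mean(z * eps),  # population value 0 (instrument valid)
        "E[x*z]": np.mean(x * z),      # population value 1 (instrument relevant)
        # Scalar, no-intercept estimators (all variables have mean zero here).
        "beta_ols": (x @ y) / (x @ x),  # converges to beta + 1/3 under this DGP
        "beta_iv": (z @ y) / (z @ x),   # converges to beta under this DGP
    }


if __name__ == "__main__":
    for name, value in simulate_iv().items():
        print(f"{name:>10s}: {value: .4f}")
```

In this construction $x$ is the sum of several independent pieces, and $z$ is correlated with $x$ only through the piece that $\varepsilon$ does not contain, so $\mathbb{E}\{xz\}\neq 0$ and $\mathbb{E}\{z\varepsilon\}=0$ hold at the same time. Whether a real-world instrument behaves like this is of course an assumption that the simulation cannot verify.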