I have a large sample (a vector) $\mathbf{x}$ from a random variable $X\sim N(\mu,\sigma^2)$. The variance $\sigma^2$ is known, but the expectation $\mu$ is unknown. I would like to test the null hypothesis $H_0\colon \ \mu=\mu_0$ against the alternative $H_1\colon \ \mu=\mu_1$ using a likelihood ratio (LR) test. The test statistic is $$ \text{LR}(\mu_0,\mu_1)=-2\ln\frac{L(\mathbf{x}\mid\mu_0,\sigma^2)}{\max\{\ L(\mathbf{x}\mid\mu_0,\sigma^2), \ L(\mathbf{x}\mid\mu_1,\sigma^2)\ \}}. $$ Question: What is its asymptotic distribution under the null?

-

Related question: ["Failing to obtain $\chi^2(1)$ asymptotic distribution under $H_0$ in a likelihood ratio test"](https://stats.stackexchange.com/questions/506763). – Richard Hardy Jan 27 '21 at 14:36

-

Related question: ["Testing a nonstandard hypothesis: constructing test statistic, finding rejection region and obtaining p-value"](https://stats.stackexchange.com/questions/506560). – Richard Hardy Jan 27 '21 at 14:37

-

1Related question: ["What are the regularity conditions for Likelihood Ratio test"](https://stats.stackexchange.com/questions/101520). – Richard Hardy Jan 27 '21 at 14:37

2 Answers

As you increase the sample size under the null, you'll simply keep pushing all the probability mass on to a log likelihood ratio of zero as the probability of $L(\mathbf{x}\mid\mu_1,\sigma^2)$ relative to $L(\mathbf{x}\mid\mu_0,\sigma^2)$ continues to fall. So for a given sample size $n$, tests of size greater than $\Pr_n\left(\bar X=\frac{1}{2}\right)=1 - \Phi\left(\frac{\sqrt n}{2}\right)$ won't exist, & the p-value distribution will be non-uniform.

The usual LR statistic for point hypotheses would be

$$ \text{LR}(\mu_0,\mu_1)=-2\ln\frac{L(\mathbf{x}\mid\mu_0,\sigma^2)}{L(\mathbf{x}\mid\mu_1,\sigma^2)}. $$

allowing the construction of tests of any size, & providing a uniformly distributed p-value. It doesn't have an asymptotic distribution under the null, becoming stochastically smaller as sample size increases; which is to be expected—as Cox & Hinkley (1979) put it, this reflects the fact that "for separate hypotheses consistent discrimination is possible". You don't need asymptotics here anyway: you can always calculate or simulate the exact distribution of a test statistic under a point null for any sample size—& of course in this case it's a monotonic function of the sample mean, which has a familiar distribution.

Cox & Hinkley (1979), Theoretical Statistics, Ch. 9, "Asymptotic Theory"

- 27,560

- 8

- 81

- 248

-

1Thank you for your answer! Could you explain what you mean by *the probability of $L(\mathbf{x}\mid\mu_1,\sigma^2)$ relative to $L(\mathbf{x}\mid\mu_1,\sigma^2)$*? Could you also explain why the LR statistic is not the one I wrote? I based mine on some general textbook material on LR testing. It has a familiar expression of a constrained maximum in the numerator and an unconstrained maximum in the denominator (and reminds e.g. of an $F$-test that I know from regression context). – Richard Hardy Mar 12 '21 at 14:18

-

1Also, why is there a problem with the asymptotic distribution in this case? When talking about asymptotic distributions, we typically scale the statistic by $\sqrt{n}$ so that it converges to a random variable rather than a constant. Is this case fundamentally different? – Richard Hardy Mar 12 '21 at 14:22

-

(1) Sorry - fixed a typo. (2) The constrained over unconstrained likelihood formulation of the LR test arises from its equivalence to the null over alternative likelihood formulation when the null is of a lower dimension than the alternative - a point on a line, a line in a plane, &c. (3) That depends: people do talk loosely about, say, the asymptotic distribution of the sample mean, but I read you literally here - after all, the (unscaled) log likelihood ratio famously does have an asymptotic distribution under some conditions (including in particular that the unconstrained ... – Scortchi - Reinstate Monica Mar 12 '21 at 15:02

-

... maximum-likelihood estimator can get arbitrarily close to the true parameter values, which it can't in this example.) – Scortchi - Reinstate Monica Mar 12 '21 at 15:04

-

Could you perhaps recommend a treatment of LR testing that is relatively nontechnical but has good intuition? – Richard Hardy Mar 12 '21 at 15:42

-

Let us [continue this discussion in chat](https://chat.stackexchange.com/rooms/120800/discussion-between-scortchi-reinstate-monica-and-richard-hardy). – Scortchi - Reinstate Monica Mar 12 '21 at 20:24

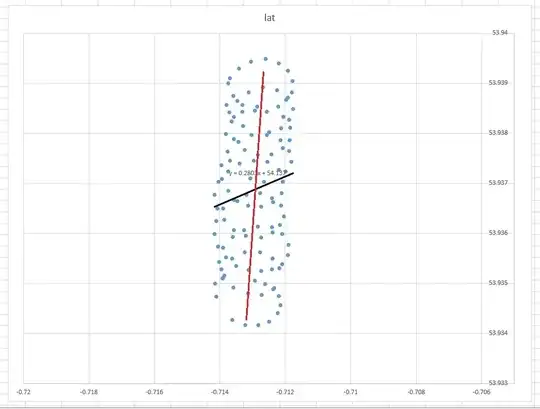

I have simulated it below in R for a single value of $\sigma^2$ but am still lacking insight. For the particular value of $\sigma^2$, the shape reminds me a bit of $\chi^2(1)$ distribution. However, this varies with $\sigma^2$.

n=3e3 # sample size

sigma=10*sqrt(n) # standard deviation of X

m=3e3 # number of simulation runs

logL0s=logL1s=logLRs=rep(NA,m)

for(i in 1:m){ # Runs some

set.seed(i); x=rnorm(n,mean=0,sd=sigma)

logL0=sum(log( dnorm(x,mean=0,sd=sigma) ))

logL1=sum(log( dnorm(x,mean=1,sd=sigma) ))

logLR =-2*(logL0-max(logL0,logL1)) # the -2*ln(LR) statistic from this simulation run

logL0s[i]=logL0; logL1s[i]=logL1; logLRs[i]=logLR

}

# Plots illustrating the sampling distribution of -2*log(LR) statistics for a particular value of sigma

par(mfrow=c(2,2),mar=c(2,2,2,1))

plot(logLRs,main="Scatterplot of -2ln(LR) across simulation runs")

plot(NA)

plot(density(logLRs),main="Density of -2ln(LR)")

chisq.quantiles=qchisq(p=seq(from=0.001,to=0.999,by=0.001),df=1)

chisq.density=dchisq(x=chisq.quantiles,df=1)

lines(y=chisq.density,x=chisq.quantiles,col="blue",lty="dashed") # Chi^2(1) overlay

br=24

hist(logLRs,breaks=br,main="Histogram of -2ln(LR)")

lines(y=chisq.density*m/br,x=chisq.quantiles,col="blue",lty="dashed") # Chi^2(1) overlay

par(mfrow=c(1,1))

- 54,375

- 10

- 95

- 219

-

You've set the sample size to `m`, not `n`. And I'd say a plot of the empirical distribution function would be more informative than the ones shown: `plot(ecdf(logLRs), verticals = TRUE)`. – Scortchi - Reinstate Monica Mar 11 '21 at 09:07