I am currently studying discriminant analysis. I have encountered the phrases "likelihood-based LDA" (with some prior) and "likelihood-based QDA" (with some prior). I know what LDA (with some prior) and QDA (with some prior) are, and I have implemented them in R, but I don't understand what "likelihood-based LDA" and "likelihood-based QDA" are, how they relate to LDA and QDA (or whether they are the same concept), and how they are implemented in R (compared to the usual LDA and QDA).

When I implemented LDA and QDA (with uniform priors over 3 classes) in R, I did the following:

lda.0 = lda( x ~ a + b + c + d + e + f, data = data, prior = c(1/3,1/3,1/3) )

preds.0 = predict( lda.0 )$class

xtabs( ~ preds.0 + data$x )

qda.0 = qda( x ~ a + b + c + d + e + f, data = data, prior = c(1/3,1/3,1/3) )

preds.0 = predict( qda.0 )$class

xtabs( ~ preds.0 + data$x )

The lda() and qda() functions are from the MASS package https://www.rdocumentation.org/packages/MASS/versions/7.3-53/topics/lda https://www.rdocumentation.org/packages/MASS/versions/7.3-53/topics/qda

What is "likelihood-based LDA" and "likelihood-based QDA", how do they relate to LDA and QDA, and how they are implemented in R (compared to the implementation above)? Links to good resources would also be appreciated.

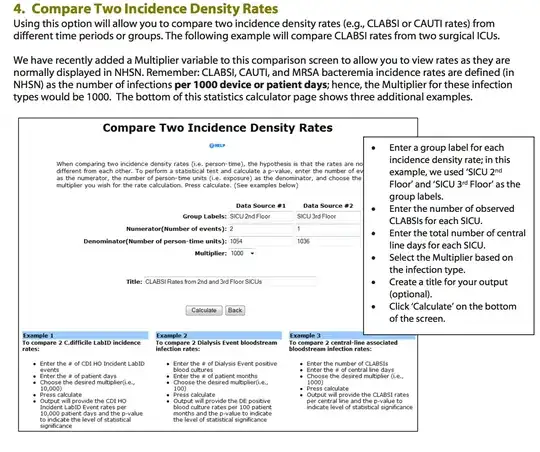

This exercise statement is why I'm asking this question:

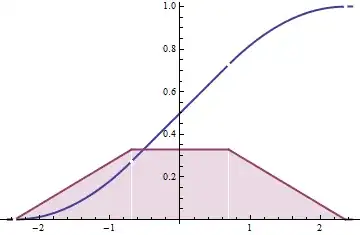

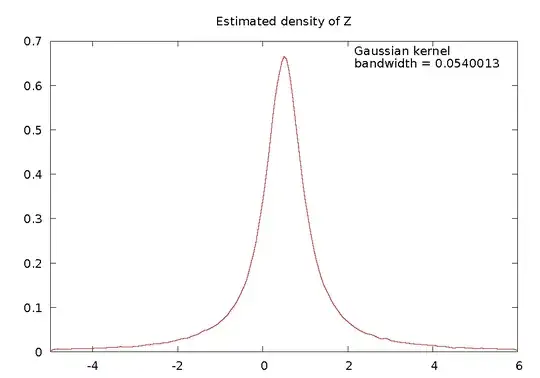

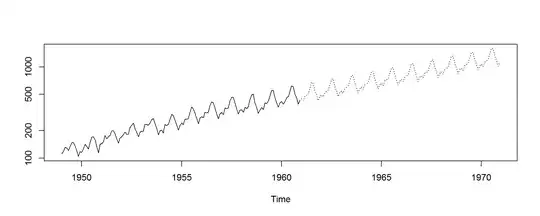

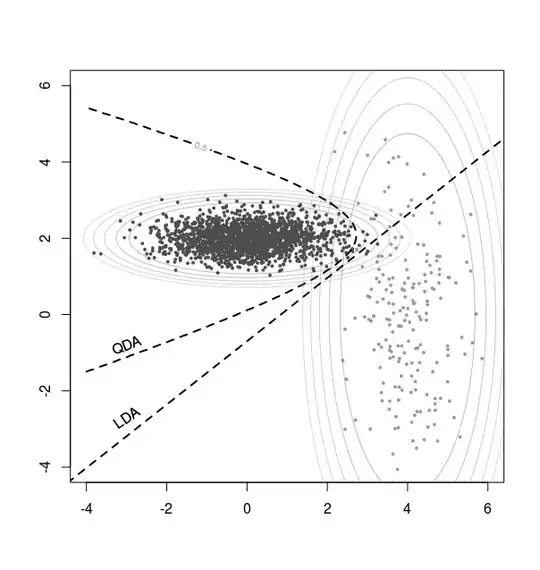

And here is the provided solution (no code provided):

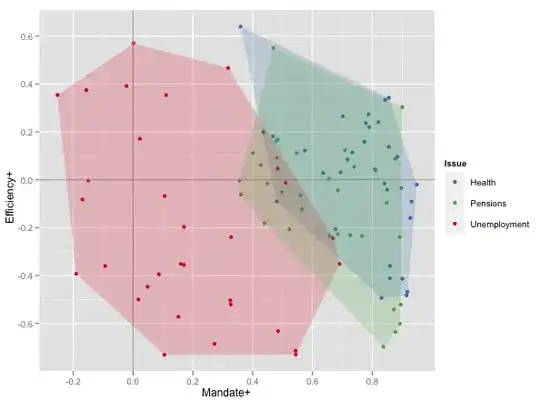

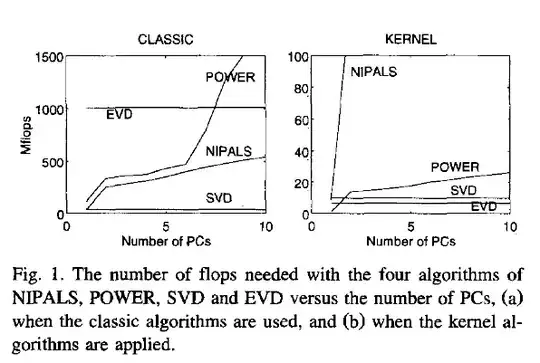

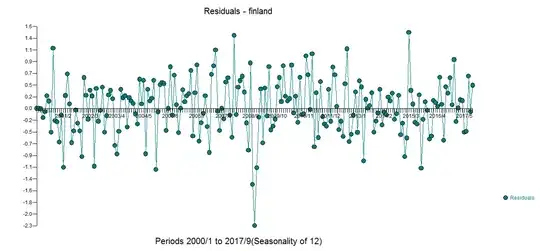

Here are all of the relevant textbook mentions of "likelihood" for this context: