A few suggestions with respect to working with crime data.

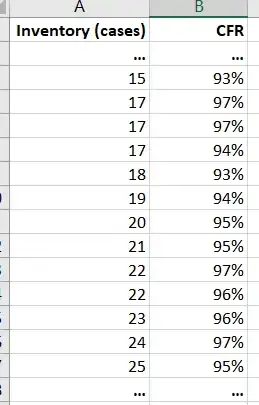

First, your plot is investigating the relationship between unemployment rates and total crime counts across many geographic regions. It is unclear what a "region" represents, but it appears they are rather large aerial units (e.g., counties, states, countries, etc.). Some regions experience nearly 40,000 – 60,000 total occurrences of theft/larceny in a given time period. Large aggregate counts suggests you're sampling crime occurrences by U.S. State in one year. If this is so, I would normalize the data. Expressing your outcome $y$ as a crime rate per 100,000 inhabitants is appropriate. Imagine trying to compare total reports of larceny in the State of New York with those reported in Louisiana. Before looking at the data, you might suspect a greater number of larcenies reported in the State of New York, assuming a large number of larcenies occurred within the five boroughs. Once you normalize by the state population, for example, you should find per capita thefts in Louisiana far exceeding per capita thefts in the State of New York. It's a twofold difference. Though your plot's title indicates you're working with per capita rates, the actual numerical quantities plotted suggest otherwise.

Second, I suspect in your first plot each point represents a region's total number of crime occurrences. Total crime occurrences should equal the sum of all incidents within a particular region within a given period of time. This amounts to one data point per jurisdiction. I only mention this because it appears you plotted three separate points for each jurisdiction—one for each crime type. The disaggregated plots suggest you have approximately 22 – 25 independent pieces of information, not 60+ as your first model suggests.

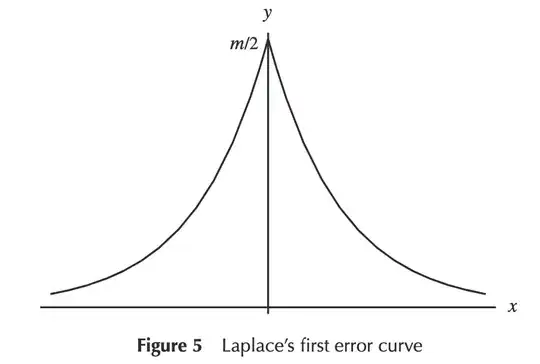

Third, crime counts often exhibit a discernible right skew. For example, theft rates in the District of Columbia will be far out in the right tail of a distribution. Try winnowing your $x$-axis to regions with unemployment rates between 3.75% – 4.25% and note the wide variation within this interval. The variability is particularly pronounced across regions. Differences in "reporting practices" might explain some of this variation across jurisdictions.

As for your interpretation of the first plot, which I assume is your composite crime outcome (i.e., all crimes merged), you are making all the correct observations, but without much substance.

There is no apparent relationship between the two variables.

Correct.

Your bivariate model suggests unemployment does not effect total incidents of crime. However, I wouldn't derive too much causal value from this model. Note that all of your variation is between regions (e.g., states). Try reestimating your base model but now with each region's per capita value. This is very useful when comparing jurisdictions with widely different population sizes.

Your bullet points simply regurgitate your summary output. For example, you note the $F$-statistic is low. This is correct. But what does it mean? For starters, it is a measure of overall significance. It tells you, in simple terms, whether your linear regression model provides a better fit than a model including no independent variables. The $p$-value associated with the test statistic supports a null hypothesis which states that your model which includes the unemployment rate as a predictor does not fit better than one that excludes it (i.e., 'intercept-only' model). I don't want to get too caught up in the weeds here, but the point is, don't simply say a statistic is low without any further explication.

What else can I add to my interpretation? Also, what does it mean if the intercept Pr(>|t|) is < 0.05?

The intercept has little interpretive value. It is the average number of total crime occurrences in a jurisdiction with an unemployment rate at 0%. Is there a region where the percentage of unemployed workers in the total labor force is exactly 0? In sum, the intercept is usually not of substantive interest.

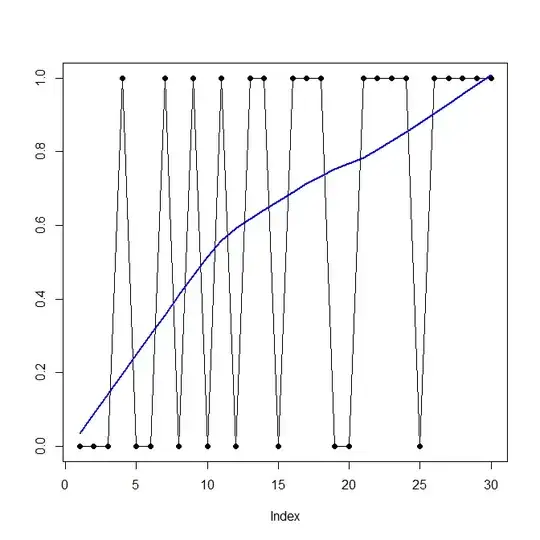

As for your second output, your faceted plot and model summary do not agree. Faceting your data is looking at the relationship between unemployment and crime across different subsets of your data.

Plotting a faceted scatter plot and linear regression for each crime type proved more difficult.

Why did it prove more difficult? It appears you faceted by crime type (i.e., facet_wrap(~ crime)), which produces a bivariate plot of crime ~ unemployment for each crime type. In other words, as you move from left to right, you're running a linear model on different subsets of your data.

Again, the plots do not agree with your output. In fact, your second model is misspecified. You're regressing total crime on the unemployment rate and all sub-categories of crime and expecting software to return a coefficient for each crime type. Unless I am mistaken, the sum of each subtype on the right-hand side is equal to your outcome. Let $Crime^{t}_i$ equal the total number of incidents reported in a particular jurisdiction $i$ in one year; the $t$ superscript denotes the composite sum of all disaggregated crime metrics. Here is your equation expressed mathematically:

$$

Crime^{t}_i = \alpha + \gamma UR_i + Sub^1_i + Sub^2_i + Sub^3_i + \epsilon_i

$$

where $UR_i$ is the unemployment rate in region $i$; it is the primary independent variable of interest. Here, each sub-category of crime, which comprises total crime, is expressed on the right-hand side of the equation. By definition, $Crime^{t} = Sub^1 + Sub^2 + Sub^3$ for any $i$. If you know all sub-categories of total crime then you can perfectly predict total crime. What do you hope to gain from this model? Your crime metrics are your outcomes of interest.

Where is Anti-Social behaviour? It is in the data frame df I specified in the code but not in the output.

Your model must drop one of your disaggregated crime metrics. R orders the factor levels in abecedarian sequence, unless you tell it otherwise, and so it drops the first category which starts with the letter A.

However, why is t value negative? That should suggest no relationship, but it clashes with Pr(>|t|)

The $p$-value is a probability value and by the axioms of probability it is bounded between 0 and 1. The $t$-value, on the other hand, is unbounded. They're different but also inextricably linked. Their signs do not have to agree.

The p value is < 0.05, which suggests significancy, but which crime type does it suggest significancy with? Is it theft?

As a recommendation, I would estimate separate linear models with each crime outcome on the left-hand side. That amounts to four separate calls in R using your four different outcomes: (1) total crime rate, (2) anti-social behavior offense rate, (3) larceny rate, and (4) violence and sexual assault offense rate. Again the total crime rate is the sum of all your disaggregated crime metrics; it is a composite measure of all crime in a particular region. I think you may have estimated your linear model on a data frame where each outcome for each region is stacked. This is understandable in settings where you transform your data into long format to facilitate faceting. If you want separate summary output for each outcome then before running lm() you must create separate columns for each outcome. Once it is in this format, then you can feed each crime outcome to the left-hand side of a standard linear model (i.e., lm(crime ~ unemployment)).

As a final word, anti-social behavior is very broad and is likely to vary considerably by jurisdiction. Take good measure to note any obvious differences in reporting practices across agencies. Also, why combine "violent acts" and "sexual offenses"? Though the nexus between violence and sexual predation is obvious, the term violence is very broad, encompassing crimes such as robbery and other acts of felonious assault. In some cases, multiple offenses may be associated with one incident. For example, possessions forcibly removed from a person is, in some circumstances, a violent theft (e.g., assault + theft). Will you disambiguate between multiple offenses in your crime rate calculations or should only one violent offense take precedence? Some jurisdictions may only report the top charge, which invariably gives more weight to "serious" (i.e., violent) offenses. I only note such concerns because they often arise when working with crime data.

I hope this information helps!