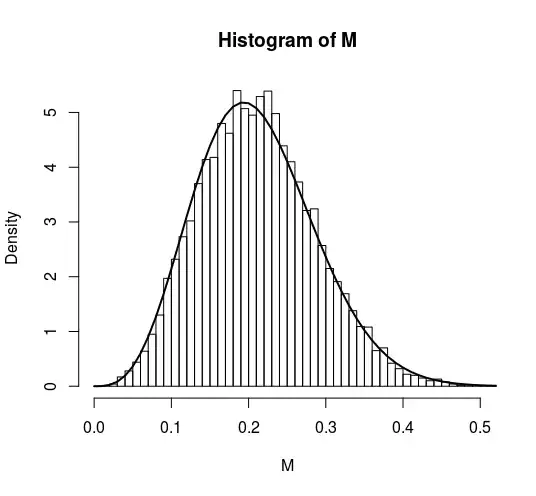

Let $U_1,\dots,U_n$ be i.i.d. random vectors, uniformly distributed on the Euclidean unit sphere of $\mathbb{R}^d$, which we denote $S^{d-1}$. I am looking for the law of $$M=\left\|\frac{1}{n}\sum_{i=1}^n U_i \right\|.$$
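Before looking for bounds, it can help to look at the law of $M$ by simulation. Below is a minimal Monte Carlo sketch in Python (standard library only), assuming the standard fact that a standard Gaussian vector divided by its norm is uniform on $S^{d-1}$. The function names are hypothetical helpers, not from any library.

```python
import math
import random


def sample_uniform_sphere(d, rng):
    """Draw one point uniformly on S^{d-1} by normalising a standard Gaussian vector."""
    while True:
        g = [rng.gauss(0.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in g))
        if norm > 0.0:  # the zero vector has probability zero, but guard anyway
            return [x / norm for x in g]


def sample_M(n, d, rng):
    """One draw of M = || (1/n) * sum_{i=1}^n U_i ||."""
    total = [0.0] * d
    for _ in range(n):
        u = sample_uniform_sphere(d, rng)
        for k in range(d):
            total[k] += u[k]
    return math.sqrt(sum(t * t for t in total)) / n


def simulate_M(n, d, num_samples, seed=0):
    """Monte Carlo sample of size num_samples from the law of M."""
    rng = random.Random(seed)
    return [sample_M(n, d, rng) for _ in range(num_samples)]


if __name__ == "__main__":
    n, d = 20, 3
    ms = simulate_M(n, d, num_samples=5000)
    mean_sq = sum(m * m for m in ms) / len(ms)
    print(f"empirical E[M^2] = {mean_sq:.4f}, 1/n = {1 / n:.4f}")
```

The printed empirical mean of $M^2$ should be close to $1/n$, because the cross terms $\langle U_i, U_j\rangle$ with $i \neq j$ have mean zero.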

Attempts:

I don't know whether the law can be determined exactly. What I need is a lower bound on $M$: I want to be able to say that, with some (small) probability, $M$ is greater than $\sqrt{\lambda/n}$ for some $\lambda\le n$. I tried deviation bounds for U-statistics, but the bound I obtained is not sufficient, since with U-statistics I am limited to $\lambda \le 1$.
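To see what the tail $\mathbb{P}(M>\sqrt{\lambda/n})$ looks like beyond $\lambda\le 1$, one can estimate it by simulation. The sketch below (Python, standard library only; all names are hypothetical) does this for several $\lambda$ and, for $d=3$ only, prints a heuristic large-$n$ comparison. The comparison rests on the CLT: since $\mathbb{E}[U_iU_i^\top]=I_d/d$, $nM^2$ converges in law to $\chi^2_d/d$, so $\mathbb{P}(M>\sqrt{\lambda/n})\approx\mathbb{P}(\chi^2_d>d\lambda)$ for fixed $\lambda$ and large $n$. This approximation is not a bound and says nothing about $\lambda$ of order $n$.

```python
import math
import random


def sample_M(n, d, rng):
    """One draw of M = ||(1/n) sum U_i||, with U_i = G_i / ||G_i||, G_i standard Gaussian in R^d."""
    total = [0.0] * d
    for _ in range(n):
        g = [rng.gauss(0.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in g))  # zero only with probability zero
        for k in range(d):
            total[k] += g[k] / norm
    return math.sqrt(sum(t * t for t in total)) / n


def tail_estimate(n, d, lambdas, num_samples=10000, seed=0):
    """Empirical P(M > sqrt(lambda / n)) for each lambda, from one shared sample of M."""
    rng = random.Random(seed)
    # M > sqrt(lambda / n)  <=>  n * M^2 > lambda, so store n * M^2 once.
    scaled = [n * sample_M(n, d, rng) ** 2 for _ in range(num_samples)]
    return {lam: sum(s > lam for s in scaled) / num_samples for lam in lambdas}


def chi2_3_tail(t):
    """P(chi^2_3 > t); this closed form is valid for 3 degrees of freedom only."""
    return math.erfc(math.sqrt(t / 2)) + math.sqrt(2 * t / math.pi) * math.exp(-t / 2)


if __name__ == "__main__":
    n, d = 30, 3
    lambdas = [0.5, 1.0, 2.0, 4.0]
    est = tail_estimate(n, d, lambdas)
    for lam in lambdas:
        # Heuristic large-n comparison only (CLT), not a bound.
        print(f"lambda={lam}: MC {est[lam]:.4f}, CLT approx {chi2_3_tail(d * lam):.4f}")
```

Reusing a single sample for all $\lambda$ keeps the estimated tail monotone in $\lambda$.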

U-statistics approach: squaring, we obtain $$M^2=\left\|\frac{1}{n}\sum_{i=1}^n U_i \right\|^2 = \frac{1}{n} +\frac{1}{n^2}\sum_{i \neq j} \langle U_i, U_j\rangle .$$ The law of each individual term $\langle U_i, U_j\rangle$ is known (see Distribution of scalar products of two random unit vectors in $D$ dimensions and https://math.stackexchange.com/questions/2977867/x-coordinate-distribution-on-the-n-sphere), but these terms are not independent (they share the $U_i$'s), so the law of their sum is not easy to obtain.
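A short second-moment computation shows what this decomposition gives. Write $X_{ij}=\langle U_i,U_j\rangle$ for $i\neq j$. Then $\mathbb{E}X_{ij}=0$ and $\mathbb{E}X_{ij}^2=\mathbb{E}\left[U_i^\top \mathbb{E}[U_jU_j^\top]\,U_i\right]=1/d$, since $\mathbb{E}[U_jU_j^\top]=I_d/d$. Distinct pairs are uncorrelated although not independent: for distinct $i,j,k$, $\mathbb{E}[X_{ij}X_{ik}]=\mathbb{E}[U_j]^\top\,\mathbb{E}[U_iU_i^\top]\,\mathbb{E}[U_k]=0$. Since $\sum_{i\neq j}X_{ij}=2\sum_{i<j}X_{ij}$,

$$\mathbb{E}\left[M^2\right]=\frac{1}{n},\qquad \operatorname{Var}\left(M^2\right)=\frac{4}{n^4}\operatorname{Var}\Big(\sum_{i<j}X_{ij}\Big)=\frac{4}{n^4}\binom{n}{2}\frac{1}{d}=\frac{2(n-1)}{d\,n^3}.$$

So $nM^2$ has mean $1$ and variance $2(n-1)/(dn)<2/d$. Second-moment information therefore only describes $nM^2$ at the scale of its mean, which suggests that reaching $\lambda$ of order $n$ requires finer information on the tail.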

Another possibility is to isolate $U_1$. Writing $V=\sum_{i=2}^n U_i$, we have $$M^2=\left\|\frac{1}{n}\sum_{i=1}^n U_i \right\|^2 = \frac{1}{n^2} +\frac{2}{n^2}\langle U_1, V\rangle + \left\|\frac{1}{n}V \right\|^2 .$$ Then, by independence of the $U_i$'s, $$\mathbb{P}\left(M^2 \le x\right)=\mathbb{E}\left[ \mathbb{P}\left(\frac{1}{n^2} +\frac{2}{n^2}\langle U_1, V\rangle + \left\|\frac{1}{n}V \right\|^2 \le x\,\middle|\, U_2,\dots, U_n\right)\right].$$ Conditionally on $U_2,\dots,U_n$ (with $n\ge 2$, so that $V\neq 0$ almost surely), $\langle U_1, V/\|V\|\rangle$ has the law of one coordinate of a uniform point on $S^{d-1}$, so $(1+\langle U_1, V/\|V\|\rangle)/2$ follows a $Beta\left(\frac{d-1}{2},\frac{d-1}{2}\right)$ distribution. The normalisation by $\|V\|$ matters, since $V$ is not a unit vector. Writing $\langle U_1,V\rangle=\|V\|(2B-1)$ with $B$ this Beta variable, the event $M^2\le x$ becomes $B\le \frac{n^2x-(\|V\|-1)^2}{4\|V\|}$, hence $$\mathbb{P}\left(M^2 \le x\right)=\mathbb{E}\left[ F_{Beta\left(\frac{d-1}{2},\frac{d-1}{2}\right)}\left( \frac{n^2x-(\|V\|-1)^2}{4\|V\|} \right)\right],$$ with the convention $F(t)=0$ for $t<0$ and $F(t)=1$ for $t>1$. I would like to find an upper bound on this expectation, but I don't think I will succeed with this line of thought.
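The conditioning step can be checked numerically. In the form $\mathbb{P}(M^2\le x)=\mathbb{E}\left[F\left(\frac{n^2x-(\|V\|-1)^2}{4\|V\|}\right)\right]$ with $V=\sum_{i=2}^n U_i$, where $F$ is the CDF of $Beta\left(\frac{d-1}{2},\frac{d-1}{2}\right)$, the case $d=3$ is especially simple: $F$ is the CDF of $Beta(1,1)$, i.e. the uniform law on $[0,1]$ (Archimedes' theorem). The sketch below (Python, standard library only; names hypothetical; restricted to $d=3$ and $n\ge 2$) compares a direct Monte Carlo estimate of $\mathbb{P}(M^2\le x)$ with the Monte Carlo average of this conditional CDF.

```python
import math
import random


def unit_vector(d, rng):
    """Uniform point on S^{d-1}: normalised standard Gaussian vector."""
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in g))  # zero only with probability zero
    return [x / norm for x in g]


def beta11_cdf(t):
    """CDF of Beta(1, 1) = Uniform(0, 1), clamped to 0 below 0 and 1 above 1."""
    return min(max(t, 0.0), 1.0)


def compare(n, x, num_samples=10000, seed=0):
    """Estimate P(M^2 <= x) for d = 3 (n >= 2) directly and via the conditional Beta formula."""
    d = 3  # the only case used here: F_{Beta(1,1)} is the uniform CDF
    rng = random.Random(seed)
    direct = 0
    conditional = 0.0
    for _ in range(num_samples):
        u1 = unit_vector(d, rng)
        v = [0.0] * d  # V = U_2 + ... + U_n
        for _ in range(n - 1):
            u = unit_vector(d, rng)
            for k in range(d):
                v[k] += u[k]
        full = [u1[k] + v[k] for k in range(d)]
        m2 = sum(c * c for c in full) / n**2
        direct += m2 <= x
        norm_v = math.sqrt(sum(c * c for c in v))
        conditional += beta11_cdf((n**2 * x - (norm_v - 1) ** 2) / (4 * norm_v))
    return direct / num_samples, conditional / num_samples


if __name__ == "__main__":
    n = 10
    for x in (0.5 / n, 1 / n, 2 / n):
        direct, cond = compare(n, x)
        print(f"x = {x:.3f}: direct {direct:.4f}, conditional {cond:.4f}")
```

Both columns estimate the same probability, so they should agree up to Monte Carlo error. The conditional column averages out $U_1$ exactly and is typically less noisy.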