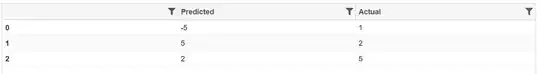

Given the following test data:

import pandas as pd

import qgrid

predictions = [-5, 5, 2]

actual = [1, 2, 5]

predictions[:3]

dataset = pd.DataFrame()

dataset['Predicted'] = predictions

dataset['Actual'] = actual

qgrid.show_grid(dataset,show_toolbar=True)

renders:

I'm attempting to find the error threshold that gives the highest prediction accuracy.

For example, to measure the percent change between the predicted and actual values, I use:

dataset['percent-change'] = ((dataset['Actual'] - dataset['Predicted']) / dataset['Predicted'] * 100)

dataset

which renders:
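For the three test rows, the `percent-change` column can be recomputed by hand. The following is a minimal sketch in plain Python (no pandas, so it runs standalone); the helper name `percent_change` is hypothetical and only mirrors the expression above. Note that the result is signed and is taken relative to the predicted value.

```python
# Same test data as above.
predictions = [-5, 5, 2]
actual = [1, 2, 5]

def percent_change(actual_value, predicted_value):
    """Signed percent difference, relative to the predicted value."""
    return (actual_value - predicted_value) / predicted_value * 100

percent_changes = [percent_change(a, p) for a, p in zip(actual, predictions)]

# Rounded to one decimal to keep floating-point noise out of the display.
print([round(v, 1) for v in percent_changes])  # [-120.0, -60.0, 150.0]
```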

To measure the accuracy of the predictions at different thresholds, I use:

a= []

p = []

number_correct_at_threshold = []

number_incorrect_at_threshold = []

allowed_percent_error = 0

step_size = 100

for r in range(0, 3):

    # Flag predictions whose percent change is within the current threshold (stored as 0/1).
    dataset['is-prediction-correct'] = dataset['percent-change'] <= allowed_percent_error

    dataset['is-prediction-correct'] = dataset['is-prediction-correct'] * 1

    number_correct_at_threshold.append(len(dataset[dataset['is-prediction-correct'] == 1]))

    number_incorrect_at_threshold.append(len(dataset[dataset['is-prediction-correct'] == 0]))

    dataset['ts'] = dataset.index

    accuracy = round(len(dataset[dataset['is-prediction-correct'] == True]) / len(dataset) * 100, 3)

    a.append(accuracy)

    p.append(allowed_percent_error)

    print(r)

    # Move on to the next threshold.
    allowed_percent_error = allowed_percent_error + step_size

dataset_results = pd.DataFrame()

dataset_results['Accuracy'] = a

dataset_results['Threshold'] = p

dataset_results['# Correct Predictions'] = number_correct_at_threshold

dataset_results['# Incorrect Predictions'] = number_incorrect_at_threshold

qgrid.show_grid(dataset_results,show_toolbar=True)

which renders:
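The threshold sweep can also be written as a small standalone function, which makes the numbers for the three test rows easy to check. This is only a sketch in plain Python, not the pandas code above: the function name `accuracy_at_thresholds` and the `use_absolute` switch are assumptions added for illustration. The switch exists because the comparison above is signed, so a large negative percent change (for example -120) counts as correct at every threshold; whether that is intended is a modelling choice.

```python
# Percent changes for the three test rows, from the formula used above.
percent_changes = [-120.0, -60.0, 150.0]

def accuracy_at_thresholds(values, thresholds, use_absolute=False):
    """Return (threshold, n_correct, n_incorrect, accuracy_percent) per threshold.

    use_absolute is a hypothetical switch, not part of the original code:
    when True, the magnitude of the percent change is compared instead.
    """
    rows = []
    for threshold in thresholds:
        if use_absolute:
            n_correct = sum(abs(v) <= threshold for v in values)
        else:
            n_correct = sum(v <= threshold for v in values)
        accuracy = round(n_correct / len(values) * 100, 3)
        rows.append((threshold, n_correct, len(values) - n_correct, accuracy))
    return rows

# allowed_percent_error starts at 0 and grows by step_size = 100 for 3 steps.
thresholds = [0, 100, 200]

signed_rows = accuracy_at_thresholds(percent_changes, thresholds)
absolute_rows = accuracy_at_thresholds(percent_changes, thresholds, use_absolute=True)

print(signed_rows)
# [(0, 2, 1, 66.667), (100, 2, 1, 66.667), (200, 3, 0, 100.0)]
print(absolute_rows)
# [(0, 0, 3, 0.0), (100, 1, 2, 33.333), (200, 3, 0, 100.0)]
```

With the signed comparison, accuracy is already 66.667% at a threshold of 0, which suggests the absolute variant may be closer to what an "allowed percent error" is meant to express.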

In addition, I plan to sum the distance from each prediction to the actual value at each threshold, to gain more information about how well the algorithm is performing.
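One possible reading of that plan, sketched in plain Python: take the absolute distance between each prediction and its actual value, sum it over all rows, and also sum it over only the rows counted as correct at each threshold. The per-threshold interpretation and the variable names are assumptions, and the "correct" rule reuses the signed `<=` comparison from the loop above. The mean of the absolute distances is the quantity usually called mean absolute error.

```python
predictions = [-5, 5, 2]
actual = [1, 2, 5]

# Absolute distance between each prediction and its actual value.
distances = [abs(a - p) for a, p in zip(actual, predictions)]

total_distance = sum(distances)                   # summed distance over all rows
mean_distance = total_distance / len(distances)   # mean absolute error

# Signed percent change, relative to the predicted value (as in the question).
percent_changes = [(a - p) / p * 100 for a, p in zip(actual, predictions)]

# Assumed interpretation: at each threshold, sum the distances of the
# predictions that the signed comparison counts as correct.
distance_within_threshold = {
    threshold: sum(d for d, pc in zip(distances, percent_changes) if pc <= threshold)
    for threshold in [0, 100, 200]
}

print(distances)                  # [6, 3, 3]
print(total_distance)             # 12
print(mean_distance)              # 4.0
print(distance_within_threshold)  # {0: 9, 100: 9, 200: 12}
```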

Are there other metrics I can use to measure the accuracy of regression predictions?