Reproduction of your problem

I can reproduce your result with the following R code:

    library(dHSIC)

    ######## function to generate a time series
    ### for the Ornstein-Uhlenbeck process using the Euler-Maruyama method
    ###   time  = length of the time interval of the time series
    ###   n     = number of steps (the series has n + 1 points)
    ###   mu    = long-term mean level
    ###   theta = speed of reversion
    ###   sigma = noise level
    #######
    timeseries <- function(time, n, mu, theta, sigma) {
      # differential equation
      #   dX = theta * (mu - X) dt + sigma dW
      dt <- time / n

      ### initialization x[1], drawn from an approximate stationary distribution
      ###   Var(x) = Var(x) * (1 - theta*dt) + sigma^2 * dt
      ###   Var(x) = sigma^2 / theta
      ### note: the exact stationary variance of this process is sigma^2 / (2*theta)
      ### (the factor above should be (1 - theta*dt)^2); the value sigma^2 / theta
      ### is kept here so that the results reported below stay reproducible
      x <- numeric(n + 1)
      x[1] <- rnorm(n = 1, mean = mu, sd = sqrt(sigma^2 / theta))

      ### Euler-Maruyama steps: compute x[i + 1] from x[i]
      for (i in 1:n) {
        x[i + 1] <- x[i] + theta * (mu - x[i]) * dt +
          sigma * rnorm(n = 1, mean = 0, sd = sqrt(dt))
      }
      x
    }

    #### compute 4 time series
    set.seed(1)
    t <- seq(0, 1, 1/1000)
    x1 <- timeseries(1, 1000, 0, 10, 2)
    x2 <- timeseries(1, 1000, 0, 10, 2)
    x3 <- timeseries(1, 1000, 0, 10, 2)
    x4 <- timeseries(1, 1000, 0, 10, 2)

    ### place in matrix form (does not need to be multiple columns)
    m1 <- matrix(x1, ncol = 1)
    m2 <- matrix(x2, ncol = 1)
    m3 <- matrix(x3, ncol = 1)
    m4 <- matrix(x4, ncol = 1)

    ### perform independence test
    dhsic.test(list(m1, m2, m3, m4), method = "permutation", kernel = c("gaussian"), B = 1000)

    ### plot the time series
    plot(t, x1, type = "l", ylim = c(-1.5, 1.5))
    lines(t, x2, col = 2)
    lines(t, x3, col = 3)
    lines(t, x4, col = 4)

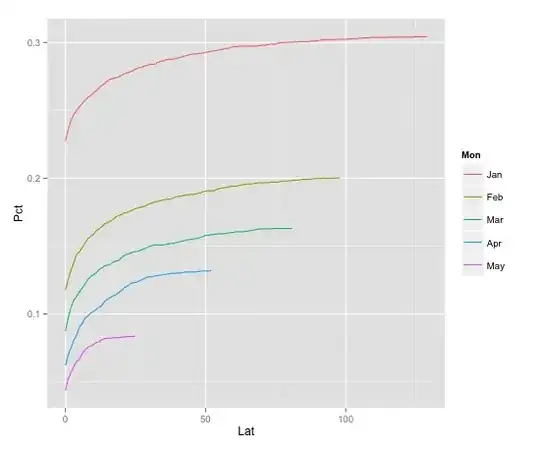

This gives a p-value of 0.00990099 and the time series look as following:

Explanation: effective degrees of freedom

I do not know the details of the dHSIC method well (I still need to read through the article). However, we can already argue that, in general, tests for independence may underestimate the p-value when they are applied to time series like these.

For instance, a test for the correlation coefficient, `cor.test(x1, x2, method = "pearson")`, also gives very low p-values.
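As a rough check of this claim, the following sketch estimates how often `cor.test` rejects at the 5% level for pairs of series that are independent by construction. It is not the code behind the numbers above: `ar1` and `reject_rate` are hypothetical helpers, and a stationary AR(1) series is used as a stand-in for the discretized Ornstein-Uhlenbeck process, assuming a coefficient `phi = 1 - theta*dt = 0.99` (for `theta = 10`, `dt = 1/1000`).

```r
set.seed(42)

# Hypothetical helper: stationary AR(1) series x[i] = phi * x[i-1] + e[i]
ar1 <- function(n, phi) {
  e <- rnorm(n)
  e[1] <- e[1] / sqrt(1 - phi^2)   # draw the start from the stationary distribution
  as.numeric(stats::filter(e, filter = phi, method = "recursive"))
}

# Fraction of independent pairs for which cor.test rejects at the 5% level
reject_rate <- function(phi, n = 1000, n_rep = 500) {
  p <- replicate(
    n_rep,
    cor.test(ar1(n, phi), ar1(n, phi), method = "pearson")$p.value
  )
  mean(p < 0.05)
}

rate_iid <- reject_rate(phi = 0)     # no autocorrelation
rate_ar  <- reject_rate(phi = 0.99)  # strong autocorrelation
c(iid = rate_iid, autocorrelated = rate_ar)
```

With independent values the rejection rate should stay close to the nominal 5%. With strong autocorrelation it should come out far higher, even though the two series in each pair are independent of each other.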

The reason is that the time series are autocorrelated. You can see this, for instance, in the image above: on the right, between 0.8 and 1 seconds, the red, black and blue curves all linger near -0.5.
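One way to make this autocorrelation visible is the sample autocorrelation function. The sketch below regenerates a single series with the same Euler-Maruyama step and parameters (it does not reuse `x1`, and the start value is drawn with standard deviation `sigma / sqrt(2 * theta)`, which is an assumption of this example) and compares its lag-1 autocorrelation with that of a shuffled copy.

```r
set.seed(1)
n <- 1000
dt <- 1 / n
theta <- 10
sigma <- 2

# Euler-Maruyama simulation of dX = -theta * X dt + sigma dW (mean level 0)
x <- numeric(n + 1)
x[1] <- rnorm(1, mean = 0, sd = sigma / sqrt(2 * theta))
for (i in 1:n) {
  x[i + 1] <- x[i] - theta * x[i] * dt + sigma * rnorm(1, mean = 0, sd = sqrt(dt))
}

# acf()$acf holds lag 0 in position 1 and lag 1 in position 2
lag1_original <- acf(x, lag.max = 1, plot = FALSE)$acf[2]
lag1_shuffled <- acf(sample(x), lag.max = 1, plot = FALSE)$acf[2]
c(original = lag1_original, shuffled = lag1_shuffled)
```

The original series should show a lag-1 autocorrelation close to 1, while shuffling destroys the time order and should bring it close to 0.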

However, many tests for independence assume that the values within each time series are uncorrelated. Autocorrelation violates this assumption, and its effect is to reduce the effective degrees of freedom.

If you have correlated measurements instead of independent measurements, then you are going to see a high correlation between time series more often, purely due to random fluctuations.
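To put a rough number on this, one can use a standard large-sample approximation for the sample correlation $r$ of two independent AR(1) series with lag-1 autocorrelations $\rho_X$ and $\rho_Y$. Treating the series above as AR(1) with $\rho_X = \rho_Y \approx 1 - \theta\,dt = 0.99$ is an assumption made here for illustration:

$$\operatorname{Var}(r) \approx \frac{1}{n}\,\frac{1+\rho_X\rho_Y}{1-\rho_X\rho_Y}, \qquad n_{\text{eff}} \approx n\,\frac{1-\rho_X\rho_Y}{1+\rho_X\rho_Y} \approx 1000 \cdot \frac{1-0.99^2}{1+0.99^2} \approx 10$$

Under this approximation, the 1000 autocorrelated points carry about as much information about the correlation as roughly ten independent points.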

Intuition with a simplified example: Imagine the following two extreme situations.

We have two samples $X_i$ and $Y_i$ of size $n=1000$.

Each $X_i$ and $Y_i$ is independent:

$$X_i, Y_i \sim N(0,1)$$

This gives a table like:

| i | X | Y |
|---|---|---|
| 1 | -2.05 | 0.17 |
| 2 | -0.86 | 1.56 |
| 3 | -0.39 | -0.08 |
| 4 | 0.09 | 1.05 |
| 5 | 0.18 | 1.84 |
| ... | ... | ... |
| 1000 | 0.19 | -1.26 |

We have two samples $X_i$ and $Y_i$ of size $n=1000$.

Within each block of 100 consecutive samples all values are equal: $X_i = \mu_{X,j}$ and $Y_i = \mu_{Y,j}$, where $j = \lceil i/100 \rceil$.

The block values $\mu_{X,j}$ and $\mu_{Y,j}$ are independent:

$$\mu_{X,j}, \mu_{Y,j} \sim N(0,1)$$

This gives a table like:

| i | X | Y |
|---|---|---|
| 1 | -2.05 | 0.17 |
| 2 | -2.05 | 0.17 |
| 3 | -2.05 | 0.17 |
| 4 | -2.05 | 0.17 |
| 5 | -2.05 | 0.17 |
| ... | ... | ... |
| 998 | 0.19 | -1.26 |
| 999 | 0.19 | -1.26 |
| 1000 | 0.19 | -1.26 |

The time series in this second example are still independent of each other: the ten values $\mu_{X,j}$ and $\mu_{Y,j}$ are independent. However, the test assumes that each sample consists of 1000 independent realizations rather than 1000 correlated ones. This affects the sampling distribution of the correlation coefficient (or of other statistics that measure dependence) and the computation of the related p-values.

There will always be some correlation due to random fluctuations. These fluctuations are larger for ten values than for a thousand values. The autocorrelated time series behave much like the block example: the autocorrelation increases the random fluctuations in the correlation between different time series, whereas the test assumes the smaller fluctuations that would hold if all 1000 rows/measurements were independent.
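The two extreme situations can be simulated directly. The sketch below (the variable names and the 2000 repetitions are choices made for this illustration) draws many independent pairs of each kind and compares the spread of the sample correlation.

```r
set.seed(1)
n <- 1000       # sample size
block <- 100    # block length in the second situation
n_rep <- 2000   # number of simulated pairs

# Situation 1: all 1000 values are independent
r_iid <- replicate(n_rep, cor(rnorm(n), rnorm(n)))

# Situation 2: ten independent block values, each repeated 100 times
block_sample <- function() rep(rnorm(n / block), each = block)
r_block <- replicate(n_rep, cor(block_sample(), block_sample()))

c(sd_iid = sd(r_iid), sd_block = sd(r_block))
```

The spread in the second situation should be close to what one gets for samples of size ten (about $1/\sqrt{9} \approx 0.33$) rather than for samples of size 1000 (about $1/\sqrt{999} \approx 0.03$). A test that assumes the smaller spread will declare the block correlations significant far too often.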

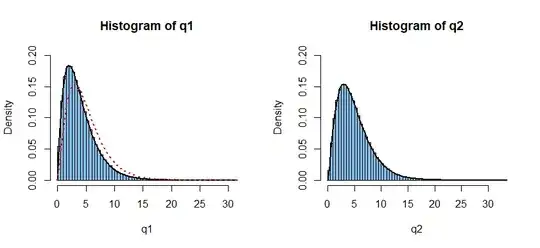

See also the difference in the time series when we look at the original sample or at a completely shuffled sample:

In the first/upper graph you see that the time series have a tendency to occassinaly move along with each other. That is due to random behaviour. However, the test assumes a much more extreme random behaviour and that is the behaviour in the second/lower graph which is for completely shuffled curves.

    par(mfrow = c(2, 1))  # stack the two plots: original on top, shuffled below

    plot(t, x1, type = "l", ylim = c(-1.5, 1.5), xlim = c(0.85, 0.95), main = "original sample")
    lines(t, x2, col = 2)
    lines(t, x3, col = 3)
    lines(t, x4, col = 4)

    plot(t, sample(x1), type = "l", ylim = c(-1.5, 1.5), xlim = c(0.85, 0.95), main = "shuffled sample")
    lines(t, sample(x2), col = 2)
    lines(t, sample(x3), col = 3)
    lines(t, sample(x4), col = 4)