Not due to mean reverting behaviour

I agree with sp59b2 that a longer time span and a larger number of observations will improve the results.

However, I do not believe that this is due to mean-reverting behaviour at the beginning of the time series when the starting point is far away. You initialize the first point such that it is already drawn from the limiting long-run distribution.

(Although I would argue that the variance is $\sigma^2/\theta$ instead of $\sigma^2/(2\theta)$, based on the equation $\text{Var}(x) = \text{Var}(x) (1-\theta \Delta t) + \sigma^2 \Delta t$, this does not seem to be the reason for the high p-values: if you initialize correctly, so that there is no initial phase of reverting to the mean, you still get the high p-values.)

Instead, the reason why longer runs make you more likely to reject the null hypothesis is that the test has more power.

Power

> the null hypothesis (of non-stationarity) is typically accepted with high p-value

A high p-value does not mean that you accept the null hypothesis.

Instead, it just means that you do not (cannot) reject the null hypothesis.

It means that either the null hypothesis is true, or your effect (the difference from the unit root) is not large enough to be distinguished from a unit root.

With either a larger effect (a smaller root) or a larger amount of data, you will be more likely to detect the stationarity.
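As a rough illustration of the effect of the amount of data, the sketch below estimates the rejection rate for a stationary series whose root is close to one, at a few series lengths. It is only a sketch under assumptions that are not in the question: it uses a plain AR(1) process from `arima.sim` instead of the `timeseries()` function, and the coefficient `phi = 0.95`, the lengths, the number of replications, the seed and the helper name `power_at` are all arbitrary choices.

```r
# Sketch: estimated power of the ADF test for a near-unit-root AR(1) series
set.seed(3)                                   # arbitrary seed

# hypothetical helper: fraction of rejections at the 5% level
power_at <- function(n_obs, phi = 0.95, n_rep = 100) {
  mean(replicate(n_rep, {
    x <- arima.sim(model = list(ar = phi), n = n_obs)   # stationary AR(1)
    # adf.test warns when the p-value falls outside its table; silence that
    suppressWarnings(tseries::adf.test(x)$p.value) < 0.05
  }))
}

n_values <- c(100, 300, 1000)                 # series lengths to compare
power <- sapply(n_values, power_at)
data.frame(n = n_values, power = power)
```

The series is stationary at every length, but the fraction of rejections should grow with the number of observations.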

When the null hypothesis is true, that is, when you have a unit root, the p-value should be uniformly distributed, and the probability of rejecting the null hypothesis should equal the $\alpha$ level.

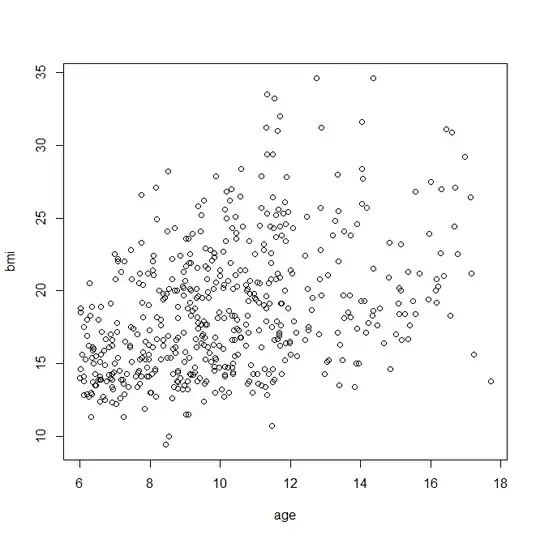

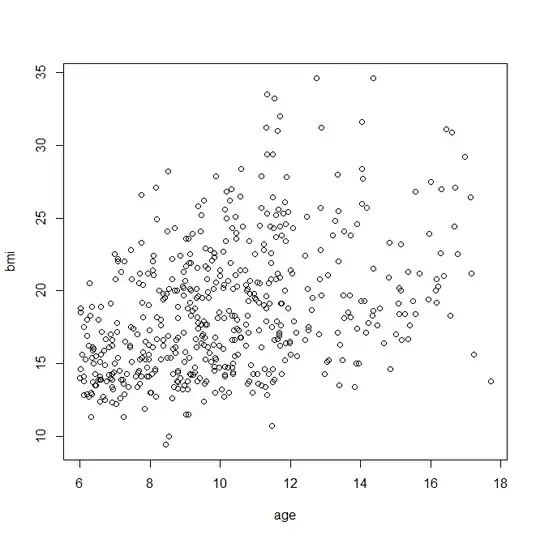
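A quick way to check the behaviour under the null hypothesis yourself is to simulate series that do have a unit root and count the rejections. The sketch below assumes a simple Gaussian random walk; the seed, the series length and the number of replications are arbitrary choices.

```r
# Sketch: rejection rate of the ADF test when the null (unit root) is true
set.seed(1)                        # arbitrary seed
n_rep <- 200                       # number of simulated series (assumed)
n_obs <- 500                       # length of each series (assumed)

p_null <- replicate(n_rep, {
  x <- cumsum(rnorm(n_obs))        # random walk, so the unit root is real
  # adf.test warns when the p-value falls outside its table; silence that
  suppressWarnings(tseries::adf.test(x)$p.value)
})

reject_rate <- mean(p_null < 0.05) # fraction of rejections at the 5% level
reject_rate
```

The fraction of rejections should be in the neighbourhood of 0.05, up to simulation noise. Note that `tseries::adf.test` only reports p-values within the range of its table, so a histogram of `p_null` will show spikes at the ends instead of a perfectly flat shape.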

However, when the null hypothesis is not true, you can still be arbitrarily close to it (a root that is very close to a unit root), which means that the probability of rejecting is not much higher than the $\alpha$ level. See for instance the graph below, which shows estimates of the power as a function of $\theta$. If $\theta$ is close to 0, then the rejection probability will be close to the rejection probability under the null hypothesis (in this case 0.05). When $\theta$ increases (when the alternative differs more from the null), the probability that the test rejects the null hypothesis increases as well.

```r
### function timeseries() from https://stats.stackexchange.com/a/491928

### compute for 100 different values of theta
mup <- rep(0, 100)
for (i in 1:100) {
  ### count the rejections out of hundred tests
  mup[i] <- sum(replicate(10^2, tseries::adf.test(
    timeseries(time = 1, n = 1000, mu = 0, theta = i, sigma = 2)
  )$p.value < 0.05))
}

### plot the fraction of rejections against theta
plot(1:100, mup/100,
     xlab = expression(theta), ylab = "fraction of rejections p<0.05")
```

P-value calculation

In addition, the p-values of the Dickey-Fuller test are computed in a particular way that makes them not exact. What you are effectively doing is fitting a linear model of the form

$$x_t - x_{t-1} = \alpha x_{t-1} + \beta_0 + \beta_1 t + \epsilon_t$$

and the null hypothesis is $\alpha = 0$. The Dickey-Fuller test uses the same t-value of the coefficient as is normally computed for linear regression. However, the p-value is not based on the t-distribution (this is because the residual terms $\epsilon$ are not independent: if some $x_t$ is higher or lower due to randomness, then this will influence both residuals $\epsilon_t$ and $\epsilon_{t+1}$ in the regression).
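To make the regression concrete, here is a minimal sketch that fits it with `lm` and compares the result with `tseries::adf.test`. The example series (a random walk), the seed and the variable names are assumptions made for illustration. Setting `k = 0` asks `adf.test` to use no additional lagged differences, so that it corresponds to the plain regression on the lagged level, an intercept and a linear trend.

```r
# Sketch: the Dickey-Fuller statistic as an ordinary regression t-value
set.seed(2)                        # arbitrary seed
x     <- cumsum(rnorm(300))        # example series (assumed): a random walk
dx    <- diff(x)                   # x_t - x_{t-1}
x_lag <- x[-length(x)]             # x_{t-1}
tt    <- seq_along(dx)             # linear time trend

fit <- lm(dx ~ x_lag + tt)         # the intercept is included by default
t_value <- summary(fit)$coefficients["x_lag", "t value"]

# naive one-sided p-value from the t-distribution (not what the test uses)
p_naive <- pt(t_value, df = fit$df.residual)

# same statistic, but with the p-value interpolated from Dickey-Fuller tables
adf <- suppressWarnings(tseries::adf.test(x, k = 0))
c(t_value = t_value, adf_stat = unname(adf$statistic),
  p_naive = p_naive, p_adf = adf$p.value)
```

The two statistics should agree, while the two p-values differ because they refer the same number to different reference distributions.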

The p-values are computed by interpolation from tables, and this might influence the p-value (although I believe that this does not have a strong effect; I mention it just to be complete).