The comparison only makes sense when you can logit-transform the observed proportions. That means you can't do it on 0/1 data; you must already have a set of proportions $p_i=X_i/n_i$ where none of the $X_i$ are $0$ or $n_i$.
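
To make that restriction concrete, here is a minimal Python sketch that logit-transforms grouped proportions and refuses any group with $X_i=0$ or $X_i=n_i$, where the logit would be infinite. The helper name `empirical_logits` is my own, purely for illustration.

```python
import math

def empirical_logits(counts, trials):
    """Return logit(X_i / n_i) for each group (hypothetical helper).

    Raises ValueError if any X_i is 0 or n_i, because the logit of a
    proportion of exactly 0 or 1 is infinite.
    """
    out = []
    for x, n in zip(counts, trials):
        if not 0 < x < n:
            raise ValueError(f"count {x} out of {n} gives an infinite logit")
        p = x / n
        out.append(math.log(p / (1 - p)))
    return out

print(empirical_logits([3, 10, 17], [20, 20, 20]))
```

Passing raw 0/1 responses (each with $n_i=1$) fails immediately, which is exactly why this comparison needs grouped data.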

At heart, the distributional assumptions differ (a binomial likelihood for the counts versus normal errors with constant variance on the logit scale), so the likelihood function being maximized is different. Naturally, if you optimize different functions of the data, the resulting parameter estimates (etc.) will differ.
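
As an illustration, the following simulates grouped binomial counts with a logistic mean and fits both models: the binomial GLM (logistic regression) by iteratively reweighted least squares, and ordinary least squares on the empirical logits. This is a Python sketch using only the standard library, not the code behind the plots here. The function names, the simulation settings (7 groups of $n=40$, true intercept 0 and slope 1), and the choice to simply redraw any group whose count lands on $0$ or $n$ are all assumptions made for the example.

```python
import math
import random

def logit(p):
    return math.log(p / (1 - p))

def expit(eta):
    return 1.0 / (1.0 + math.exp(-eta))

def wls_line(x, z, w):
    """Weighted least squares fit of z = b0 + b1*x; returns (b0, b1)."""
    sw = sum(w)
    sx = sum(wi * xi for wi, xi in zip(w, x))
    sxx = sum(wi * xi * xi for wi, xi in zip(w, x))
    sz = sum(wi * zi for wi, zi in zip(w, z))
    sxz = sum(wi * xi * zi for wi, xi, zi in zip(w, x, z))
    det = sw * sxx - sx * sx
    return ((sxx * sz - sx * sxz) / det, (sw * sxz - sx * sz) / det)

def fit_ols_on_logits(x, counts, trials):
    """Linear regression on empirical logits: every group gets equal weight."""
    z = [logit(c / n) for c, n in zip(counts, trials)]
    return wls_line(x, z, [1.0] * len(x))

def fit_logistic_irls(x, counts, trials, iters=30):
    """Binomial GLM with logit link, maximized by IRLS."""
    b0 = b1 = 0.0
    for _ in range(iters):
        eta = [b0 + b1 * xi for xi in x]
        mu = [expit(e) for e in eta]
        # working weights n*mu*(1-mu); working response eta + (y - mu)/(mu*(1-mu))
        w = [n * m * (1 - m) for n, m in zip(trials, mu)]
        z = [e + (c / n - m) / (m * (1 - m))
             for e, c, n, m in zip(eta, counts, trials, mu)]
        b0, b1 = wls_line(x, z, w)
    return b0, b1

def simulate(x, n, b0, b1, rng):
    """Binomial counts with logistic mean, redrawing any count of 0 or n."""
    counts = []
    for xi in x:
        p = expit(b0 + b1 * xi)
        while True:
            c = sum(rng.random() < p for _ in range(n))
            if 0 < c < n:
                break
        counts.append(c)
    return counts

rng = random.Random(1)
x = [-1.5 + 0.5 * i for i in range(7)]
trials = [40] * len(x)
counts = simulate(x, 40, 0.0, 1.0, rng)
glm = fit_logistic_irls(x, counts, trials)
ols = fit_ols_on_logits(x, counts, trials)
print("binomial GLM (b0, b1):", glm)
print("OLS on logits (b0, b1):", ols)
```

The two pairs of estimates will generally not agree exactly, because each maximizes a different function of the same counts.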

However, let's look at this in more basic terms by considering just the first couple of moments. Applying a nonlinear transformation changes the mean (introducing a bias relative to the original scale) and the variance (which changes the relative weight each observation gets). Rather than go through the algebra, I'll just show what happens when we transform a binomial proportion to the logit scale.

The variance-stabilizing transformation for the binomial is the arcsine square root, $\sin^{-1}(\sqrt{p})$, which is different from the logit. Relatively speaking,\* the logit pulls the most extreme values out more strongly than the variance-stabilizing transformation does, which we would expect to make the variances larger near the ends.
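
For anyone who does want the first-order algebra, the delta method with $\hat p = X/n$ and $X\sim\operatorname{Bin}(n,p)$ gives the approximate variance of a transformed proportion, and for the two transformations in question:

$$
\operatorname{Var}\big(g(\hat p)\big) \approx g'(p)^2\,\frac{p(1-p)}{n}
$$

$$
\operatorname{Var}\big(\operatorname{logit}\hat p\big) \approx \frac{1}{n\,p(1-p)}, \qquad
\operatorname{Var}\big(\sin^{-1}\!\sqrt{\hat p}\big) \approx \frac{1}{4n}.
$$

The logit's approximate variance is smallest at $p=1/2$, where it equals $4/n$, and grows without bound as $p\to 0$ or $p\to 1$, while the arcsine square root's is approximately constant.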

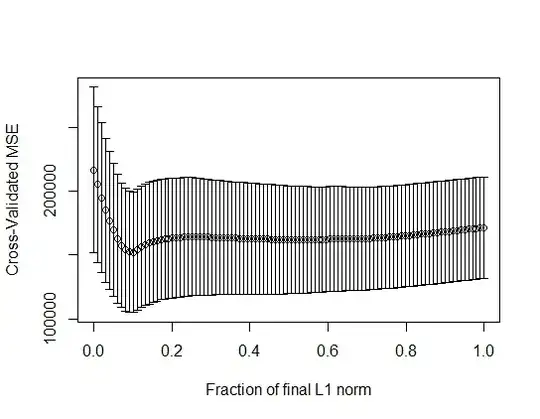

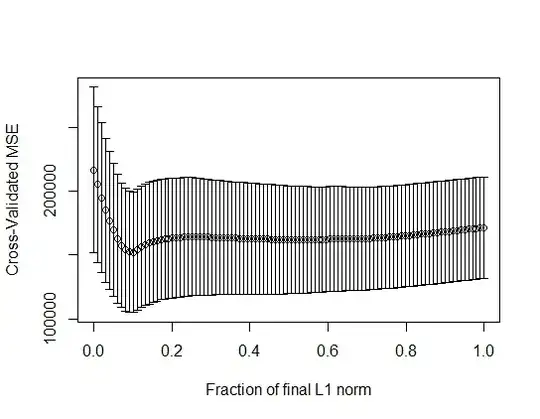

If we generate a lot of binomial data whose population proportion actually follows a logistic curve across a range of values, and then logit-transform the observed proportions, we can see that the variance is not constant on the logit scale, even though the linear model fit treats it as if it were.
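
A rough Python version of that experiment compares the simulated variance of $\operatorname{logit}(X/n)$ with the delta-method approximation $1/(np(1-p))$. It is only a sketch: $n=50$, the grid of proportions, the seed and the number of replicates are arbitrary choices, and draws with $X=0$ or $X=n$ are discarded because their logit is infinite.

```python
import math
import random
import statistics

def logit(p):
    return math.log(p / (1 - p))

def logit_variance(p, n, reps, rng):
    """Sample variance of logit(X/n) for X ~ Bin(n, p), discarding X = 0 or X = n."""
    values = []
    while len(values) < reps:
        x = sum(rng.random() < p for _ in range(n))  # one binomial draw
        if 0 < x < n:
            values.append(logit(x / n))
    return statistics.variance(values)

rng = random.Random(2024)
n = 50
spread = {}
for p in (0.1, 0.3, 0.5, 0.7, 0.9):
    spread[p] = logit_variance(p, n, 4000, rng)
    approx = 1 / (n * p * (1 - p))  # delta-method approximation
    print(f"p={p}: simulated variance {spread[p]:.3f}, approximation {approx:.3f}")
```

The simulated variance at $p=0.1$ and $p=0.9$ comes out markedly larger than at $p=0.5$, which is what an unweighted fit on the logit scale ignores.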

The spread is indeed wider near the ends than in the middle, as we anticipated. As a result, the linear model on the logit scale puts (relatively) too much weight on the extreme values, which are noisier and so should be downweighted.
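
To put a number on "too much weight": if the logit-scale variance is roughly $1/(np(1-p))$, inverse-variance weights would be proportional to $np(1-p)$, whereas the unweighted linear fit gives every point the same weight. For groups of equal size, a point at proportion $p$ is therefore overweighted, relative to one at $p=1/2$, by a factor of $1/(4p(1-p))$. The helper below is a hypothetical illustration of that arithmetic.

```python
def overweight_factor(p):
    """Hypothetical helper: weight an unweighted logit-scale fit gives a point at
    proportion p, relative to a point at p = 0.5, divided by the same ratio under
    inverse-variance weights n*p*(1-p) (equal n assumed)."""
    return 1 / (4 * p * (1 - p))

for p in (0.5, 0.25, 0.1, 0.05, 0.01):
    print(f"p={p}: overweighted by a factor of {overweight_factor(p):.2f}")
```

At $p=0.1$ the factor is about 2.8, and at $p=0.01$ it is about 25.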

We also see in the plot that near the extremes the transformation isn't close to symmetric: when the population proportion is near either end, an observed proportion that is (by chance) more extreme than expected gets pulled out more strongly (made more extreme) than an equally large deviation toward the middle is pulled in.
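
A tiny numerical example of that asymmetry, with arbitrary values $n=50$ and $p=0.1$ (so the expected count is 5), compares how far $\operatorname{logit}(X/n)$ moves for $X=3$ versus $X=7$:

```python
import math

def logit(p):
    return math.log(p / (1 - p))

n, expected = 50, 5            # population proportion 0.1, expected count 5
center = logit(expected / n)
shift = {}
for dx in (-2, 2):             # equal-sized deviations in the count
    shift[dx] = logit((expected + dx) / n) - center
    print(f"X = {expected + dx}: logit moves by {shift[dx]:+.3f}")
```

The deficit of two counts moves the logit outward by about 0.55, while the surplus of two moves it inward by only about 0.38.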

Note that $E(t(Y)) \neq t(E(Y))$, so when you apply a logit transform and fit a linear regression you introduce a (typically small) bias. If you compare the fits on the original (proportion) scale, the bias is small near the middle but becomes progressively larger toward the ends. So, for example, the coefficients will tend to be pushed away from 0 on average (assuming observed proportions $p_i=0$ and $p_i=1$ are excluded, even though they can certainly occur).
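
A second-order Taylor expansion gives the size and sign of this bias on the logit scale. It ignores the conditioning on $0<X<n$, so treat it only as a guide for moderate $p$ and reasonably large $n$. Using $\operatorname{logit}''(p) = (2p-1)/\big(p(1-p)\big)^2$ and $\operatorname{Var}(\hat p) = p(1-p)/n$:

$$
E\big[\operatorname{logit}(\hat p)\big] \approx \operatorname{logit}(p) + \tfrac12\,\operatorname{logit}''(p)\,\operatorname{Var}(\hat p)
= \operatorname{logit}(p) + \frac{2p-1}{2n\,p(1-p)}.
$$

The correction is zero at $p=1/2$, positive for $p>1/2$ and negative for $p<1/2$, so it pushes values away from 0 on the logit scale, and it grows as $p$ moves toward either end.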

If your proportions all lie in the middle half (roughly 0.25 to 0.75), you would typically see only very small effects, but as the proportions get more extreme, these effects all become more noticeable.
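
To see how quickly the bias grows, the following Python sketch computes $E[\operatorname{logit}(X/n)\mid 0<X<n]-\operatorname{logit}(p)$ exactly by summing over the binomial distribution, conditioning on $0<X<n$ in line with the restriction on $p_i=0$ and $p_i=1$. It prints that next to the second-order Taylor approximation $(2p-1)/(2np(1-p))$. The choice $n=50$ and the grid of $p$ values are arbitrary; by symmetry, the values for $p<1/2$ are the negatives of those shown.

```python
import math

def logit(p):
    return math.log(p / (1 - p))

def logit_bias(p, n):
    """Exact E[logit(X/n) | 0 < X < n] - logit(p) for X ~ Bin(n, p)."""
    pmf = [math.comb(n, x) * p**x * (1 - p)**(n - x) for x in range(n + 1)]
    kept = sum(pmf[1:n])  # probability that 0 < X < n
    mean = sum(pmf[x] * logit(x / n) for x in range(1, n)) / kept
    return mean - logit(p)

n = 50
for p in (0.5, 0.6, 0.7, 0.8, 0.9):
    approx = (2 * p - 1) / (2 * n * p * (1 - p))  # second-order Taylor term
    print(f"p={p}: exact {logit_bias(p, n):+.4f}, approximation {approx:+.4f}")
```

Very close to 0 or 1, the conditioning on $0<X<n$ can become important, and the Taylor approximation is then no longer a good guide.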

\* In particular, compare a plot of $4\sin^{-1}(\sqrt{p})-\pi$ with $\operatorname{logit}(p)$.
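
A quick numeric version of that comparison in Python, rather than a plot: $4\sin^{-1}(\sqrt{p})-\pi$ is the arcsine square root shifted and scaled so that, like the logit, it is 0 at $p=1/2$ with slope 4 there. Away from $p=1/2$ the logit is always the larger of the two in absolute value, and the scaled arcsine square root stays bounded by $\pm\pi$ while the logit does not.

```python
import math

def logit(p):
    return math.log(p / (1 - p))

def scaled_asin_sqrt(p):
    """4*asin(sqrt(p)) - pi: zero at p = 0.5 with slope 4 there, like the logit."""
    return 4 * math.asin(math.sqrt(p)) - math.pi

for p in (0.5, 0.6, 0.75, 0.9, 0.99, 0.999):
    print(f"p={p}: logit {logit(p):+.3f}, 4*asin(sqrt(p))-pi {scaled_asin_sqrt(p):+.3f}")
```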