I have long been struggling with choosing a valid threshold t for turning the predicted probabilities of my binary logistic model into classes, and with evaluating how well the model then performs (see code below). I believed setting a threshold for binary prediction was more subjective than statistical. After reading both Stephan Kolassa's and Tamas Ferenci's thoughts here and here, I am convinced that setting a threshold is a decision-theoretic question rather than a statistical one. However, I have no prior knowledge in that field.
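A minimal version of that decision-theoretic argument rests on three assumptions: a fixed cost C_FP for a false alarm and C_FN for a missed fire, zero cost for correct predictions, and a predicted probability p that is calibrated. Predicting "fire" then has expected cost (1 - p) C_FP and predicting "no fire" has expected cost p C_FN, so one predicts fire whenever the first is smaller:

$$
(1-p)\,C_{FP} < p\,C_{FN}
\quad\Longleftrightarrow\quad
p > t^{*} = \frac{C_{FP}}{C_{FP} + C_{FN}}
$$

Under these assumptions the threshold is determined by the cost ratio; the data enter only through p.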

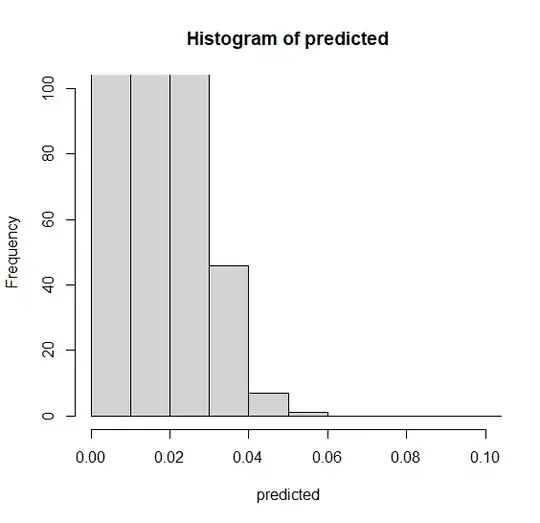

So, assume I have to predict whether a fire occurs or not. I first fit my ElasticNet model on my training data and then evaluate it on my test data. At some point I have to set a threshold that turns the predicted probabilities into either 0 (no fire) or 1 (fire) (note that the data are highly imbalanced, hence the low threshold; see code). Misclassifying 0s as 1s and vice versa is not the end of the world in my case, unlike predicting cancer as no-cancer in medicine, but it still makes a substantial difference whether I choose t = 0.0012 or t = 0.0007.
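Read in reverse, each threshold implies a cost ratio. Assuming the expected-cost rule t = C_FP / (C_FP + C_FN) with calibrated probabilities, a threshold t corresponds to C_FN / C_FP = (1 - t) / t. This short sketch evaluates that ratio for the three thresholds in the question:

```r
# Thresholds considered in the question
t <- c(0.001, 0.0012, 0.0007)

# Assumed rule: t = C_FP / (C_FP + C_FN)  =>  C_FN / C_FP = (1 - t) / t
implied_ratio <- (1 - t) / t
round(implied_ratio)  # 999  832 1428
```

Under that rule, choosing between t = 0.0012 and t = 0.0007 amounts to deciding whether a missed fire is roughly 830 or roughly 1430 times as costly as a false alarm.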

Note about the data:

The data consist of 25 variables and 620 000 observations, all continuous except the dependent variable, which is a factor. One could use the iris dataset, restricted to two outcomes of the dependent variable, to simulate my dataset.
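A minimal sketch of such a stand-in, using only base R. Dropping setosa leaves a balanced 50/50 problem, so the sketch additionally keeps only 5 virginica rows to mimic a rare outcome; the names `iris2`, `x_imb` and `y_imb` are illustrative, not from the original analysis.

```r
# Two-class version of the built-in iris data: drop setosa
iris2 <- droplevels(subset(iris, Species != "setosa"))
x <- as.matrix(iris2[, 1:4])                   # 4 continuous predictors
y <- as.integer(iris2$Species == "virginica")  # 1 = virginica, 0 = versicolor

# iris2 is balanced; keep only 5 positives to make the outcome rare
set.seed(1)
keep  <- c(which(y == 0), sample(which(y == 1), 5))
x_imb <- x[keep, ]
y_imb <- y[keep]
table(y_imb)  # 50 zeros, 5 ones
```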

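```r
library(glmnet)  # cv.glmnet()
library(caret)   # confusionMatrix()

set.seed(123)

# i is assumed to come from an outer loop over alpha values (not shown)
# parallel = TRUE requires a registered foreach backend (e.g. doParallel)
model <- cv.glmnet(x.train, y.train, type.measure = "auc", alpha = i/10,
                   family = "binomial", parallel = TRUE)

# Predicted probabilities of class 1 on the test set
predicted <- predict(model, s = "lambda.1se", newx = x.test, type = "response")

# Cross-validated AUC at lambda.1se (model$cvm holds one value per lambda)
auc <- model$cvm[model$lambda == model$lambda.1se]

# Classification threshold
t <- 0.001
predict_binary <- ifelse(predicted > t, 1, 0)

# Fix the factor levels so the table stays 2x2 even if a class is never predicted
CM <- confusionMatrix(factor(predict_binary, levels = c(0, 1)),
                      factor(y.test, levels = c(0, 1)),
                      positive = "1")
```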
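Rather than calling `confusionMatrix()` once per threshold, the counts can be tabulated over a whole grid of thresholds in base R. In this sketch, `p` and `y` are simulated, hypothetical stand-ins for `predicted` and `y.test` (a rare outcome with about 0.05% positives), and `conf_counts()` is an illustrative helper, not part of caret or glmnet.

```r
set.seed(123)
# Hypothetical stand-ins: rare outcome, scores somewhat higher for positives
n <- 100000
y <- rbinom(n, 1, 0.0005)
p <- plogis(-8 + 2 * y + rnorm(n))

# Confusion counts for one threshold (class 1 if p > thr, as in the question)
conf_counts <- function(p, y, thr) {
  pred <- as.integer(p > thr)
  c(thr = thr,
    TN = sum(pred == 0 & y == 0), FN = sum(pred == 0 & y == 1),
    FP = sum(pred == 1 & y == 0), TP = sum(pred == 1 & y == 1))
}

# One row per threshold, in increasing order
grid <- c(0.0007, 0.001, 0.0012)
counts <- t(sapply(grid, function(thr) conf_counts(p, y, thr)))
counts
```

Raising the threshold can only move observations from "predicted 1" to "predicted 0", so TP and FP never increase along the rows.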

Coefficients:

```
(Intercept) -1.212497e+01
V1          -4.090224e-03
V2          -6.449927e-04
V3          -2.369445e-04
V4           9.629067e-03
V5           4.987248e-02
V6           .
V7          -1.254231e-02
V8           .
V9           5.330301e-06
V10          .
V11          7.795364e-03
V12          .
```
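As a sanity check on the scale of the predicted probabilities, the fitted probability is the inverse logit of the linear predictor. This sketch copies the nonzero coefficients above (a "." means the coefficient was shrunk to zero); `prob_from_x()` is a hypothetical helper, and the meaning of V1 to V12 is unknown here. With every predictor at zero only the intercept remains, giving a probability of about 5.4e-06, which is at least consistent with the very small thresholds that turn out to be useful.

```r
# Nonzero coefficients copied from the output above
b0 <- -1.212497e+01
b  <- c(V1 = -4.090224e-03, V2 = -6.449927e-04, V3 = -2.369445e-04,
        V4 = 9.629067e-03,  V5 = 4.987248e-02,  V7 = -1.254231e-02,
        V9 = 5.330301e-06,  V11 = 7.795364e-03)

# Hypothetical helper: probability for a named predictor vector x
prob_from_x <- function(x) plogis(b0 + sum(x[names(b)] * b))

# All predictors at zero: only the intercept contributes
p0 <- plogis(b0)
p0  # about 5.4e-06
```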

Depending on the threshold t, I get the following confusion matrices on the test set.

| t | TN (pred 0, ref 0) | FN (pred 0, ref 1) | FP (pred 1, ref 0) | TP (pred 1, ref 1) |
|---|---|---|---|---|
| 0.001 | 107019 | 15 | 17039 | 32 |
| 0.0012 | 109857 | 17 | 14201 | 30 |
| 0.0007 | 99836 | 11 | 24222 | 36 |
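The trade-off becomes explicit when the counts are turned into rates. Lowering t from 0.0012 to 0.0007 catches 6 more of the 47 fires at the price of about 10,000 more false alarms. This sketch computes sensitivity, specificity and precision directly from the three confusion matrices:

```r
# Counts from the three confusion matrices (one row per threshold)
cm <- data.frame(
  t  = c(0.001, 0.0012, 0.0007),
  TN = c(107019, 109857, 99836),
  FN = c(15, 17, 11),
  FP = c(17039, 14201, 24222),
  TP = c(32, 30, 36)
)

cm$sensitivity <- cm$TP / (cm$TP + cm$FN)  # share of fires caught
cm$specificity <- cm$TN / (cm$TN + cm$FP)  # share of non-fires correctly cleared
cm$precision   <- cm$TP / (cm$TP + cm$FP)  # share of alarms that are real fires
cm$alarms      <- cm$TP + cm$FP            # observations flagged as fire

cm[order(cm$t), ]
```

Precision stays below 0.3% at all three thresholds, so the choice is mainly about how many false alarms one is willing to pay for each additional fire detected.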

- How can one justify choosing one threshold value over another?

- How can one maximize true positives while keeping false positives low?

- Is there a way in R to choose a 'best' threshold for binary outcomes?
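One way to operationalize these questions in base R is to evaluate every threshold on a grid and pick either the one with the lowest total misclassification cost or the one with the highest Youden's J (sensitivity + specificity - 1). In this sketch the data are simulated stand-ins and the cost ratio of 500 is a hypothetical placeholder to be replaced by a real judgment; Youden's J implicitly weights sensitivity and specificity equally, which may not reflect the actual cost of fires. Packages such as pROC offer similar selection via `coords(..., x = "best")`.

```r
set.seed(42)
# Hypothetical stand-ins for test-set labels and predicted probabilities
n <- 100000
y <- rbinom(n, 1, 0.0005)
p <- plogis(-8 + 2 * y + rnorm(n))

# Hypothetical costs: a missed fire assumed 500 times as costly as a false alarm
cost_fp <- 1
cost_fn <- 500

thresholds <- seq(1e-4, 5e-3, by = 1e-5)

# For each threshold: total misclassification cost and Youden's J
res <- t(sapply(thresholds, function(thr) {
  pred <- p > thr
  tp <- sum(pred & y == 1);    fp <- sum(pred & y == 0)
  fn <- sum((!pred) & y == 1); tn <- sum((!pred) & y == 0)
  c(thr = thr,
    cost = cost_fp * fp + cost_fn * fn,
    youden = tp / (tp + fn) + tn / (tn + fp) - 1)
}))

best_cost   <- res[which.min(res[, "cost"]), "thr"]
best_youden <- res[which.max(res[, "youden"]), "thr"]

# bayes_rule = C_FP / (C_FP + C_FN); optimal only if p is calibrated
c(min_cost = best_cost, max_youden = best_youden,
  bayes_rule = cost_fp / (cost_fp + cost_fn))
```

The cost-based choice answers "which threshold" directly once the costs are stated, whereas Youden's J gives a cost-free default that can differ substantially on imbalanced data.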