It is shown in answer here and at other places that difference of 2 random variables will be correlated with baseline. Hence baseline should not be a predictor for change in regression equations. It can be checked with R code below:

> N=200

> x1 <- rnorm(N, 50, 10)

> x2 <- rnorm(N, 50, 10)

> change = x2 - x1

> summary(lm(change ~ x1))

Call:

lm(formula = change ~ x1)

Residuals:

Min 1Q Median 3Q Max

-28.3658 -8.5504 -0.3778 7.9728 27.5865

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 50.78524 3.67257 13.83 <0.0000000000000002 ***

x1 -1.03594 0.07241 -14.31 <0.0000000000000002 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 10.93 on 198 degrees of freedom

Multiple R-squared: 0.5083, Adjusted R-squared: 0.5058

F-statistic: 204.7 on 1 and 198 DF, p-value: < 0.00000000000000022

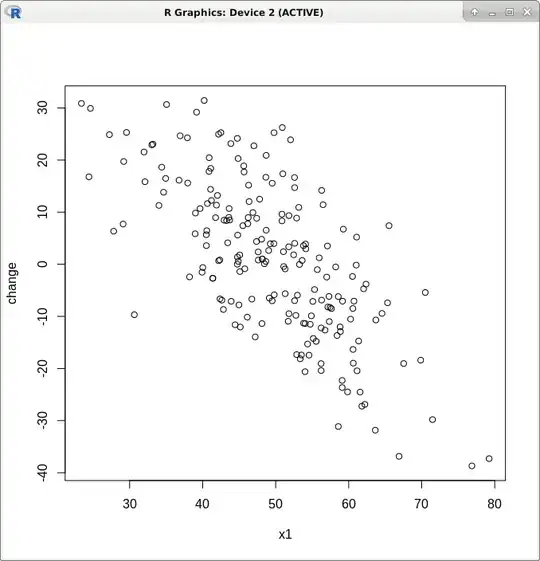

The plot between x1 (baseline) and change shows an inverse relation:

However, in many studies (especially, biomedical) baseline is kept as a covariate with change as outcome. This is because intuitively it is thought that change brought about by effective interventions may or may not be related to baseline level. Hence, they are kept in regression equation.

I have following questions in this regard:

Is there any mathematical proof showing that changes (random or those caused by effective interventions) always correlate with baseline? Does it occur only in some circumstances or is it a universal phenomenon? Is distribution of data related to this?

Also, does keeping baseline as one predictor of change affects results for other predictors which are not having any interaction with baseline? For example in regression equation:

change ~ baseline + age + gender. Will results for age and gender be invalid in this analysis?Is there any way to correct for this effect, if there is a biological reason to think that change may DIRECTLY related to baseline (quite common in biological systems)?

Thanks for your insight.

Edit: I probably should have labelled x1 and x2 as y1 and y2 since were discussing response.

Some links on this subject:

Difference between Repeated measures ANOVA, ANCOVA and Linear mixed effects model

What are the worst (commonly adopted) ideas/principles in statistics?

What are the worst (commonly adopted) ideas/principles in statistics?