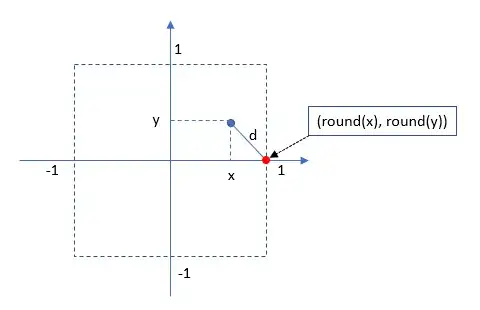

I would like to know how to model errors introduced by rounding. For example:

Suppose that

- $n$ pairs $(x, y)$ are such that $x$ and $y$ are drawn independently from a uniform distribution on $[-1, 1)$, and

- $d$ is the Euclidean distance between $(x, y)$ and the corresponding rounded pair $(\mathrm{round}(x), \mathrm{round}(y))$.
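
Written out, and assuming `round` rounds to the nearest integer, the distance for a single pair is:

$$
d = \sqrt{(x - \mathrm{round}(x))^2 + (y - \mathrm{round}(y))^2}
$$

Each component error $x - \mathrm{round}(x)$ lies in $[-1/2, 1/2]$, so $0 \le d \le \sqrt{2}/2$ in two dimensions.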

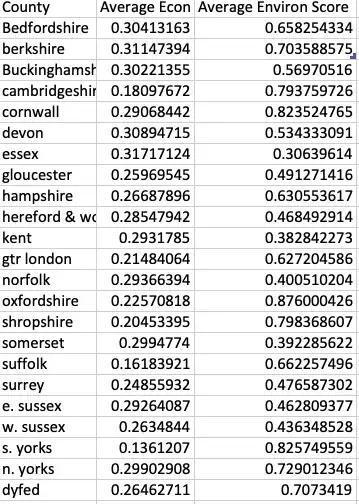

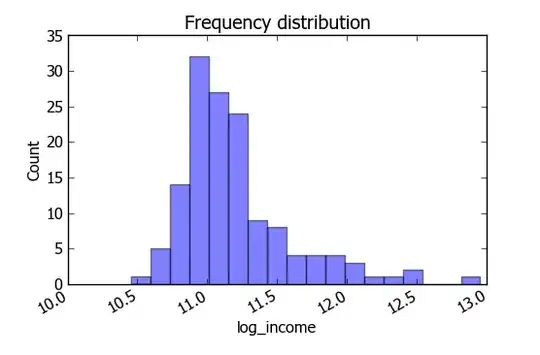

By histogramming the list of $d$'s results in:

As we can see this distribution is skewed. In my experiments, I checked out the shape of $d$ for 3, 4, 5 variables (see the plots below). I realized that the behavior is always the same:

My questions are:

- Why is this distribution skewed?

- Which distribution should be suitable to model $d$? Is Log-normal the best option?

I understand that the Central Limit Theorem applies to sums of random variables, and the Euclidean distance is not a plain sum. So what should we use to model $d$?

For clarity, my R script is:

```r
size <- 1000000

# Independent Uniform(-1, 1) draws, one vector per coordinate
x <- runif(size, -1, 1)
y <- runif(size, -1, 1)
z <- runif(size, -1, 1)
w <- runif(size, -1, 1)
t <- runif(size, -1, 1)

# Each coordinate rounded to the nearest integer
x.round <- round(x)
y.round <- round(y)
z.round <- round(z)
w.round <- round(w)
t.round <- round(t)

# Euclidean distance between each point and its rounded version, for 1 to 5 coordinates
d.x <- abs(x - x.round)
d.x.y <- sqrt((x - x.round)^2 + (y - y.round)^2)
d.x.y.z <- sqrt((x - x.round)^2 + (y - y.round)^2 + (z - z.round)^2)
d.x.y.z.w <- sqrt((x - x.round)^2 + (y - y.round)^2 + (z - z.round)^2 + (w - w.round)^2)
d.x.y.z.w.t <- sqrt((x - x.round)^2 + (y - y.round)^2 + (z - z.round)^2 + (w - w.round)^2 + (t - t.round)^2)

# One histogram per dimension on a 3 x 2 grid
par(mfrow = c(3, 2))
hist(d.x)
hist(d.x.y)
hist(d.x.y.z)
hist(d.x.y.z.w)
hist(d.x.y.z.w.t)
```
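
The script repeats the same computation once per dimension. A more compact way to run the same experiment for any number of variables might look like the sketch below. The helper name `rounding_distance`, the seed, and the smaller sample size are my own assumptions for illustration and are not part of the script above.

```r
# Hypothetical helper (an illustration, not part of the original script):
# distance between `size` random points in `k` dimensions and their rounded versions.
rounding_distance <- function(size, k) {
  # size x k matrix of independent Uniform(-1, 1) draws
  u <- matrix(runif(size * k, -1, 1), nrow = size, ncol = k)
  # Euclidean norm of each row of rounding errors
  sqrt(rowSums((u - round(u))^2))
}

set.seed(1)  # arbitrary seed, only so the plots are reproducible
par(mfrow = c(3, 2))
for (k in 1:5) {
  d <- rounding_distance(100000, k)  # smaller sample than above, for speed
  hist(d, main = paste("k =", k), xlab = "d")
}
```

Since each coordinate error is at most 1/2 in absolute value, every value returned for `k` dimensions should fall between 0 and `sqrt(k) / 2`, which gives a quick sanity check on the output.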