I'm trying to use `glm()` to fit a logistic regression to a dataset randomly generated from a known equation, expecting the fit to recover that equation. For some reason, the result is way off.

Here's the code:

```r
rm( list = ls() )
set.seed( 8675309 )

# True probability curve: p = exp( -3.428013 + 0.053240 * x )
x <- 10:50
y <- exp( -3.428013 + 0.053240 * x )
plot( x, y, type = 'l' )

# Simulate n_per_x Bernoulli draws at each value of x
n_per_x <- 10000
p <- rep( y, n_per_x )
x_new <- rep( x, n_per_x )
y_new <- ifelse( runif( length( p ) ) <= p, 1, 0 )
my_data <- data.frame( X = x_new, Y = y_new )

# Observed fraction of 1s at each x (red dots)
for ( i in x ) {
  yp <- sum( my_data$Y[ my_data$X == i ] )
  points( i, yp / n_per_x, pch = 16, col = 'red' )
}

# Fit the logistic regression and draw exp() of its predictions as a red line
my_model <- glm( Y ~ X, family = 'binomial', data = my_data )
print( summary( my_model ) )
y2 <- predict( my_model, data.frame( X = x ) )
lines( x, exp( y2 ), col = 'red' )
```

Here is the result:

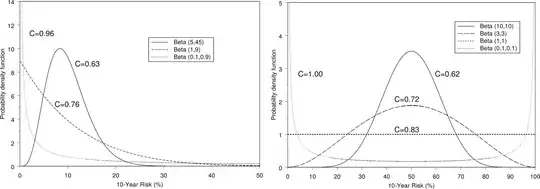

The plot shows the original equation as a black line, the fraction of samples equal to 1 at each value of X as red dots, and the logistic fit to the random sample as a red line.
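As a cross-check on the red dots, the per-X fractions can also be computed without the loop. This is a minimal sketch, assuming the same simulation setup as the code above (regenerated here so the snippet runs on its own); `frac_ones` is a hypothetical name introduced for illustration:

```r
set.seed( 8675309 )
x <- 10:50
y <- exp( -3.428013 + 0.053240 * x )   # true probability at each x
n_per_x <- 10000

# Same simulation scheme as in the question
x_new <- rep( x, n_per_x )
y_new <- ifelse( runif( length( x_new ) ) <= rep( y, n_per_x ), 1, 0 )

# Fraction of 1s at each x: tapply() groups y_new by x_new and takes the mean
frac_ones <- tapply( y_new, x_new, mean )

# Largest gap between the observed fractions and the true curve
print( max( abs( frac_ones - y ) ) )
```

Because the seed and the sequence of random draws are the same as in the question, this should reproduce the red dots exactly; `tapply()` returns the groups in increasing order of `x`, so `frac_ones` lines up with `y`.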

Why is the fit so poor? I don't know enough to tell whether I've made a simple mistake in R or fundamentally misunderstood logistic regression.

What confuses me is that the fitted curve barely changes once n (the number of samples per value of x, `n_per_x`) is anything more than very small. If the discrepancy were just random noise, I would expect the fit to converge to the true curve as n grows, but it doesn't. The standard errors shrink as the sample size increases (as expected), but the coefficients barely move (not expected), so the true values quickly end up far outside the confidence intervals.
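The convergence check described above can be scripted. This is a minimal sketch, assuming the same true equation and simulation scheme as the question; `fit_for_n` is a hypothetical helper, and the list of `n_per_x` values is an arbitrary choice:

```r
set.seed( 8675309 )
x <- 10:50
p_true <- exp( -3.428013 + 0.053240 * x )   # true probability at each x

# Simulate n_per_x draws per x, fit the same logistic model as in the question,
# and return each coefficient estimate with its standard error.
fit_for_n <- function( n_per_x ) {
  X <- rep( x, n_per_x )
  Y <- ifelse( runif( length( X ) ) <= rep( p_true, n_per_x ), 1, 0 )
  fit <- glm( Y ~ X, family = 'binomial' )
  est <- coef( summary( fit ) )   # matrix: rows = terms, cols = Estimate, Std. Error, ...
  data.frame( n_per_x   = n_per_x,
              intercept = est[ "(Intercept)", "Estimate" ],
              int_se    = est[ "(Intercept)", "Std. Error" ],
              slope     = est[ "X", "Estimate" ],
              slope_se  = est[ "X", "Std. Error" ] )
}

results <- do.call( rbind, lapply( c( 10, 100, 1000, 10000 ), fit_for_n ) )
print( results )
```

If the standard errors in `results` shrink roughly like 1/sqrt(`n_per_x`) while the estimates settle on values other than -3.428013 and 0.053240, that matches the behavior described here.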

Thanks for any insight into where I've gone wrong!