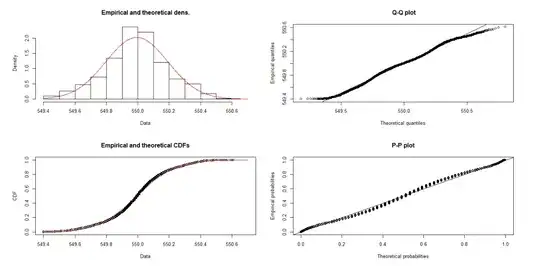

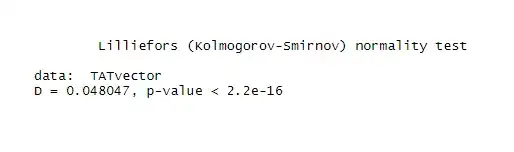

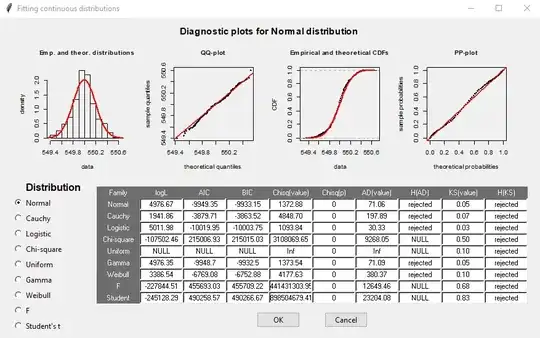

With 26K data points, almost any test against a given distribution will reject it. With that much data, the test can detect tiny differences and report that the data do not come from that distribution.
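To see this sample-size effect, here is a minimal sketch, assuming Python with NumPy and SciPy. The data differ from a standard normal only by a mean shift of 0.1 standard deviations (an illustrative choice, not taken from the question), and the same Kolmogorov-Smirnov test is run at two sample sizes.

```python
import numpy as np
from scipy import stats


def ks_pvalue(n: int, shift: float, rng: np.random.Generator) -> float:
    """P-value of a KS test of N(shift, 1) samples against N(0, 1)."""
    x = rng.normal(loc=shift, scale=1.0, size=n)
    return stats.kstest(x, "norm").pvalue


rng = np.random.default_rng(0)
shift = 0.1  # tiny departure from normality (illustrative assumption)

p_small = ks_pvalue(100, shift, rng)
p_large = ks_pvalue(26_000, shift, rng)
print(f"n =    100: p = {p_small:.3g}")
print(f"n = 26,000: p = {p_large:.3g}")
```

With 100 points the shift usually goes unnoticed, while at 26,000 points the KS statistic is about 0.04 and the p-value is far below any usual significance level, even though the two distributions are practically indistinguishable.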

I would strongly recommend reading these posts:

Are large data sets inappropriate for hypothesis testing?

Is normality testing 'essentially useless'?

It is very common for real data not to follow any textbook distribution exactly, but we can still do a lot with them.

For example, we can fit the data with a Gaussian mixture model.
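A minimal sketch of that idea, assuming Python with NumPy and scikit-learn's `GaussianMixture`. The two-component data below are synthetic stand-ins, not the asker's data, and choosing the number of components by BIC is one common option among several.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(42)

# Hypothetical data: 26K points drawn from two Gaussians, standing in for
# data that match no single textbook distribution.
x = np.concatenate([
    rng.normal(loc=-2.0, scale=1.0, size=13_000),
    rng.normal(loc=3.0, scale=1.5, size=13_000),
])
X = x.reshape(-1, 1)  # scikit-learn expects shape (n_samples, n_features)

# Fit mixtures with 1..5 components and keep the one with the lowest BIC.
models = [GaussianMixture(n_components=k, random_state=0).fit(X) for k in range(1, 6)]
best = min(models, key=lambda m: m.bic(X))

print("components:", best.n_components)
print("weights:", best.weights_.round(3))
print("means:", best.means_.ravel().round(3))
print("std devs:", np.sqrt(best.covariances_.ravel()).round(3))
```

The fitted weights, means and standard deviations describe the shape of the data directly, and `best.score_samples(X)` returns the log-density of the fitted mixture at each point if you need the distribution itself.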

In addition, your data look almost too good (too close to a normal distribution), which suggests they may come from a simulation rather than from the real world. I would suggest the following: draw 26K samples from a normal distribution, then run the hypothesis test and make all the plots to see the results. This is probably what was happening in your case.
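A minimal sketch of that experiment, assuming Python with NumPy and SciPy. The seed, the N(0, 1) parameters and the choice of the KS and D'Agostino-Pearson tests are illustrative assumptions; the Q-Q plot is summarized numerically by `scipy.stats.probplot`, which can also draw it if you pass a matplotlib `Axes` as `plot=`.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
n = 26_000

# Simulated data that really are normal (parameters chosen arbitrarily).
x = rng.normal(loc=0.0, scale=1.0, size=n)

# Two common normality tests; the KS test uses the known N(0, 1) parameters.
ks = stats.kstest(x, "norm")
dp = stats.normaltest(x)  # D'Agostino-Pearson K^2 test

print(f"KS test:            p = {ks.pvalue:.3f}")
print(f"D'Agostino-Pearson: p = {dp.pvalue:.3f}")

# Numeric stand-in for a normal Q-Q plot: probplot fits a line to the ordered
# data against theoretical normal quantiles; r close to 1 means a straight plot.
(osm, osr), (slope, intercept, r) = stats.probplot(x, dist="norm")
print(f"Q-Q line: slope = {slope:.3f}, intercept = {intercept:.3f}, r = {r:.5f}")
```

Because these simulated data truly are normal, each p-value is uniformly distributed and falls below 0.05 only about 5% of the time. Comparing this output with the results on your own 26K points shows whether they behave like a genuine normal sample.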