Issue:

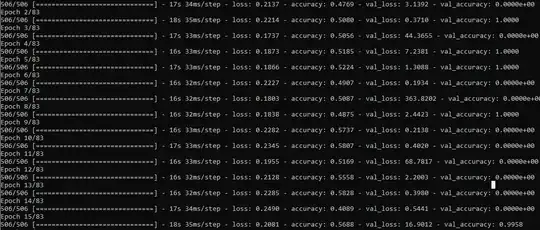

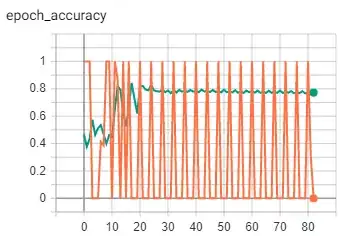

My validation accuracy jumps between 0% and 100%.

This seems implausible to me, because the model predicts only two classes and I validate on the full valSet (41802 records) every epoch. I doubt that a model that has only just started learning reaches 100% accuracy in one epoch and 0% in the next.

Code samples:

I am validating on the full valSet every epoch.

This is achieved by setting `validation_steps` to the number of steps needed to cover the full validation file and by repeating the dataset with `dataset = dataset.repeat(numEpochsTrainSet)`.
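For reference, the number of batches that covers every record exactly once is the record count divided by the batch size, rounded up. A minimal plain-Python sketch (the helper name `steps_to_cover` and the batch size of 32 are hypothetical); it also shows that `int(a / b)`, as used in `modelDataset`, rounds down and skips a final partial batch:

```python
def steps_to_cover(num_records: int, batch_size: int) -> int:
    """Number of batches needed so every record is seen once (integer ceiling division)."""
    if num_records < 0 or batch_size <= 0:
        raise ValueError("num_records must be >= 0 and batch_size > 0")
    return (num_records + batch_size - 1) // batch_size

# 41802 validation records, as in this question
print(steps_to_cover(41802, 1))   # 41802
print(steps_to_cover(41802, 32))  # 1307 (hypothetical batch size of 32)
print(int(41802 / 32))            # 1306: truncation leaves the last 10 records unvalidated
```

With a validation batch size of 1, as passed to `modelDataset` for the validation set, both forms give the same result, so truncation only matters for larger batch sizes.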

All my datasets are produced with the following code:

```python
import os

import tensorflow as tf

# count_lines and parse_csv are my own helpers (not shown here)


def modelDataset(sourcepath, badgesize, repeat=False, repeatCount=15):
    # get all files
    files = os.listdir(sourcepath)
    pathfiles = [sourcepath + x for x in files]

    # get metrics
    rows_per_file = count_lines(sourcepath + "0.data")
    number_of_files = len(files)
    total_rows = rows_per_file * number_of_files

    # get number of steps per epoch
    epochs = number_of_files
    if epochs == 1 and repeat:
        epochs = repeatCount
    elif epochs == 1:
        badgesize = rows_per_file

    steps_per_epoch = int(rows_per_file / badgesize)  # 2000 batches per epoch

    # model interleaved dataset
    dataset = tf.data.Dataset.from_tensor_slices(pathfiles).interleave(
        lambda x: tf.data.TextLineDataset(x).map(parse_csv, num_parallel_calls=4),
        cycle_length=4,
        block_length=counter + 1,  # `counter` is defined elsewhere (not shown)
    )

    # note: with `or`, this condition is True unless epochs == repeatCount == 1
    if epochs != 1 or epochs != repeatCount:
        dataset = dataset.shuffle(buffer_size=badgesize)

    if repeat:
        dataset = dataset.repeat(repeatCount)
        # dataset = dataset.cache().repeat(repeatCount)

    dataset = dataset.batch(badgesize)
    dataset = dataset.prefetch(2)  # prefetch two batches

    return dataset, steps_per_epoch, epochs, badgesize
```
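`Dataset.shuffle` fills a buffer of `buffer_size` elements and samples uniformly from it, refilling the freed slot from the input. Since the validation set is built with `modelDataset(valPath, 1, True, maxTrainEpochs)`, `badgesize` is 1 and the validation shuffle buffer holds a single element, so the validation stream keeps its interleaved file order. The pure-Python sketch below imitates that buffering behaviour; it is an illustration only, not TensorFlow's implementation, and `buffered_shuffle` is a hypothetical name:

```python
import random
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def buffered_shuffle(items: Iterable[T], buffer_size: int, seed: int = 0) -> Iterator[T]:
    """Imitate a fixed-size shuffle buffer: once the buffer is full, emit a
    uniformly chosen element and let the next input element take its slot."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be >= 1")
    rng = random.Random(seed)
    buffer: List[T] = []
    for item in items:
        buffer.append(item)
        if len(buffer) == buffer_size:
            # Buffer full: move a random element to the end and emit it.
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    # Input exhausted: drain whatever is left in random order.
    rng.shuffle(buffer)
    yield from buffer


records = list(range(10))
print(list(buffered_shuffle(records, buffer_size=1)))   # prints [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(list(buffered_shuffle(records, buffer_size=10)))  # some permutation of 0..9
```

With `buffer_size=1` every element is emitted as soon as it arrives, so the order is unchanged; only a buffer comparable to the dataset size mixes records from distant parts of the input.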

# generate dataset

valSet, valSteps, maxValEpochs, valBadgeSize = modelDataset(valPath, 1, True, maxTrainEpochs)

# check if dataset is modeled correctly

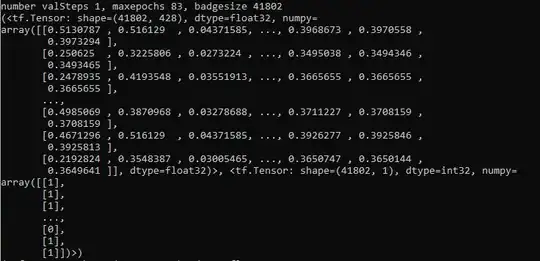

print(f"number valSteps {valSteps}, maxepochs {maxValEpochs}, badgesize {valBadgeSize}")

for elem in valSet:

print(elem)
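Printing every element of a 41802-record dataset is hard to inspect by eye. A quicker way to check whether the validation labels are all one class is to count the labels seen during one validation epoch. A minimal sketch, assuming each dataset element is a `(features, labels)` pair whose labels are integer class ids (the output structure of `parse_csv` is not shown, so this is an assumption; `count_labels` is a hypothetical helper):

```python
from collections import Counter
from typing import Any, Iterable, Sequence, Tuple


def count_labels(batches: Iterable[Tuple[Any, Sequence[int]]], max_batches: int) -> Counter:
    """Count class ids over the first `max_batches` (features, labels) batches."""
    counts: Counter = Counter()
    for step, (_features, labels) in enumerate(batches):
        if step >= max_batches:
            break  # stop after one validation epoch's worth of batches
        counts.update(int(label) for label in labels)
    return counts


# Toy stand-in for valSet: batches of size 1, each (features, [label]).
toy = [([0.1], [0]), ([0.2], [0]), ([0.3], [1]), ([0.4], [0])]
print(count_labels(toy, max_batches=4))  # Counter({0: 3, 1: 1})
```

With TensorFlow 2 in eager mode, something like `count_labels(((x, y.numpy().ravel()) for x, y in valSet), valSteps)` should give the counts for one validation pass, provided the labels are integer ids rather than one-hot vectors.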

Finally, the model is fit as follows:

```python
model.fit(trainSet,
          epochs=epochs,
          steps_per_epoch=trainSteps,
          shuffle=True,  # ignored by Keras when x is a tf.data.Dataset
          validation_data=valSet,
          validation_steps=valSteps,  # how many batches to validate on, NOT how many records
          class_weight=classDistr,
          verbose=verbose,
          callbacks=[tensorboard, reduce_lr])  # , cp_callback])
```
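`class_weight` expects a dict mapping each class index to a weight applied to that class's training loss. How `classDistr` is computed is not shown; one common heuristic (the "balanced" weighting used by scikit-learn) is `n_samples / (n_classes * count_c)`. A sketch, with `balanced_class_weights` as a hypothetical helper and toy counts:

```python
from typing import Dict, Mapping


def balanced_class_weights(counts: Mapping[int, int]) -> Dict[int, float]:
    """weight_c = n_samples / (n_classes * count_c); rarer classes get larger weights."""
    if not counts or any(n <= 0 for n in counts.values()):
        raise ValueError("every class needs at least one sample")
    n_samples = sum(counts.values())
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


weights = balanced_class_weights({0: 30, 1: 10})
print(weights[1])  # 2.0: the rarer class is weighted up
```

The resulting dict has the shape `model.fit(..., class_weight=...)` expects. Note that class weights only affect the training loss, not how `val_accuracy` is computed.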

Issue assumptions:

I would expect such validation results in one of the following scenarios:

- I am only validating on one record per epoch

- The validation Data is inconsistent with the train data

- The validation data contains labels of all the same class
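The third scenario above produces exactly this 0%/100% pattern when combined with a model that predicts the same class for every input, which can happen early in training. Accuracy is the fraction of correct predictions, so on a single-class validation set a constant predictor scores either 100% or 0%, and flipping its output between epochs flips the score. A minimal sketch with toy labels (`accuracy` is a hypothetical helper):

```python
from typing import Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of positions where the predicted class equals the label."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equally sized, non-empty sequences")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


single_class_labels = [1] * 1000                   # every validation label is class 1
print(accuracy([1] * 1000, single_class_labels))   # 1.0: constant predictor outputs class 1
print(accuracy([0] * 1000, single_class_labels))   # 0.0: same predictor flipped to class 0

mixed_labels = [0, 1] * 500                        # balanced labels
print(accuracy([1] * 1000, mixed_labels))          # 0.5: a constant predictor lands in between
```

On a validation set containing both classes, a constant predictor cannot reach 0% or 100%, so seeing only those two values points toward a single-class (or single-record) validation pass.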

I have already tried to rule out these assumptions as follows:

- I checked the source CSV file; it looks fine and has 41802 records.

- Between the 0% and 100% epochs there are some intermediate `val_accuracy` values, which argue against validating on only one record (a single record can only score 0% or 100%).

- The output of `print(valset[0])` looks fine.

- I found out that the validation batch size equals the training batch size, so I adjusted the code, but it did not make any difference.