Let's try and look at this with an example before I get into my questions.

Let's say you're trying to predict the number of Oscars a movie will win.

With quantitative data we can clean and plug in a bunch of data (e.g. box office opening, Rotten Tomatoes score, days released before the Oscars, etc.), throw that into an algorithm (e.g. linear regression from Python's scikit-learn, my preferred toolkit) and start making predictions. (Yes, I know ML is more complicated than that, but I am trying to keep this simple.)

Now let's say you have a data set of qualitative data (all numerical). That is, for each movie we have reviews from a number of judges. Each judge rated the movie from 1 to 5 in 5 different categories (we know which judge gave which movie which score). To make things more interesting, judges can also review a movie more than once. We have the dates of the reviews, so we know the order they came in.

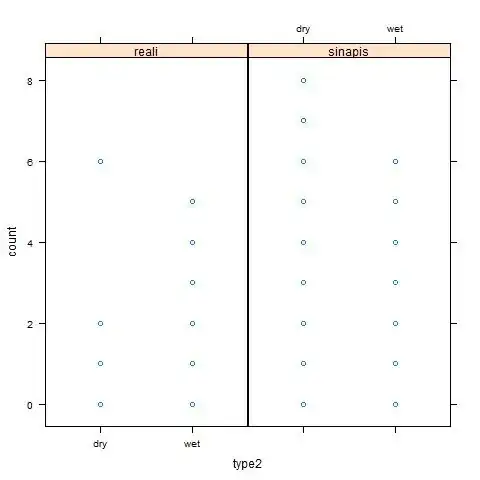

Example of a dataset

What are effective approaches to using this qualitative data for predictions (still wanting to predict the number of Oscars a movie will win)? I would probably merge this data with the quantitative data, so I imagine I want one data entry for each movie. That is why I am looking at how best to collapse all the qualitative data into a single input row.

I am fairly new to ML and prediction, so I am sure there are many techniques I don't know of that I could look into to address this problem. Solutions I have thought of are:

Averaging (taking the mean of) the scores in each category across all judges' reviews for each movie. - This would treat old and new reviews the same, treat all judges as equal, and let outliers affect the input.

Using a time-weighted average so the newest reviews are worth more. - Seems better, but judges are still all considered equal (even if they are not all equally skilled at judging). Could consider taking only the latest review from each judge.

Dropping high/low outliers. Either the judges with the highest/lowest scores across the board or just the highest/lowest in each category/column.
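
To make these options concrete, here is a rough pandas sketch of how I imagine each one collapsing the reviews into one row per movie. The column names (`movie`, `judge`, `date`, `acting`, `plot`), the toy values, and the 30-day half-life are all made up for illustration; they are not my real data. The trimmed mean here is the per-category variant (drop the single highest and lowest score), not the "drop whole judges" variant.

```python
import pandas as pd

# Hypothetical toy reviews: one row per (movie, judge, date). Names are assumptions.
reviews = pd.DataFrame({
    "movie": ["A", "A", "A", "A", "B", "B"],
    "judge": ["j1", "j1", "j2", "j3", "j1", "j2"],
    "date": pd.to_datetime([
        "2020-01-01", "2020-03-01", "2020-01-31",
        "2020-01-31", "2020-01-05", "2020-01-10",
    ]),
    "acting": [2, 4, 3, 5, 1, 3],
    "plot": [3, 3, 4, 5, 2, 2],
})
categories = ["acting", "plot"]

# 1) Plain mean of every review, per movie and category.
plain_mean = reviews.groupby("movie")[categories].mean()

# 2) Keep only each judge's latest review of a movie, then average.
latest = reviews.sort_values("date").groupby(["movie", "judge"]).tail(1)
latest_mean = latest.groupby("movie")[categories].mean()

# 3) Time-weighted mean: a review's weight halves every HALF_LIFE_DAYS,
#    measured back from the newest review of the same movie (an assumption).
HALF_LIFE_DAYS = 30
newest = reviews.groupby("movie")["date"].transform("max")
age_days = (newest - reviews["date"]).dt.days
weights = 0.5 ** (age_days / HALF_LIFE_DAYS)
weighted_sum = reviews[categories].mul(weights, axis=0).groupby(reviews["movie"]).sum()
weight_total = weights.groupby(reviews["movie"]).sum()
time_weighted_mean = weighted_sum.div(weight_total, axis=0)

# 4) Trimmed mean: drop the single highest and lowest score in each category
#    (falls back to the plain mean when a movie has fewer than 3 reviews).
def trimmed_mean(scores):
    if len(scores) < 3:
        return scores.mean()
    return scores.sort_values().iloc[1:-1].mean()

trimmed = reviews.groupby("movie")[categories].agg(trimmed_mean)

# One row per movie, ready to merge with the quantitative features.
features = pd.concat(
    {"mean": plain_mean, "latest": latest_mean,
     "time_weighted": time_weighted_mean, "trimmed": trimmed},
    axis=1,
)
features.columns = [f"{cat}_{how}" for how, cat in features.columns]
print(features)
```

Nothing stops me from feeding several of these columns to the model at once and letting it decide which aggregation is most predictive, rather than picking one up front.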

Are there other or better approaches I am not thinking of?

- What if we know some judges are better or worse at reviewing movies (but we don't know who)? How can we account for this judge quality? Dropping the highest and lowest reviews? Is there a way to weight the individual reviewers' inputs?

Looking for ideas and approaches I can learn more about.