We can compare $2\cdot(\text{difference in log-likelihood})$ to a chi-square distribution, but why can't we find or invent a new distribution for a test statistic that is some function of the quasilikelihood?

-

What is the distribution for comparing quasilikelihoods? – Jul 23 '19 at 18:42

-

The quip answer is that if such a distribution existed, it wouldn't be *quasi*-likelihood any more, it would be likelihood. See also https://stats.stackexchange.com/questions/409437/how-does-one-compare-two-nested-quasibinomial-glms/414474#414474 – kjetil b halvorsen Jul 23 '19 at 22:20

1 Answer

Not sure what you mean. Quasilikelihood fits don't assume any particular underlying distribution; they simply assume, by analogy with generalized linear models, that using an iteratively reweighted least squares fitting procedure with a reasonable link and mean-variance function could give a good description of data.
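For concreteness, here is a sketch of the standard definition, following Wedderburn's (1974) construction. The symbols $\mu$, $V$, and $\phi$ are notation introduced here, not taken from the question. For a single observation $y$ with mean $\mu$ and assumed variance $\phi V(\mu)$, the quasi-likelihood is

$$Q(\mu; y) = \int_y^{\mu} \frac{y - t}{\phi\, V(t)}\, dt ,$$

so only the mean-variance relationship is specified, not a full distribution. With $V(\mu) = \mu$ this gives $Q = (y \log \mu - \mu)/\phi$ up to terms that do not involve $\mu$, which has the same form as a Poisson log-likelihood scaled by $1/\phi$.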

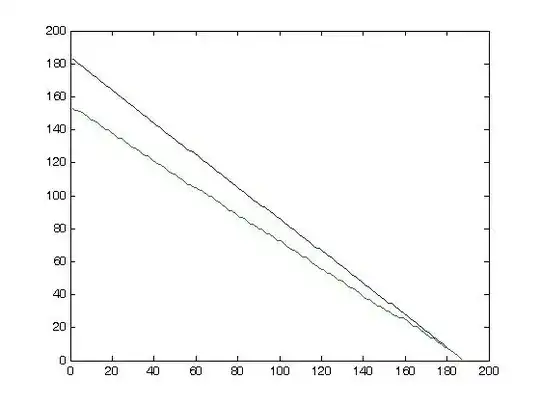

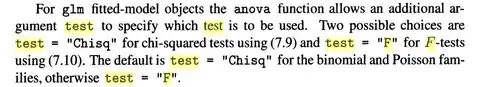

Venables and Ripley Modern Applied Statistics in S suggest that it might be OK to use an $F$ test to compare quasi-likelihood models:

(note that they don't explicitly say "this is OK in a quasi-likelihood context"). They go on to describe how this is implemented in R:

In fact, there's even an example of this procedure in example("quasipoisson") (which directs to the ?family page):

    d.AD <- data.frame(treatment = gl(3, 3),
                       outcome = gl(3, 1, 9),
                       counts = c(18, 17, 15, 20, 10, 20, 25, 13, 12))
    glm.D93 <- glm(counts ~ outcome + treatment, d.AD, family = poisson())
    ## Quasipoisson: compare with above / example(glm) :
    glm.qD93 <- glm(counts ~ outcome + treatment, d.AD, family = quasipoisson())
    glm.qD93
    anova(glm.qD93, test = "F")
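To make the arithmetic behind `test = "F"` explicit, the following is a minimal sketch that recomputes the statistic by hand for a pair of nested quasi-Poisson fits on the same data. The object names `full`, `reduced`, `phi_hat`, `q`, `F_stat`, and `p_val` are made up for this illustration, and the choice to drop `outcome` is arbitrary. It assumes, as I understand `anova.glm` to do, that the dispersion is taken from the larger model.

```r
# Same data as in the ?family example
d.AD <- data.frame(treatment = gl(3, 3),
                   outcome   = gl(3, 1, 9),
                   counts    = c(18, 17, 15, 20, 10, 20, 25, 13, 12))

# Two nested quasi-Poisson fits (hypothetical comparison: drop `outcome`)
full    <- glm(counts ~ outcome + treatment, data = d.AD,
               family = quasipoisson())
reduced <- glm(counts ~ treatment, data = d.AD,
               family = quasipoisson())

# Dispersion estimate from the larger model (Pearson chi-square / residual df)
phi_hat <- summary(full)$dispersion

# Number of parameters dropped in the reduced model
q <- df.residual(reduced) - df.residual(full)

# Scaled change in deviance per degree of freedom
F_stat <- ((deviance(reduced) - deviance(full)) / q) / phi_hat

# Reference distribution: F with (q, residual df of the larger model)
p_val <- pf(F_stat, q, df.residual(full), lower.tail = FALSE)

# Built-in comparison, for reference
tab <- anova(reduced, full, test = "F")
print(tab)
```

The hand-computed `F_stat` and `p_val` should agree with the second row of the `anova()` table, which makes it clear that the procedure is the ordinary ANOVA recipe with deviances standing in for sums of squares.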

I have always assumed that R's allowance of this test means that one or more members of R-core, who are generally pretty fussy statistically, thought it was OK ("there's no warning sign telling me not to do this, so I guess it must be OK ...")

I can't find much in the way of early references (I don't see anything about model comparison in a quick browse of McCullagh and Nelder's quasilikelihood chapter [9], nor in a quick glance at Wedderburn's 1974 Biometrika paper), nor do V&R say what they mean by "with some caution". This sort of procedure makes properly educated statisticians nervous because (I think) it's based on much more approximate reasoning, with less formal proof (and maybe stronger assumptions), than classic approaches like the likelihood ratio test.
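One informal way to probe how approximate the $F$ test is in a given setting is to simulate under the null and look at the rejection rate. The sketch below is only an illustration under assumptions I've made up (negative binomial counts with mean 10 and `size = 2`, two groups of 20, 500 replicates); it is not a reconstruction of anyone's published simulation study.

```r
set.seed(1)                 # arbitrary seed, for reproducibility only
n_sim <- 500                # number of simulated data sets (assumed)
n     <- 40                 # observations per data set (assumed)
x     <- gl(2, n / 2)       # two-group factor with no true effect

p_vals <- replicate(n_sim, {
  # Overdispersed counts with the same mean in both groups (null is true)
  y    <- rnbinom(n, mu = 10, size = 2)
  fit0 <- glm(y ~ 1, family = quasipoisson())
  fit1 <- glm(y ~ x, family = quasipoisson())
  # p-value of the quasi-likelihood F test for the group effect
  anova(fit0, fit1, test = "F")[2, "Pr(>F)"]
})

# Empirical rejection rate at the nominal 5% level
rejection_rate <- mean(p_vals < 0.05)
print(rejection_rate)
```

A rejection rate near 0.05 would suggest the approximation is adequate for that particular configuration. It says nothing about other sample sizes, means, or variance structures.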

Tjur (1998) discusses the issues and justifies the $F$ test on the basis of analogy to other simulations, but certainly doesn't try to state any rigorous results ...

Nevertheless, also in situations where [normality of the observations] is questionable, common sense suggests that it is better to perform this correction for randomness of $\hat \lambda/\lambda$ [i.e., uncertainty in the dispersion estimate] — implicitly making the (more or less incorrect) assumption that the distribution of $\hat \lambda$ is approximated well enough by a $\chi^2$-distribution with $n - p$ degrees of freedom — than not to perform any correction at all — implicitly making the (certainly incorrect) assumption that $\lambda$ is known and equal to $\hat \lambda$. This suggestion is supported by simulation studies of the behavior of $T$ versus normal test statistics in case of strongly non-normal responses, which will not be reported here (see Tjur 1995).
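Spelled out as a heuristic sketch (not a proof; $D_0$, $D_1$, and $q$ are symbols introduced here for illustration): let $D_0$ and $D_1$ be the unscaled deviances of the nested and the larger model, which differ by $q$ parameters, and let $p$ be the number of parameters in the larger model. If, approximately and independently,

$$\frac{D_0 - D_1}{\lambda} \sim \chi^2_q, \qquad \frac{(n-p)\,\hat\lambda}{\lambda} \sim \chi^2_{n-p},$$

then the unknown $\lambda$ cancels in the ratio, and

$$F = \frac{(D_0 - D_1)/q}{\hat\lambda} \sim F_{q,\,n-p} \quad \text{(approximately)}.$$

Treating $\lambda$ as known and equal to $\hat\lambda$ would instead compare $(D_0 - D_1)/\hat\lambda$ to $\chi^2_q$, which ignores the variability in the denominator.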

I'd love to see suggestions for other references supporting/discussing/exploring this approach.

Tjur, Tue. “Nonlinear Regression, Quasi Likelihood, and Overdispersion in Generalized Linear Models.” The American Statistician 52, no. 3 (1998): 222–227.

-

So no one can prove that the F-distribution works as a hypothesis test? But it somehow works according to the literature? – Jul 24 '19 at 22:01

-

Someone might be able to prove it, or might already have proved it, to some level of rigor/under some conditions; I just can't point to a particular reference at the moment. – Ben Bolker Jul 24 '19 at 22:16

-

https://www.semanticscholar.org/paper/Detecting-differential-expression-in-RNA-sequence-Lund-Nettleton/dea152ac272a7371d271a1d6c80bdf2510f5367c Tjur (1998) "The QL approach then compares the ratio $\text{LRT}_k/(q\hat\Phi_k)$ to an appropriate F-distribution, where $\text{LRT}_k$ is a quasi-likelihood ratio test statistic for the $k$th gene, $q$ is the difference between the dimensions of the full and null-constrained parameter spaces, and $\hat\Phi_k$ is an estimate of the dispersion for the $k$th gene. These suggested QL methods are analogous to ANOVA with shrunken variance estimates, where deviances are analogs to sums of squares" – Jul 24 '19 at 22:34

-

http://rachel.org/lib/thyroid-disrupters.051101.pdf "To determine whether there was a statistically significant deviation from additivity, we used a quasi-likelihood ratio test to compare the empirical mixture model to the restricted additivity model based on an F-distribution." – Jul 24 '19 at 22:36

-

That's all I can find. "Note that the F-test is also a variation of the quasi-likelihood ratio test (QLR) on non-linear two- and three-stage least squares." (http://www-siepr.stanford.edu/workp/swp01001.pdf) – Jul 24 '19 at 22:37

-

Looking at the first paper you linked, all it gives in the way of justification for the F-test is "Tjur [1998] suggests ...". See comments/quotation above from Tjur 1998. I agree that lots of people *use* this approach, but I don't see anyone trying to justify it rigorously. There are references about quasi-likelihood $F$ tests, but they all seem to be applied papers justifying it rather than theoretical papers that are trying to provide a foundation – Ben Bolker Jul 24 '19 at 22:48

-

There are some papers talking about a quasi-likelihood ratio test, including https://arxiv.org/pdf/1606.00092.pdf and https://arxiv.org/pdf/1609.07958.pdf, but they mean a Gaussian quasi-likelihood ratio test and don't mention the F-distribution. – Jul 24 '19 at 23:33