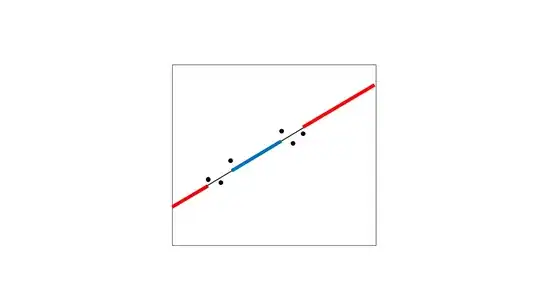

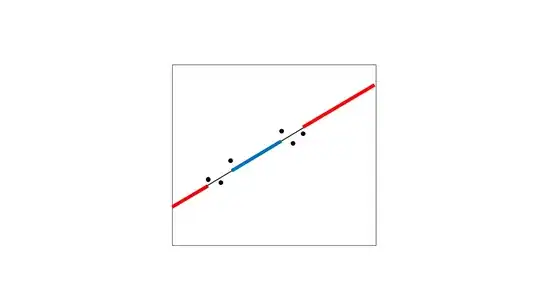

To add a visual explanation to this: let's consider a few points that you plan to model.

They look like they could be described well with a straight line, so you fit a linear regression to them:

This regression line lets you both interpolate (generate expected values in between your data points) and extrapolate (generate expected values outside the range of your data points). I've highlighted the extrapolation in red and the biggest region of interpolation in blue. To be clear, even the tiny regions between the points are interpolated, but I'm only highlighting the big one here.

Why is extrapolation generally more of a concern? Because you're usually much less certain about the shape of the relationship outside the range of your data. Consider what might happen when you collect a few more data points (hollow circles):

It turns out that the relationship was not captured well with your hypothesized relationship after all. The predictions in the extrapolated region are way off. Even if you had guessed the precise function that describes this nonlinear relationship correctly, your data did not extend over enough of a range for you to capture the nonlinearity well, so you may still have been pretty far off. Note that this is a problem not just for linear regression, but for any relationship at all - this is why extrapolation is considered dangerous.

Predictions in the interpolated region are also incorrect because of the lack of nonlinearity in the fit, but their prediction error is much lower. There's no guarantee that you won't have an unexpected relationship in between your points (i.e. the region of interpolation), but it's generally less likely.

I will add that extrapolation is not always a terrible idea - if you extrapolate a tiny bit outside the range of your data, you're probably not going to be very wrong (though it is possible!). Ancients who had no good scientific model of the world would not have been far wrong if they forecast that the sun would rise again the next day and the day after that (though one day far into the future, even this will fail).

And sometimes, extrapolation can even be informative - for example, simple short-term extrapolations of the exponential increase in atmospheric CO$_2$ have been reasonably accurate over the past few decades. If you were a student who didn't have scientific expertise but wanted a rough, short-term forecast, this would have given you fairly reasonable results. But the farther away from your data you extrapolate, the more likely your prediction is likely to fail, and fail disastrously, as described very nicely in this great thread: What is wrong with extrapolation? (thanks to @J.M.isnotastatistician for reminding me of that).

Edit based on comments: whether interpolating or extrapolating, it's always best to have some theory to ground expectations. If theory-free modelling must be done, the risk from interpolation is usually less than that from extrapolation. That said, as the gap between data points increases in magnitude, interpolation also becomes more and more fraught with risk.