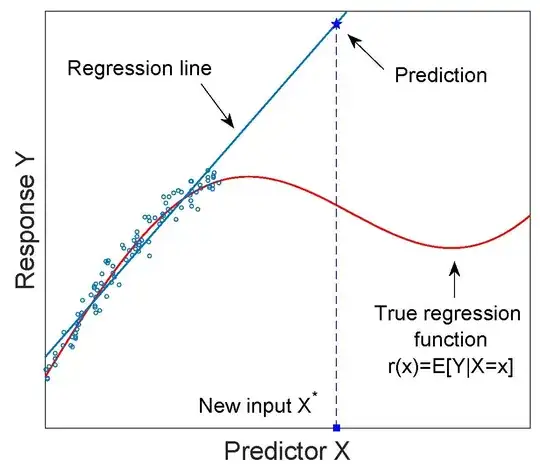

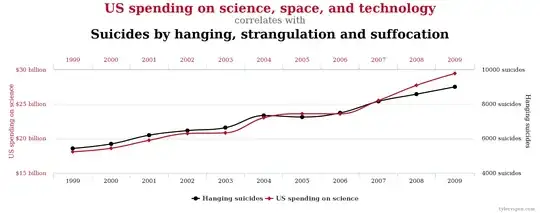

Contrary to other answers, I'd say that there is nothing wrong with extrapolation as far as it is not used in mindless way. First, notice that extrapolation is:

the process of estimating, beyond the

original observation range, the value of a variable on the basis of

its relationship with another variable.

...so it's very broad term and many different methods ranging from simple linear extrapolation, to linear regression, polynomial regression, or even some advanced time-series forecasting methods fit such definition. In fact, extrapolation, prediction and forecast are closely related. In statistics we often make predictions and forecasts. This is also what the link you refer to says:

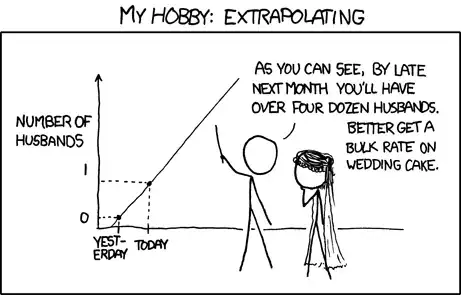

We’re taught from day 1 of statistics that extrapolation is a big

no-no, but that’s exactly what forecasting is.

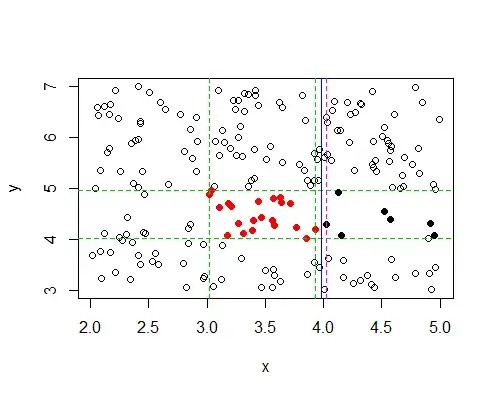

Many extrapolation methods are used for making predictions, moreover, often some simple methods work pretty well with small samples, so can be preferred then the complicated ones. The problem is, as noticed in other answers, when you use extrapolation method improperly.

For example, many studies show that the age of sexual initiation decreases over time in western countries. Take a look at a plot below about age of first intercourse in the US. If we blindly used linear regression to predict age of first intercourse we would predict it to go below zero at some number of years (accordingly with first marriage and first birth happening at some time after death)... However, if you needed to make one-year-ahead forecast, then I'd guess that linear regression would lead to pretty accurate short term predictions for the trend.

(source guttmacher.org)

Another great example comes from completely different domain, since it is about "extrapolating" for test done by Microsoft Excel, as shown below (I don't know if this is already fixed or not). I don't know the author of this image, it comes from Giphy.

All models are wrong, extrapolation is also wrong, since it wouldn't enable you to make precise predictions. As other mathematical/statistical tools it will enable you to make approximate predictions. The extent of how accurate they will be depends on quality of the data that you have, using methods adequate for your problem, the assumptions you made while defining your model and many other factors. But this doesn't mean that we can't use such methods. We can, but we need to remember about their limitations and should assess their quality for a given problem.