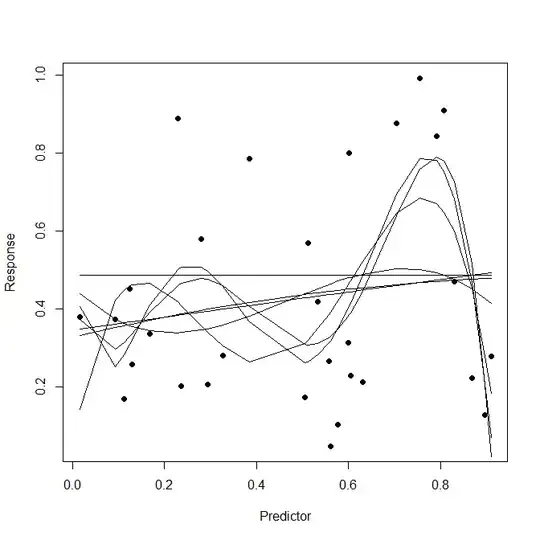

Suppose we have a regression model that we want to fit to training data and then use to make predictions on test data. There is a well-known danger that out-of-sample predictions will be poor due to "over-fitting" of the model to the training data.$^\dagger$ If I understand correctly, over-fitting occurs because (1) the fitting method optimises in-sample prediction error over the training data, and so conforms excessively to that data; or (2) the model-selection method does not adequately penalise complexity. Some analysts deal with over-fitting by using cross-validation on a "validation set". This still involves an optimisation, but the optimisation now uses out-of-sample predictions (i.e., the regression model is fit with the training data, but the prediction errors are obtained from the validation data).
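To make the in-sample versus out-of-sample contrast concrete, here is a minimal sketch under assumed conditions: simulated data from a quadratic plus Gaussian noise (a hypothetical data-generating process, not from the question), polynomial least-squares fits of increasing degree via `numpy.polyfit`, and a single held-out validation set. The helper name `poly_mse` is illustrative.

```python
import numpy as np

def poly_mse(x_fit, y_fit, x_eval, y_eval, degree):
    """Fit a least-squares polynomial of the given degree on (x_fit, y_fit)
    and return the mean squared prediction error on (x_eval, y_eval)."""
    coefs = np.polyfit(x_fit, y_fit, degree)
    resid = y_eval - np.polyval(coefs, x_eval)
    return float(np.mean(resid ** 2))

rng = np.random.default_rng(0)
n = 30
x = rng.uniform(-1, 1, size=2 * n)
# Hypothetical data-generating process: a quadratic plus Gaussian noise.
y = 1 + 2 * x - 3 * x ** 2 + rng.normal(scale=0.5, size=2 * n)

# First half is used for fitting, second half only for validation.
x_train, y_train = x[:n], y[:n]
x_val, y_val = x[n:], y[n:]

train_err = {}
val_err = {}
for d in range(1, 9):
    train_err[d] = poly_mse(x_train, y_train, x_train, y_train, d)
    val_err[d] = poly_mse(x_train, y_train, x_val, y_val, d)
    print(f"degree {d}: training MSE {train_err[d]:.3f}, "
          f"validation MSE {val_err[d]:.3f}")
```

Because the polynomial models are nested, the training MSE can only fall (or stay flat) as the degree grows, whatever the true model is; the validation MSE has no such guarantee, which is what makes it useful for detecting over-fitting.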

It occurs to me that there are already well-known methods for fitting a regression model on a "leave-one-out" basis (e.g., for linear regression we can minimise the LOOCV statistic instead of the residual sum of squares). So the obvious question is: why bother with a separate "validation" step? Why not just apply this leave-one-out fitting method to the training data from the start, so that we optimise out-of-sample prediction directly? Combined with an appropriate way to penalise model complexity (e.g., partial F-tests for model terms), this would seem to deal with over-fitting, while still using all the available data for each fit (since only one point is left out at a time, rather than a whole "validation set").
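For ordinary least squares, the LOOCV statistic does not require $n$ separate refits. With hat matrix $H = X(X^\top X)^{-1}X^\top$, leverages $h_{ii}$ and fitted values $\hat{y}_i$ from the full-data fit, the standard identity is

$$
\mathrm{CV}_{(n)} = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{y_i - \hat{y}_i}{1 - h_{ii}}\right)^2 .
$$

The sketch below, assuming simulated data and a design matrix with an intercept and one predictor, checks this identity numerically against brute-force refitting. The function names are hypothetical.

```python
import numpy as np

def loocv_shortcut(X, y):
    """LOOCV mean squared error for OLS via the hat-matrix identity
    (a single fit on all n points)."""
    # For full-column-rank X, pinv(X) = (X^T X)^{-1} X^T, so this is the hat matrix.
    H = X @ np.linalg.pinv(X)
    resid = y - H @ y
    leverage = np.diag(H)
    return float(np.mean((resid / (1 - leverage)) ** 2))

def loocv_brute_force(X, y):
    """LOOCV mean squared error by refitting OLS n times, each time
    predicting only the single held-out point."""
    n = len(y)
    errors = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        beta, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        errors[i] = y[i] - X[i] @ beta
    return float(np.mean(errors ** 2))

rng = np.random.default_rng(1)
n = 40
x = rng.uniform(-1, 1, size=n)
y = 1 + 2 * x + rng.normal(scale=0.5, size=n)  # hypothetical simulated data
X = np.column_stack([np.ones(n), x])           # intercept + one predictor

print(loocv_shortcut(X, y), loocv_brute_force(X, y))
```

Note that each leave-one-out fit here is still an ordinary least-squares fit; the LOOCV statistic scores the fitting procedure rather than supplying a different coefficient estimate.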

My questions: Am I right that over-fitting is caused by optimising based on errors from in-sample predictions? If so, does optimising based on errors from out-of-sample predictions avoid over-fitting? For example, if we fit a linear regression by minimising LOOCV (with a model-selection step that tests the inclusion of model terms), is that enough to avoid over-fitting?
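One common way to "minimise LOOCV" in practice is to use it as a model-selection criterion over candidate sets of terms, with the coefficients of each candidate still estimated by OLS. The sketch below assumes that interpretation, uses nested polynomial models as the candidates, and uses simulated data from a hypothetical quadratic model; it compares the degree chosen by minimum RSS with the degree chosen by minimum LOOCV.

```python
import numpy as np

def loocv_ols(X, y):
    """LOOCV mean squared error for OLS via the hat-matrix identity."""
    H = X @ np.linalg.pinv(X)
    resid = y - H @ y
    return float(np.mean((resid / (1 - np.diag(H))) ** 2))

def rss_ols(X, y):
    """Residual sum of squares of the OLS fit on all points."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(np.sum((y - X @ beta) ** 2))

def design(x, degree):
    """Design matrix with columns 1, x, x^2, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

rng = np.random.default_rng(2)
n = 40
x = rng.uniform(-1, 1, size=n)
y = 1 + 2 * x - 3 * x ** 2 + rng.normal(scale=0.5, size=n)  # hypothetical data

degrees = range(1, 9)
rss = {d: rss_ols(design(x, d), y) for d in degrees}
cv = {d: loocv_ols(design(x, d), y) for d in degrees}

best_by_rss = min(rss, key=rss.get)  # nested models: always (near) the largest degree
best_by_cv = min(cv, key=cv.get)     # trades fit against out-of-sample error
print("chosen by RSS:", best_by_rss, " chosen by LOOCV:", best_by_cv)
```

Minimising RSS over nested candidates always favours the most complex model, while the LOOCV criterion can stop earlier. This illustrates the distinction the question draws, but it does not by itself show that LOOCV-based selection fully removes over-fitting, since the selection step is itself an optimisation over the same data.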

$^\dagger$ There is an excellent discussion of over-fitting in Chapter 7 of Hastie, Tibshirani and Friedman (2016), in the context of a linear regression model with prediction error measured by squared-error loss. For this loss function, they link over-fitting to the bias-variance trade-off. Some answers on this site (e.g., here) also discuss over-fitting in terms of the bias-variance trade-off.
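For reference, the standard form of that decomposition (the notation here is assumed, not taken from the question) is as follows. Suppose $Y = f(X) + \varepsilon$ with $\mathrm{E}(\varepsilon) = 0$ and $\mathrm{Var}(\varepsilon) = \sigma_\varepsilon^2$. Then the expected squared prediction error of a fitted $\hat f$ at a point $x_0$ splits as

$$
\mathrm{Err}(x_0) = \mathrm{E}\big[(Y - \hat f(x_0))^2 \mid X = x_0\big]
= \sigma_\varepsilon^2 + \big[\mathrm{E}\hat f(x_0) - f(x_0)\big]^2 + \mathrm{E}\big[\hat f(x_0) - \mathrm{E}\hat f(x_0)\big]^2,
$$

that is, irreducible error plus squared bias plus variance. A more flexible model typically lowers the bias term but raises the variance term.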