Taking an OLS model (actually, is it a "model" or an "estimator"?) as an example, there are several assumptions (such as strict exogeneity and spherical errors) that are important for the consistency and the efficiency of the estimator.

If we are interested in making inferences about the influence that different predictors have on the outcome, consistency is clearly crucial. However, if we only want to make predictions by applying the estimated linear relationship to a different set of observations (where we don't expect significant differences between the samples, e.g. the predictors cover approximately the same range), do we really care that much about consistency?

Addendum 1

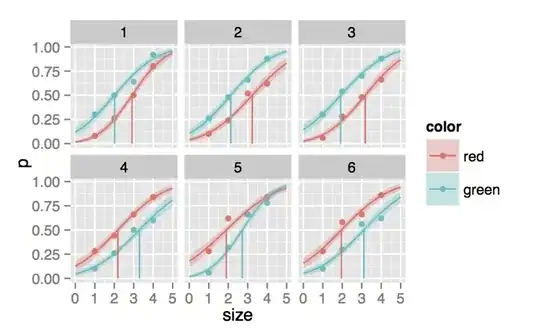

I ran simulations comparing the out-of-sample MSE of OLS, maximum likelihood (MLE), and the method of moments (MM, i.e. using sample moments) for the model

$$y_i = x_i \exp(\sigma z_i)$$

with $z_i \sim N(0, 1)$ i.i.d., $\sigma = 0.5$, and equally spaced $x \in [1, 10]$, for $n \in \{2^k : k = 4, \dots, 12\}$. The expected value of $y$ is linear in $x$

$$E[y|x] = x\exp(0.5 \sigma^2)$$

but, for an arbitrary line $a + bx$, the errors are not exogenous

$$E[\epsilon | x] = E[a + bx - y| x] = E[a + bx - x\exp(\sigma z)| x] = a+bx-x\exp(0.5 \sigma^2) \ne 0 \quad \text{unless } a = 0,\ b = \exp(0.5\sigma^2)$$

and not spherical (the conditional variance grows with $x^2$, i.e. the errors are heteroskedastic)

$$Var[\epsilon | x] = Var[a + bx - y| x] = Var[y| x] = x^2 Var[\exp(\sigma z)| x] = x^2 (e^{\sigma^2}-1) e^{\sigma^2}$$
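The closed forms above can be checked numerically. This is a minimal NumPy sketch, assuming $z_i \sim N(0,1)$ i.i.d. (the distribution implied by the log-normal moments used above). It draws one sample from the data-generating process and compares Monte Carlo estimates of $E[y \mid x]$, $E[\epsilon \mid x]$ and $Var[\epsilon \mid x]$ with the formulas at a few fixed $x$. The names `simulate`, `SAMPLE_SIZES`, `cond_mean_eps` and `cond_var_eps` are hypothetical helpers, not taken from the original simulation code.

```python
import numpy as np

SIGMA = 0.5                            # sigma used in the post
rng = np.random.default_rng(0)

def simulate(n, sigma=SIGMA, rng=None):
    """One sample from y_i = x_i * exp(sigma * z_i), assuming z_i ~ N(0, 1) i.i.d.,
    with x equally spaced on [1, 10]."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.linspace(1.0, 10.0, n)
    y = x * np.exp(sigma * rng.standard_normal(n))
    return x, y

# Sample sizes n = 2^k for k = 4, ..., 12
SAMPLE_SIZES = [2**k for k in range(4, 13)]

# Closed forms from the text
slope = np.exp(0.5 * SIGMA**2)         # E[y | x] = slope * x

def cond_mean_eps(a, b, x):
    """E[eps | x] for eps = a + b*x - y."""
    return a + b * x - slope * x

def cond_var_eps(x):
    """Var[eps | x] = x^2 (e^{sigma^2} - 1) e^{sigma^2} (log-normal variance, mu = 0)."""
    return x**2 * (np.exp(SIGMA**2) - 1.0) * np.exp(SIGMA**2)

# Monte Carlo check at fixed x values, reusing one large batch of z draws
z = rng.standard_normal(1_000_000)
checks = {}
for x0 in (1.0, 5.0, 10.0):
    y = x0 * np.exp(SIGMA * z)
    eps_generic = 0.0 + 1.0 * x0 - y   # an arbitrary line: a = 0, b = 1
    eps_true = 0.0 + slope * x0 - y    # the line a = 0, b = exp(0.5 sigma^2)
    checks[x0] = {                     # (Monte Carlo, closed form) pairs
        "mean_y": (y.mean(), slope * x0),
        "mean_eps_generic": (eps_generic.mean(), cond_mean_eps(0.0, 1.0, x0)),
        "mean_eps_true": (eps_true.mean(), cond_mean_eps(0.0, slope, x0)),
        "var_eps": (eps_true.var(), cond_var_eps(x0)),  # a + b*x is constant given x
    }

for x0, c in checks.items():
    print(x0, {k: tuple(round(float(v), 3) for v in pair) for k, pair in c.items()})
```

The Monte Carlo and closed-form values should agree up to simulation error. The mean of `eps_true` is close to zero because that particular line coincides with $E[y \mid x]$, while the variance grows with $x^2$ for any choice of line.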

For MLE and MM, the correct log-normal model was specified. The plot above shows the mean out-of-sample MSE. In this setup, OLS underperforms for sample sizes below 128, but its MSE has a standard deviation around 10% smaller for $n \le 32$.
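A comparison of this kind could be sketched as follows. The estimator details are assumptions, since the original simulation code is not shown here. OLS fits $y = a + bx$ with an intercept. MLE assumes $\log(y/x) \sim N(\mu, \sigma^2)$ with both parameters estimated and predicts $x\exp(\hat\mu + \hat\sigma^2/2)$. MM predicts $x$ times the sample mean of $y/x$; under the log-normal model, matching the first two moments of $y/x$ gives the same prediction. The test set is a fresh draw at the same $x$ values, matching the "same range" scenario in the question. `fit_predict` and `simulate_mse` are hypothetical names, and the loop only prints results; no particular numbers are claimed.

```python
import numpy as np

def fit_predict(x_tr, y_tr, x_te):
    """Test-set predictions from three estimators (assumed forms, see lead-in)."""
    # OLS with intercept: y ~ a + b x
    X = np.column_stack([np.ones_like(x_tr), x_tr])
    (a, b), *_ = np.linalg.lstsq(X, y_tr, rcond=None)
    ols = a + b * x_te

    # MLE under log(y/x) ~ N(mu, s2); then E[y | x] = x * exp(mu + s2 / 2)
    r = np.log(y_tr / x_tr)
    mu, s2 = r.mean(), r.var()        # ddof=0 variance is the Gaussian MLE
    mle = x_te * np.exp(mu + 0.5 * s2)

    # MM: E[y/x] = exp(mu + s2 / 2), estimated by the sample mean of y/x
    mm = x_te * np.mean(y_tr / x_tr)
    return {"OLS": ols, "MLE": mle, "MM": mm}

def simulate_mse(n, reps=500, sigma=0.5, seed=0):
    """Mean and standard deviation of out-of-sample MSE over `reps` replications."""
    rng = np.random.default_rng(seed)
    x = np.linspace(1.0, 10.0, n)
    mse = {k: np.empty(reps) for k in ("OLS", "MLE", "MM")}
    for i in range(reps):
        y_tr = x * np.exp(sigma * rng.standard_normal(n))  # training sample
        y_te = x * np.exp(sigma * rng.standard_normal(n))  # fresh test draws, same x
        for name, pred in fit_predict(x, y_tr, x).items():
            mse[name][i] = np.mean((y_te - pred) ** 2)
    return {name: (v.mean(), v.std()) for name, v in mse.items()}

for k in range(4, 13):
    n = 2**k
    res = simulate_mse(n, reps=200)
    print(n, {name: (round(float(m), 3), round(float(s), 3)) for name, (m, s) in res.items()})
```

Because the test draws are independent of the training draws, each MSE is bounded below by the average of $Var[y \mid x]$ over the design points; differences between the estimators show up only in the estimation-error part on top of that.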