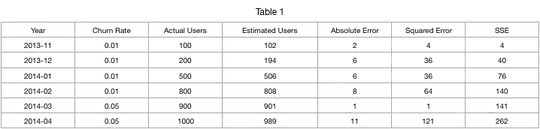

I have an Excel model which predicts the number of customers for a given month. The prediction depends on a churn rate. I have the absolute error (actual vs predicted), along with squared error and sum of square error.

My question is:

Would it better to find a churn rate that minimizes the absolute for each period (year, month) or find a churn rate that minimizes the sum of squared errors? Does the former even make sense to do?