I am attempting to find the most appropriate way of analysing a set of skewed data, and I'm torn between two different approaches (each approach appears to offer its own advantages and disadvantages). I'm hoping that somebody might be able to offer some advice.

My question is: given a highly skewed data set, are the following two approaches sensible and roughly equivalent to each other?

- Perform an appropriate transformation on the response variable, and then fit a (nested) linear mixed model to the transformed data.

- Calculate within-subject medians, and then perform a (paired) t-test on the medians.

I now provide a more detailed description of my data and the two proposed approaches, along with a working example in R.

Description of data

I have n=6 subjects, where each subject contains a population of cells. Each cell is of a specific type, and there are only two possible types. Given a sample of cells within each subject, I have measured the speed of each cell, and I would like to test whether or not there is evidence that cells of one type tend to have a higher speed than cells of the other type.

One important consideration is that my data set is unbalanced (one cell type has between 15 and 70 observations per subject, whilst the other cell type has between 100 and 500 observations per subject).

Possible methods of analysis

Method 1: Data transformation followed by linear mixed model

Since the data are skewed, it seems like a sensible idea to perform some kind of transformation in an attempt to make the data as normal as possible. Using the Box Cox transformation, I was able to find a suitable transformation to serve this purpose. I then proceeded to fit a linear mixed model, in which "cell type" is treated as a fixed effect, "subject" is treated as a random effect (since observations within a subject are not independent of one another), and "cell type" is nested within "subject", viz. $$f(\mbox{value}) \sim \mbox{type} + (1|\mbox{subject}/\mbox{type}),$$ where $f(\mbox{value})$ denotes the Box Cox transformation of value.

Upon fitting this mixed model to my data, I was able to conclude that there is evidence of a difference in the mean of $f(\mbox{value})$ between the two cell types -- which, in turn, allows me to conclude that there is evidence of a difference in median speed between the two cell types. The assumptions of the mixed model appear to be satisfied.

Overall, this method seems to be suitable for analysing my data. However, since it involves analysing transformed data, I can't quantify the effect size on anything other than the transformed scale. If possible, I would like to be able to make some sort of statement regarding the magnitude of the effect size. This leads me on to the second method that is under consideration...

Method 2: Calculate within-subject medians and perform a paired t-test

Since we are dealing with skewed data, the median value is probably a more appropriate measure of central tendency than the mean. Moreover, since we have a relatively small number of observations for some subjects, the median will be more robust to outliers. I therefore begin by taking the median speed across all cells of a given type within each subject. This results in six pairs of medians (one pair for each subject, where each pair comprises a median for each of the two cell types). We can then perform a paired t-test on the resulting pairs of medians.

This method has the benefit of working entirely on the natural (untransformed) scale, thereby allowing us to quantify the typical difference in median speed between the two cell types in addition to testing for a statistically significant difference. It's also a much simpler approach than fitting mixed models, so all things being equal it would be my preferred choice.

Specific questions

I am concerned that I might be making some dangerous assumptions for Method 2. For example

- Is it appropriate to work with subject-medians when the data are skewed and some groups comprise few (~15) data points?

- Does the unbalanced nature of the study (with far fewer observations for one cell type than the other) pose any problems when performing a paired t-test on subject-medians?

Both methods produce very similar results in terms of statistical significance for my own data and a representative dummy data set that I have prepared (see below), which suggests that I'm (hopefully) not doing anything horribly wrong. But I would really appreciate some advice or reassurance that Method 2 is appropriate in this case.

Complete R code, using representative dummy data

The below R code outlines both of my proposed methods. Note that both methods produce similar results in terms of statistical significance.

# Load required libraries

library(beeswarm)

library(MASS)

library(nlme)

# Generate dummy data

# (N.B. unbalanced design -- n is much smaller in type 1)

# type 1:

n1 <- c(15,15,20,70,25,35)

m1 <- c(-1.9,-1.3,-1.4,-1.6,-1.1,-1.7)

s1 <- c(0.6,0.5,0.8,1,0.7,0.9)

# type 2:

n2 <- c(100,200,250,480,130,270)

m2 <- c(-2,-1.9,-1.7,-2.9,-2.2,-2.2)

s2 <- c(0.8,1.1,1,1,1.2,1.1)

# Set random number seed and populate data frame:

set.seed(123)

dat <- data.frame(x=NULL,subject=NULL,type=NULL)

for(i in 1:6){

dat <- rbind(dat,

cbind(x=rnorm(n1[i],m1[i],s1[i]),

subject=i,

type=1))

dat <- rbind(dat,

cbind(x=rnorm(n2[i],m2[i],s2[i]),

subject=i,

type=2))

}

# Subject and type are factors

dat$subject <- factor(dat$subject)

dat$type <- factor(dat$type)

# Transform the data (inverse of Box Cox power transformation)

lambda <- 0.155

dat$x <- (1 + lambda*dat$x)**(1/lambda)

# Data preparation complete.

# Method 1: Transform the data and fit a linear mixed model

# to the transformed data.

# 1.1 Find an appropriate Box Cox transformation

bc <- boxcox(x ~ type+subject,data=dat,lambda=seq(-2,2,by=0.005))

lambda <- bc$x[which(bc$y==max(bc$y))]

dat$bc <- (dat$x**lambda - 1)/lambda

# 1.2 Fit a linear mixed model, where type is nested within subject

lmm <- lme(bc~type,

random= ~ 1 | subject/type,

data=dat)

# 1.3 Inspect the results ('type' has p-value 0.0322)

summary(lmm)

# 1.4 Check residuals for normality (all okay)

qqnorm(residuals(lmm))

qqline(residuals(lmm))

# Method 2: Calculate the median of each subject using the

# untransformed data and perform a paired t-test on

# the two resulting groups of medians.

# 2.1 Calculate the median of each type within each subject

meds <- aggregate(x~subject+type,data=dat,median)

# 2.2 Visually inspect the distribution of medians

beeswarm(x~type,data=meds,pch=21,pwbg=as.numeric(meds$subject))

# 2.3 Calculate paired differences between medians

g1 <- meds$x[which(meds$type==1)]

g2 <- meds$x[which(meds$type==2)]

diffs <- g1-g2

# 2.4 perform a one sample t-test ('type' has p-value 0.03705)

t.test(diffs)

EDIT

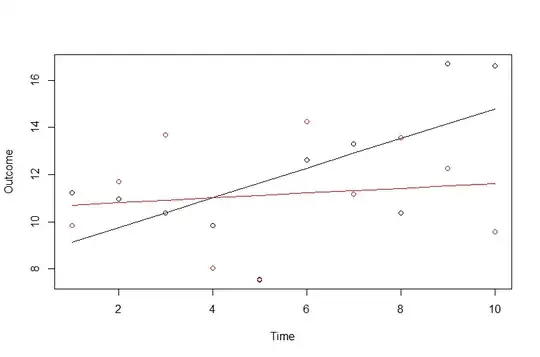

Following an incredibly useful discussion with Robert Long, I think it would be helpful for me to illustrate this particular study design.

Note that the six subjects can be treated as independent of one another. There are multiple cells within each subject, and each cell is one of two possible types (so there are many cells of each type within a subject, with an unbalanced split -- i.e. there are not the same number of cells of each type within a subject). Clearly, cells within the same subject are not independent of one another, and type is repeated within subjects. This is what I am attempting to capture in Method 1, by nesting type within subject (1|subject/type).