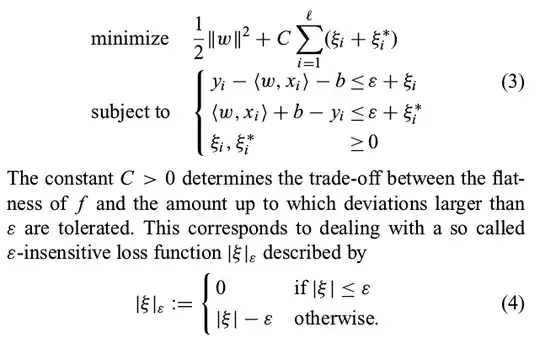

As far as I understand, the value of epsilon determines which data points (the support vectors) are included in the computation: these are the points that lie on or outside the 'tube' of width 2*epsilon.
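This relationship between epsilon and the support vectors could be checked with a minimal sketch like the one below. The synthetic noisy sine data, the helper name `count_support_vectors`, and the chosen epsilon values are assumptions made for illustration only; the sketch relies on the `support_` attribute of a fitted `sklearn.svm.SVR`.

```python
import numpy as np
from sklearn.svm import SVR

# Synthetic data (an assumption for illustration): a noisy sine curve.
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 5, size=(200, 1)), axis=0)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=200)


def count_support_vectors(epsilon, C=1.0):
    """Fit an RBF SVR and return how many training points are support vectors."""
    model = SVR(kernel="rbf", C=C, epsilon=epsilon).fit(X, y)
    return len(model.support_)


# Hypothetical epsilon values, from a narrow tube to a wide one.
sv_counts = {eps: count_support_vectors(eps) for eps in (0.01, 0.1, 0.5)}
for eps, n_sv in sv_counts.items():
    print(f"epsilon={eps}: {n_sv} support vectors out of {len(y)}")
```

A wider tube leaves more points strictly inside it, so the count should drop as epsilon grows.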

On the other hand, the C in the minimization problem seems to penalize deviations beyond the epsilon margin. Does it therefore affect the number of support vectors as well? Doesn't increasing it decrease them?

This contradicts what I observe when using sklearn.svm.SVR: computation time increases noticeably when I increase the value of C. If a larger C led to fewer support vectors, I would expect training to get faster, not slower. Why does increasing the constant C result in longer computation time?
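To make the observation reproducible, here is a rough sketch that records both the fit time and the number of support vectors for several values of C. The synthetic data, the helper name `fit_stats`, and the grid of C values are assumptions for illustration and not the original setup; absolute timings will vary by machine and dataset.

```python
import time

import numpy as np
from sklearn.svm import SVR

# Synthetic data (an assumption for illustration): a noisy sine curve.
rng = np.random.default_rng(0)
X = rng.uniform(0, 5, size=(300, 1))
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=300)


def fit_stats(C, epsilon=0.1):
    """Fit an RBF SVR and return (fit time in seconds, number of support vectors)."""
    model = SVR(kernel="rbf", C=C, epsilon=epsilon)
    start = time.perf_counter()
    model.fit(X, y)
    elapsed = time.perf_counter() - start
    return elapsed, len(model.support_)


# Hypothetical grid of C values spanning several orders of magnitude.
results = {C: fit_stats(C) for C in (0.1, 1.0, 10.0, 100.0)}
for C, (elapsed, n_sv) in results.items():
    print(f"C={C}: fit took {elapsed:.4f} s, {n_sv} support vectors")
```

Printing both quantities side by side shows whether the slowdown tracks the support vector count or varies independently of it.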