The true parameter/regression coefficients

Linear regression assumes the model:

$$y_i = \boldsymbol{\beta} \mathbf{x_i} +\epsilon_i$$

where $\boldsymbol\beta$ is assumed fixed and only the residual term $\epsilon_i$ is assumed to be distributed according to some distribution.

So the true parameter/coefficient is assumed fixed, and is not assumed to be related to a distribution (That is in linear regression, one could think of alternative models that do express distributions for the coefficients)

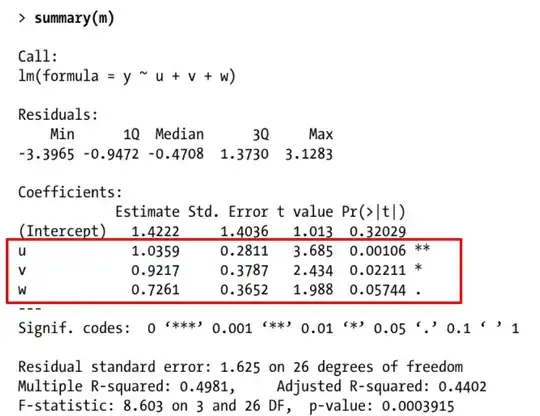

The estimated parameter/regression coefficients

While the true $\boldsymbol{\beta}$ may be fixed, the estimated $\boldsymbol{\hat\beta}$ may be considered to follow some distribution (the estimate depends on a sample/data that varies for every new experiment, thus the estimate can be considered a random variable). This leads to two different way to express the estimation of the parameter, point estimates and interval estimates, and in this difference you may find the intuition for reporting additional estimates as standard error, t-value, confidence interval:

The interval estimate gives a bit better idea about what information the data carries. It is not only an estimate for a single population parameter, but it also conveys something like the strength of the information that the data carries, ie how far other values than this single estimate, $\boldsymbol{\hat\beta}$ , could still be reasonable alternatives for the unknown parameter $\boldsymbol{\beta}$.

More data, or data with less noise, leads to a smaller deviance of the estimate $\boldsymbol{\hat\beta}$ (and this deviance can be estimated from the data), which means that not every point estimate can be considered the same. With more data or smaller noise levels the estimate is more likely 'close' to the true unknown parameter. Just a single point estimate does not convey this deviance and how 'close' the point estimate likely is.