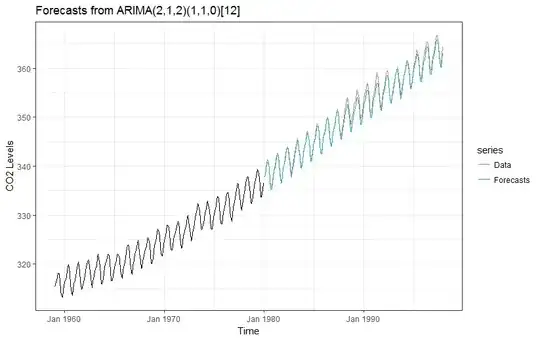

(1) You should "mix" the approaches by using a single model that captures both features. When your data exhibit multiple features (e.g., drift and seasonality), it is a good idea to use a model that captures all of them together, rather than making ad hoc changes to a model that captures only one feature of the data. If you have a seasonal component with a fixed frequency, you can add it to your model through an appropriate seasonal variable. For monthly data with an annual seasonal component, this can be done by adding `factor(month)` as an explanatory variable in your model. With both a drift term and a seasonal term in the model, you estimate the two effects simultaneously, each adjusted for the presence of the other. You can then forecast directly from the fitted model without having to make ad hoc changes.
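As a rough illustration, here is a minimal sketch in Python/NumPy rather than R. It assumes the "drift" is modelled as a linear time trend and the seasonality as eleven month dummies, with January as the baseline, analogous to R's default coding of `factor(month)`. The data are simulated and the helper `design_matrix` is hypothetical. Both terms are estimated jointly by ordinary least squares, and the forecast comes straight from the fitted coefficients.

```python
import numpy as np

def design_matrix(t, month):
    """Intercept, linear time trend (drift), and 11 month dummies.

    Month 0 (January) is the baseline level, mirroring R's default
    treatment coding for factor(month).
    """
    t = np.asarray(t, dtype=float)
    month = np.asarray(month)
    dummies = np.column_stack([(month == m).astype(float) for m in range(1, 12)])
    return np.column_stack([np.ones_like(t), t, dummies])

# Hypothetical simulated data: 10 years of monthly observations
rng = np.random.default_rng(0)
n = 120
t = np.arange(n)
month = t % 12
true_slope = 0.5
seasonal = 3.0 * np.sin(2 * np.pi * month / 12)
y = 10.0 + true_slope * t + seasonal + rng.normal(scale=1.0, size=n)

# Fit drift and seasonality jointly by ordinary least squares
X = design_matrix(t, month)
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
slope_hat = beta[1]  # estimated drift per month, adjusted for seasonality

# Forecast the next 12 months directly from the fitted model (no ad hoc changes)
t_new = np.arange(n, n + 12)
forecast = design_matrix(t_new, t_new % 12) @ beta
print(f"estimated drift per month: {slope_hat:.3f}")
print(np.round(forecast, 2))
```

Because the trend and the dummies sit in one design matrix, the slope estimate is not contaminated by the seasonal swings, and vice versa.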

(2) Predictions are functions of observed data; they are not new data. When you make forward predictions from time-series data, your predictions are functions of the observed data and of the parameter estimates from your fitted model. For time-series models with an auto-regressive component, the predictions can be written more simply by expressing later predictions in terms of earlier ones. The later predictions are still implicitly functions of the observed data and the estimated parameters; they are just written in a compact form through the previous predictions.

For example, suppose you observe $y_1, \ldots, y_T$ and estimate parameters $\hat{\tau}$ for a model. If your model has an auto-regressive component, you make predictions $\hat{y}_{T+1} = f(y_1, \ldots, y_T, \hat{\tau})$ and $\hat{y}_{T+2} = f(y_1, \ldots, y_T, \hat{y}_{T+1}, \hat{\tau})$, where the later prediction is expressed as a function of the earlier one. Since $\hat{y}_{T+1}$ is itself a function of $y_1, \ldots, y_T, \hat{\tau}$, the prediction $\hat{y}_{T+2}$ is still an implicit function of $y_1, \ldots, y_T, \hat{\tau}$; writing it through $\hat{y}_{T+1}$ is just a shorthand that takes advantage of the auto-regression.
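To make this concrete, take an AR(1) model $y_t - \mu = \phi (y_{t-1} - \mu) + \varepsilon_t$, so that $\hat{\tau} = (\hat{\mu}, \hat{\phi})$. The sketch below is a minimal illustration with hypothetical estimates and a hypothetical last observation. It computes the point forecasts recursively, plugging each prediction into the next step, and also in closed form as $\hat{y}_{T+h} = \hat{\mu} + \hat{\phi}^h (y_T - \hat{\mu})$. Both routes give the same numbers, which shows that the recursion is only a shorthand. For an AR(1) only $y_T$ enters, but the idea carries over to higher orders.

```python
def recursive_forecasts(y_last, mu_hat, phi_hat, horizon):
    """Iterated AR(1) point forecasts: each step plugs in the previous prediction."""
    preds = []
    prev = y_last
    for _ in range(horizon):
        prev = mu_hat + phi_hat * (prev - mu_hat)
        preds.append(prev)
    return preds

def closed_form_forecasts(y_last, mu_hat, phi_hat, horizon):
    """The same forecasts written explicitly as functions of y_T and the estimates."""
    return [mu_hat + phi_hat ** h * (y_last - mu_hat) for h in range(1, horizon + 1)]

# Hypothetical parameter estimates and last observed value y_T
mu_hat, phi_hat, y_T = 5.0, 0.8, 9.0
rec = recursive_forecasts(y_T, mu_hat, phi_hat, 5)
closed = closed_form_forecasts(y_T, mu_hat, phi_hat, 5)
for h, (a, b) in enumerate(zip(rec, closed), start=1):
    print(h, round(a, 4), round(b, 4))
```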

If you do this correctly, your uncertainty about the predictions (e.g., prediction intervals) should account for the uncertainty in the earlier predictions, so it will tend to "balloon" as you move further and further from the observed data. You must make sure that the earlier predictions are not treated as new observed data; i.e., the prediction $\hat{y}_{T+1}$ is not the same as the actual data point $y_{T+1}$. As long as you handle this correctly, accounting for the additional uncertainty, there is no problem with expressing later predictions in terms of earlier predictions.
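For the same AR(1) setup, the $h$-step forecast error variance is $\sigma^2 \sum_{j=0}^{h-1} \phi^{2j}$, which grows with the horizon. The following is a minimal sketch using hypothetical values of $\hat{\phi}$ and $\hat{\sigma}$, treated as known, so parameter-estimation uncertainty is ignored. It contrasts the correct standard errors with a naive version that treats each earlier prediction as if it were observed data. That mistake would leave the error stuck at the one-step value $\sigma$ at every horizon.

```python
import math

def ar1_forecast_se(phi, sigma, horizon):
    """h-step forecast standard errors for a stationary AR(1), treating phi and sigma as known."""
    ses = []
    var = 0.0
    for h in range(1, horizon + 1):
        # each extra step adds the propagated variance of one more unseen shock
        var += sigma ** 2 * phi ** (2 * (h - 1))
        ses.append(math.sqrt(var))
    return ses

# Hypothetical estimates
phi_hat, sigma_hat = 0.8, 1.0
correct_se = ar1_forecast_se(phi_hat, sigma_hat, 20)
naive_se = [sigma_hat] * 20  # wrong: pretends each earlier prediction was observed data

limit = sigma_hat / math.sqrt(1 - phi_hat ** 2)  # unconditional s.d. of the process
for h in (1, 2, 5, 20):
    print(h, round(correct_se[h - 1], 3), naive_se[h - 1])
```

For a stationary AR model the correct standard error grows but levels off at the process's unconditional standard deviation. For a random walk (with or without drift) it grows without bound, proportional to $\sqrt{h}$.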