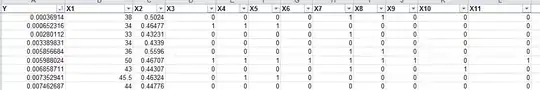

I have 2702 records with one target variable (Y) and 11 independent/predictor variables (X1-X11).

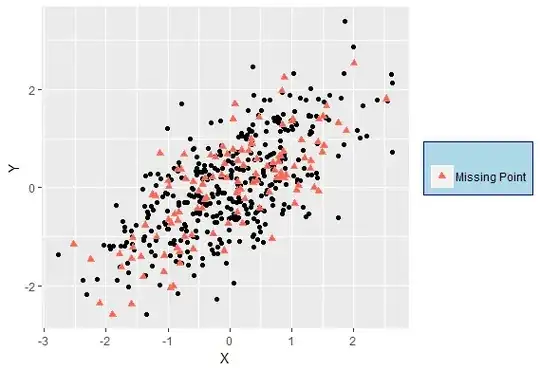

I am doing multivariable regression to understand whether I can predict Y from the Xs, or whether there is any correlation between Y and the Xs.


Here is the ANOVA result.

Conclusion 1: Since my R-squared is low, I do not have a good model. The independent variables I have are either not doing a good job of explaining the variation in Y, or they are not enough to explain it.
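For reference, R-squared is the share of Y's total variation around its mean that the fitted model accounts for: 1 minus the residual sum of squares divided by the total sum of squares. The sketch below computes it with plain NumPy least squares on hypothetical synthetic data of the same shape as this dataset (2702 rows, 11 predictors); the data and the helper name `r_squared` are illustrative assumptions, not the actual records.

```python
import numpy as np

def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """Fit ordinary least squares with an intercept and return R^2 = 1 - SS_res / SS_tot."""
    # Prepend a column of ones so the model includes an intercept term
    design = np.column_stack([np.ones(len(y)), X])
    # Least-squares coefficients (intercept first, then one per predictor)
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    ss_res = float(residuals @ residuals)        # variation the model leaves unexplained
    ss_tot = float(((y - y.mean()) ** 2).sum())  # total variation of y around its mean
    return 1.0 - ss_res / ss_tot

# Hypothetical synthetic data (NOT the real records): 2702 rows, 11 predictors,
# with only a weak signal in X1 and X2 buried in a lot of noise
rng = np.random.default_rng(0)
X = rng.normal(size=(2702, 11))
y = 0.1 * X[:, 0] + 0.1 * X[:, 1] + rng.normal(size=2702)
print(round(r_squared(X, y), 3))
```

With a weak signal like this, R-squared comes out small even though X1 and X2 genuinely affect y.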


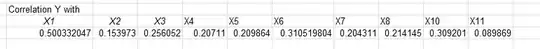

Confusion 1: Since the p-values for the individual variables X1, X2, X3, X7, X8, X10 and X11 are significant, can I conclude that there is a strong correlation between Y and each of these variables?
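One relevant fact when reading p-values: for a single Pearson correlation r computed from n rows, the test statistic is t = r·sqrt(n − 2)/sqrt(1 − r²), so the same r becomes "more significant" as n grows. The sketch below uses that formula with a large-sample normal approximation to the t distribution (an assumption; the exact test uses the t distribution with n − 2 degrees of freedom). The coefficient p-values in a multiple regression test partial effects rather than simple correlations, but they depend on sample size in an analogous way. The function name is hypothetical.

```python
import math

def correlation_p_value(r: float, n: int) -> float:
    """Approximate two-sided p-value for H0: rho = 0, given sample correlation r from n rows.

    Uses t = r * sqrt(n - 2) / sqrt(1 - r^2) and approximates the t distribution by a
    standard normal (reasonable for large n), so p is about erfc(|t| / sqrt(2)).
    """
    t = r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)
    return math.erfc(abs(t) / math.sqrt(2.0))

# The same weak correlation (r = 0.05, so r^2 = 0.0025) at two sample sizes
for n in (100, 2702):
    print(n, round(correlation_p_value(0.05, n), 4))
```

Here r = 0.05 is far from significant at n = 100 but falls below 0.05 at n = 2702, while still describing a relationship that explains only 0.25% of the variance.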

Confusion 2: Even though those p-values are significant, the correlations with Y are not noticeably different between the significant variables and the non-significant ones.
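To make the comparison in Confusion 2 concrete, the per-variable correlations with Y can be computed in one call with `np.corrcoef`. This is a minimal sketch on hypothetical synthetic data (not the real records); the helper name `correlations_with_target` is illustrative.

```python
import numpy as np

def correlations_with_target(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Pearson correlation of each column of X with y (one value per predictor)."""
    # np.corrcoef treats each row as a variable, so stack y on top of the transposed predictors
    full = np.corrcoef(np.vstack([y, X.T]))
    # Row 0 holds y's correlations with every variable; drop the y-with-y entry
    return full[0, 1:]

# Hypothetical data: 2702 rows, 11 predictors, only the first two weakly related to y
rng = np.random.default_rng(1)
X = rng.normal(size=(2702, 11))
y = 0.1 * X[:, 0] + 0.1 * X[:, 1] + rng.normal(size=2702)
for i, r in enumerate(correlations_with_target(X, y), start=1):
    print(f"X{i}: r = {r:+.3f}, r^2 = {r * r:.4f}")
```

Printing r² alongside r shows how much of Y's variance each variable would explain on its own.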

So I am really confused about how to interpret my results. Based on Conclusion 1 and Confusion 2, I feel like saying "there is no relation between Y and X", but then how do I interpret Confusion 1?

Any help would be appreciated. I would also like to know what material I can study to fill the gap in my understanding of scenarios like this one. I feel like I am missing a piece of my understanding of data science.