I am getting a strange output from sklearn's LogisticRegression, where my trained model classifies all observations as 1s.

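In [1]:

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

logit = LogisticRegression(C=10e9, random_state=42)
model = logit.fit(X_train, y_train)

classes = model.predict(X_test)      # hard 0/1 labels
probs = model.predict_proba(X_test)  # one column of probabilities per class

print(np.bincount(classes))
```

Out [1]:

```
[ 0 2458]
```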

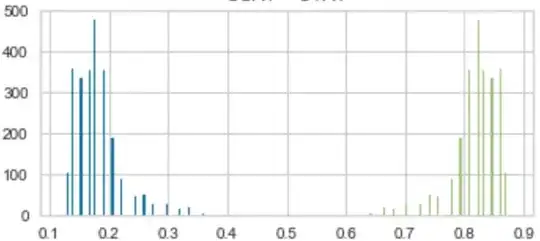

But look at the predicted probabilities:

How is this possible?

I know there is another post on this (here), but it does not answer this question. I understand that my classes are imbalanced (the uniform classification goes away when I pass `class_weight='balanced'`).
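For context on the `class_weight='balanced'` observation: according to the scikit-learn documentation, "balanced" weights each class by `n_samples / (n_classes * np.bincount(y))`, so the minority class counts for more in the loss, which is presumably why the predictions stop being all 1s. The sketch below uses a hypothetical label vector (900 positives, 100 negatives), not the actual `y_train`, and checks `sklearn.utils.class_weight.compute_class_weight` against that formula.

```python
import numpy as np
from sklearn.utils.class_weight import compute_class_weight

# Hypothetical labels, NOT the asker's data: 900 positives and 100 negatives.
y_train = np.array([1] * 900 + [0] * 100)
classes = np.unique(y_train)

# Weights scikit-learn derives for class_weight='balanced'.
weights = compute_class_weight(class_weight="balanced", classes=classes, y=y_train)

# Same weights by hand: n_samples / (n_classes * np.bincount(y)).
manual = len(y_train) / (len(classes) * np.bincount(y_train))

print(weights)  # the minority class 0 receives the larger weight
print(manual)
```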

However, I want to understand why sklearn classifies observations whose predicted probability is below 0.5 as a positive event.
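One thing worth ruling out (a guess, since the plotting code is not shown): `predict_proba` returns one column per class, ordered as in `model.classes_`, so with labels 0/1 the probability of a positive event is `probs[:, 1]`, while `probs[:, 0]` is the probability of class 0. The sketch below assumes hypothetical synthetic data from `make_classification` (roughly 90% class 1) as a stand-in for `X_train`/`X_test`, and checks that `predict` agrees both with the per-row argmax of `predict_proba` and with a 0.5 threshold on the positive-class column.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: imbalanced binary labels, roughly 90% class 1.
X, y = make_classification(n_samples=2000, n_features=5, weights=[0.1, 0.9], random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

model = LogisticRegression(C=10e9, random_state=42).fit(X_train, y_train)
classes = model.predict(X_test)
probs = model.predict_proba(X_test)

# Columns of predict_proba follow model.classes_; each row sums to 1.
print(model.classes_)
pos_col = list(model.classes_).index(1)  # column holding P(y = 1)

# predict() returns the class with the highest probability in each row.
from_probs = model.classes_[probs.argmax(axis=1)]
print(np.array_equal(classes, from_probs))

# For a binary problem this is the same as thresholding P(y = 1) at 0.5.
above_half = probs[:, pos_col] > 0.5
print(np.array_equal(classes == 1, above_half))
```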

Thoughts?