Is the model still considered linear

To answer your question, recall the definition of a linear model.

Given a dataset consisting of a vector $\mathbf{x} = (x_1, x_2, \dots, x_n)$ of $n$ explanatory variables and one dependent variable $y$, a linear model assumes that the relationship between $\mathbf{x}$ and $y$ is linear:

$$ y = \beta_0 1 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n + \epsilon$$

where $\beta_0$ is the intercept term and $\epsilon$ is the error term, an unobserved random variable that adds "noise" to the linear relationship.

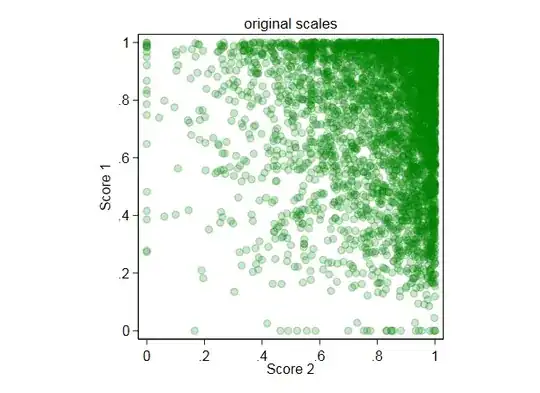

As @Roland points out, the model must be linear in the parameters $\beta_i$, not necessarily in the explanatory variables. So nothing stops you from applying functions to the explanatory variables and then running linear regression on the transformed variables. For example, in your case you could let:

$$ z_1 = x_1, \ z_2 = x_2, \ z_3 = x_1^2, \ z_4 = x_2^2$$

And then perform linear regression on $z_1, \dots, z_4$:

$$ y = \beta_0 1 + \beta_1 z_1 + \beta_2 z_2 + \beta_3 z_3 + \beta_4 z_4 + \epsilon $$

which is equivalent to

$$ y = \beta_0 1 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1^2 + \beta_4 x_2^2 + \epsilon$$
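The substitution above can be sketched in a few lines of NumPy. This is only an illustration on synthetic data: the coefficient values in `beta_true`, the noise level, and the sample size are all made up, and ordinary least squares via `np.linalg.lstsq` stands in for whatever regression routine you actually use. The point is that the design matrix contains squared columns, yet the fit is still an ordinary linear least-squares problem in the $\beta$'s.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Two explanatory variables (synthetic, for illustration only).
x1 = rng.uniform(-2.0, 2.0, size=n)
x2 = rng.uniform(-2.0, 2.0, size=n)

# Hypothetical "true" coefficients: beta_0, ..., beta_4.
beta_true = np.array([1.0, 2.0, -1.0, 0.5, 0.3])

# Design matrix with columns 1, z1 = x1, z2 = x2, z3 = x1^2, z4 = x2^2.
Z = np.column_stack([np.ones(n), x1, x2, x1**2, x2**2])

# Response: linear in the parameters, quadratic in x1 and x2, plus noise.
y = Z @ beta_true + rng.normal(scale=0.1, size=n)

# Ordinary least squares on the transformed variables.
beta_hat, *_ = np.linalg.lstsq(Z, y, rcond=None)
print(np.round(beta_hat, 2))
```

With this little noise, the estimates in `beta_hat` should land close to `beta_true`, even though $y$ is a nonlinear function of $x_1$ and $x_2$.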

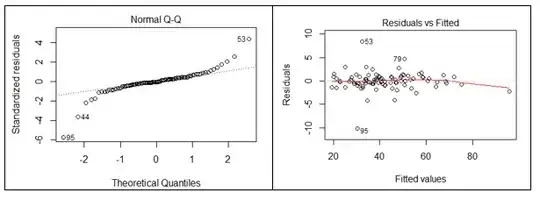

I clearly see an improvement in both assumptions

If your data shows signs of an underlying polynomial relationship between the explanatory variables and the response, then fitting a linear regression model on polynomial terms can improve the fit. As always, this comes with advantages and disadvantages, some of which are discussed in the posts linked below.

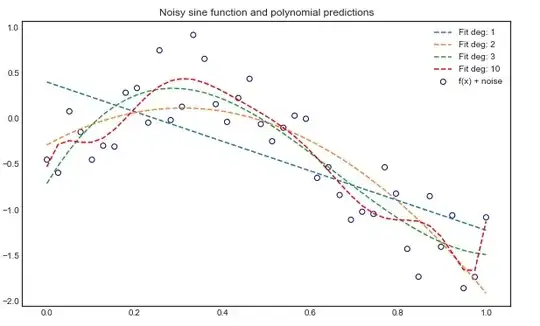

An example

Here is a toy example of fitting a linear regression model to a noisy sine curve. As you may know, the sine function can be approximated by a polynomial (its truncated Taylor series), so intuitively we would expect polynomial regression to do well under certain conditions:
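A minimal sketch of such an experiment, assuming NumPy and a made-up setup (200 evenly spaced points on $[0, 2\pi]$, Gaussian noise with standard deviation 0.2, and polynomial degrees 1, 3 and 5). The helpers `fit_polynomial` and `rmse` are hypothetical names introduced here for illustration. Each fit is plain least squares on the columns $1, x, x^2, \dots$, so it remains a linear model in the coefficients.

```python
import numpy as np

rng = np.random.default_rng(42)

# Noisy observations of one period of a sine curve (synthetic data).
x = np.linspace(0.0, 2.0 * np.pi, 200)
y = np.sin(x) + rng.normal(scale=0.2, size=x.size)


def fit_polynomial(x, y, degree):
    """Least-squares fit of y on the columns 1, x, x^2, ..., x^degree."""
    Z = np.vander(x, degree + 1, increasing=True)  # design matrix
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    return beta, Z @ beta  # coefficients and fitted values


def rmse(a, b):
    """Root-mean-square difference between two arrays."""
    return float(np.sqrt(np.mean((a - b) ** 2)))


# Compare each fit with the noise-free sine curve.
for degree in (1, 3, 5):
    _, fitted = fit_polynomial(x, y, degree)
    print(f"degree {degree}: RMSE vs. true sine = {rmse(fitted, np.sin(x)):.3f}")
```

In this setup the straight-line fit cannot follow the curve, while the higher-degree fits track it much more closely. Raising the degree much further would eventually start fitting the noise, which is one of the disadvantages mentioned above.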

More details

See these excellent posts for more details and explanations