Here is the data set I'm working with:

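I'm trying to find the best possible multiple regression with the column `R` as the dependent variable and the remaining variables as independent variables, using the lasso (`glmnet` with `alpha = 1`) to select the predictors.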

Here's what I did in R:

```r
> trainX <- as.matrix(spxdata[4:11])
> trainY <- spxdata[[3]]
```
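A quick sanity check on how these two lines slice the data, run on a small hypothetical stand-in for `spxdata`. The column names `id1`/`id2` and all values are invented; only the layout (`R` in column 3, the eight predictors in columns 4 to 11) follows the post.

```r
# Hypothetical stand-in for spxdata: id1, id2 and every value are invented;
# only the layout (R in column 3, eight predictors in columns 4 to 11) follows the post.
set.seed(1)
n <- 20
toy <- data.frame(
  id1 = seq_len(n),
  id2 = letters[seq_len(n)],
  R = rnorm(n),
  RE = rnorm(n), VOL260 = rnorm(n), VOL360 = rnorm(n), PE = rnorm(n),
  PX = rnorm(n), FCFY = rnorm(n), GADY = rnorm(n), NDE = rnorm(n)
)

# Single brackets keep a data frame (columns 4..11); as.matrix() turns it into
# the n x p predictor matrix glmnet expects.
trainX <- as.matrix(toy[4:11])
# Double brackets extract column 3 as a plain numeric vector.
trainY <- toy[[3]]

# If any selected column were character or factor, as.matrix() would return a
# character matrix, so check the types before fitting.
stopifnot(is.numeric(trainX), is.numeric(trainY), nrow(trainX) == length(trainY))
dim(trainX)  # 20 rows, 8 predictor columns
```

With the real data, `nrow(trainX)` is also the number that the cross-validation warning depends on.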

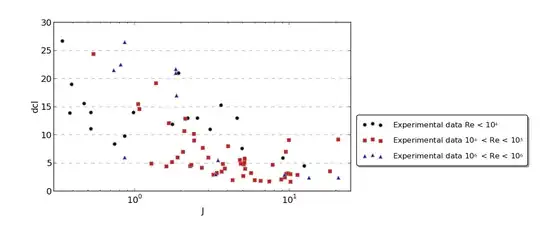

```r
> CV = cv.glmnet(x = trainX, y = trainY, alpha = 1, nlambda = 100)
Warning message:
Option grouped=FALSE enforced in cv.glmnet, since < 3 observations per fold
```
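Going by the message, `cv.glmnet` compares the average number of rows per fold against 3, using its default `nfolds = 10`. The sketch below reproduces that arithmetic in base R for a hypothetical data set of 25 rows (an assumption, not the real row count); it does not call glmnet, and `obs_per_fold_check` is a helper written only for illustration. With `grouped = FALSE`, the CV error is summarized at the observation level instead of as one statistic per fold, so the warning is informational and the fit still runs.

```r
# Sketch of the fold-size check behind the warning, assuming cv.glmnet's default
# nfolds = 10 and a random assignment of rows to folds. 25 rows is hypothetical.
obs_per_fold_check <- function(n_obs, nfolds = 10) {
  # Spread the fold labels 1..nfolds over the rows, then shuffle them
  foldid <- sample(rep(seq_len(nfolds), length.out = n_obs))
  sizes <- tabulate(foldid, nbins = nfolds)
  list(
    avg_per_fold = n_obs / nfolds,
    fold_sizes = sizes,
    grouped_forced_false = n_obs / nfolds < 3
  )
}

set.seed(42)
res <- obs_per_fold_check(25)
res$avg_per_fold           # 2.5
res$fold_sizes             # five folds of 3 rows and five folds of 2 rows
res$grouped_forced_false   # TRUE, so the warning would be issued

# Fewer folds means larger folds: 25 rows in 5 folds gives 5 rows per fold
obs_per_fold_check(25, nfolds = 5)$grouped_forced_false  # FALSE
```

Lowering `nfolds` in the `cv.glmnet` call is one way to get at least 3 rows per fold, although with very few rows every fold stays small.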

```r
> plot(CV)
> fit = glmnet(x = trainX, y = trainY, alpha = 1, lambda = CV$lambda.1se)
```
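`lambda.1se` follows the one-standard-error rule: take the lambda with the lowest mean CV error (`lambda.min`), then move to the largest lambda whose mean error is still within one standard error of that minimum. The base-R sketch below mirrors that rule on hypothetical `cvm`/`cvsd` values, invented to resemble a flat, noisy CV curve from a small sample; `pick_lambdas` is an illustrative helper, not part of glmnet.

```r
# One-standard-error rule, sketched on hypothetical CV results.
pick_lambdas <- function(lambda, cvm, cvsd) {
  cvmin <- min(cvm)
  lambda_min <- max(lambda[cvm <= cvmin])   # lambda with the lowest mean CV error
  idmin <- match(lambda_min, lambda)
  threshold <- cvm[idmin] + cvsd[idmin]     # minimum error plus one standard error
  lambda_1se <- max(lambda[cvm <= threshold])  # largest lambda still under it
  c(lambda.min = lambda_min, lambda.1se = lambda_1se)
}

lambda <- c(0.50, 0.30, 0.20, 0.10, 0.05, 0.01)  # decreasing, like a glmnet path
cvm    <- c(1.10, 1.08, 1.05, 1.02, 1.04, 1.09)  # mean CV error per lambda
cvsd   <- c(0.15, 0.15, 0.14, 0.14, 0.15, 0.16)  # standard error of cvm

pick_lambdas(lambda, cvm, cvsd)  # lambda.min = 0.10, lambda.1se = 0.50
```

Here the error bars are wide relative to the dip in the curve, so `lambda.1se` lands on the first (largest) lambda. If these were glmnet's own lambdas, that first value would be the path's starting point, which the glmnet documentation describes as the smallest lambda at which all coefficients are zero, so the 1-SE fit would be intercept-only. `coef(CV, s = "lambda.min")` shows what the less conservative choice keeps.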

```r
> fit$beta[,1]
    RE VOL260 VOL360     PE     PX   FCFY   GADY    NDE
     0      0      0      0      0      0      0      0
```
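For the lasso objective `(1/(2n)) * ||y - X b||^2 + lambda * ||b||_1` (the Gaussian form in glmnet's documentation with `alpha = 1`), with standardized predictors and a centered response, `b = 0` is optimal whenever `lambda >= lambda_max = max_j |x_j' y| / n`. The sketch below is a minimal coordinate-descent lasso in base R on simulated data; `soft_threshold`, `standardize`, `lasso_cd` and the data are hypothetical, and this is not glmnet's implementation. It shows every coefficient at exactly zero at `lambda_max` and at least one predictor entering below it.

```r
# Minimal lasso via cyclic coordinate descent (illustration only, not glmnet's code).
# Objective: (1/(2n)) * sum((y - X %*% b)^2) + lambda * sum(abs(b)),
# with X standardized and y centered so no intercept is needed.
soft_threshold <- function(z, g) sign(z) * pmax(abs(z) - g, 0)

standardize <- function(X) {
  n <- nrow(X)
  Xc <- sweep(X, 2, colMeans(X))        # center each column
  s <- sqrt(colSums(Xc^2) / n)          # 1/n scaling: each column gets mean square 1
  sweep(Xc, 2, s, "/")
}

lasso_cd <- function(X, y, lambda, iters = 500) {
  n <- nrow(X)
  b <- numeric(ncol(X))
  r <- y                                # residual when all coefficients are 0
  for (it in seq_len(iters)) {
    for (j in seq_len(ncol(X))) {
      r <- r + X[, j] * b[j]            # partial residual excluding predictor j
      z <- sum(X[, j] * r) / n          # inner product with column j, divided by n
      b[j] <- soft_threshold(z, lambda) # denominator mean(X[, j]^2) is 1 here
      r <- r - X[, j] * b[j]
    }
  }
  b
}

set.seed(7)
n <- 25
X <- standardize(matrix(rnorm(n * 8), n, 8))
y <- 0.3 * X[, 1] + rnorm(n)            # weak signal plus noise
y <- y - mean(y)

# Smallest lambda at which b = 0 satisfies the lasso optimality conditions
lambda_max <- max(sapply(seq_len(ncol(X)), function(j) abs(sum(X[, j] * y)) / n))

b_at_max <- lasso_cd(X, y, lambda_max)
b_below  <- lasso_cd(X, y, 0.5 * lambda_max)
b_at_max            # all eight coefficients are zero
sum(b_below != 0)   # at least one predictor enters below lambda_max
```

So an all-zero `fit$beta` is what the lasso returns whenever the chosen lambda sits at or above this entry value.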

And here's the CV plot:

Why is there a warning message and why are all the fitted coefficients zero?