I already posted this question on Stack Overflow (https://stackoverflow.com/questions/49298634/how-to-interpret-results-of-auto-arima-in-r), but someone suggested I ask it here instead. I would appreciate any relevant help.

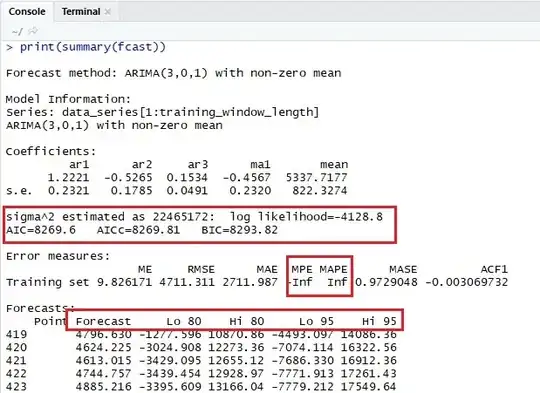

Suppose I have a time series `X`. I fit a model with `fit <- auto.arima(X)` and then forecast with `fcast <- forecast(fit, h=100)`. When I run `print(summary(fcast))`, I get output containing a number of quantities (a snapshot of an example is attached).
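For reference, the steps can be reproduced roughly as follows. This is only a minimal sketch: the built-in `AirPassengers` series is a placeholder standing in for my actual series `X`, so its numbers will not match the snapshot.

```r
library(forecast)

# Placeholder data (an assumption): AirPassengers stands in for my series X
X <- AirPassengers

# Let auto.arima() choose the ARIMA orders automatically
fit <- auto.arima(X)

# Forecast 100 steps ahead; by default this gives 80% and 95% intervals
fcast <- forecast(fit, h = 100)

# Prints the fitted model, the training-set error measures (ME, RMSE, MAE,
# MPE, MAPE, ...) and the forecast table with the Lo/Hi columns
print(summary(fcast))
```

The `Lo 80`, `Hi 80`, `Lo 95`, and `Hi 95` columns in the printed table correspond to `fcast$lower` and `fcast$upper`.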

- What is the meaning of each quantity (especially those highlighted in the red boxes)? It would be great if someone could explain them in simple terms.

- What does it mean to get `-Inf` and `Inf` for `MPE` and `MAPE`, respectively?

- What is the meaning of `Lo 80`, `Hi 80`, `Lo 95`, and `Hi 95`? Can I say that the actual value is 80% likely to equal `Forecast` + `Lo 80` + `Hi 80`?