I am really don't know what I am missing. So, please help me.

I am doing a time series analysis. What I want to do is find the predicted values.

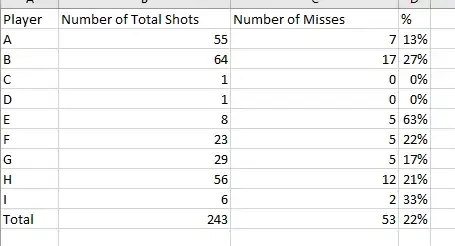

Here is my data.

848852 705558 829983 761070 826599 795067 840063 764453 885627 797778 781298 915712 810750 701044

I know this is a really small sample to doing time series analysis. But I tried.

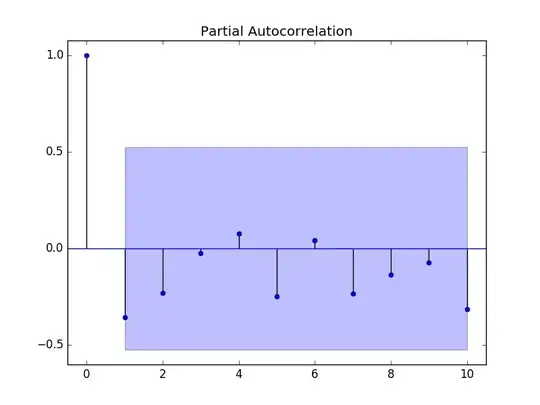

I did it unit root test. Through that, I found my data is stationary data.

And I couldn't find seasonal after checking the plot.

Actually, I don't know how to find a seasonal unit root using r. I just guess checking the graph. So, if you know how to find the seasonal unit root, let me know. I would really appreciate it.

Anyway, I got the predicted values using the Arima model. And the values I get from the Arima model is following.

852427.0 785296.3 815065.3 801864.3 807718.3 805122.3 806273.5 805763.0

805989.4 805889.0 805933.5 805913.8 805922.5 805918.6 805920.4 805919.6

805919.9 805919.8 805919.9 805919.8 805919.8 805919.8 805919.8 805919.8

As you can see, from the 20 value, the values are the same. Why does this problem happen?

What should I do to solve this problem??

To sum up, Here is my question.

- Q1. What is the minimum sample size to do a time series analysis?

- Q2. To find the seasonal unit root, how can I do it in R?

- Q3. Why do I get the same predicted values? To solve this problem, what should I do?

P.S. Here is my R code.

a1 <- scan()

848852 705558 829983 761070 826599 795067 840063 764453 885627 797778 781298 915712 810750 701044

library(tseries)

library(astsa)

library(forecast)

plot.ts(a1)

adf.test(a1)

acf2(a1)

result = matrix(NA, 324, 2)

air <- 0

num <- 1

for(i in 0:5) {

for(j in 0:5) {

tryCatch({

ari <- Arima(a1, c(i, 0, j))

}, error = function(e){})

result[num, 1] <- ari$aic

result[num, 2] <- ari$aicc

num <- num+1

}

}

which.min(result[,1])

where <- data.frame(rep(0:5, each=6), rep(0:5))

where[2,] # the result is (0,1)

ari1 <- Arima(a1, c(0, 0, 1))

pre <- predict(ari1, n.ahead = 12*2)

pre$pred