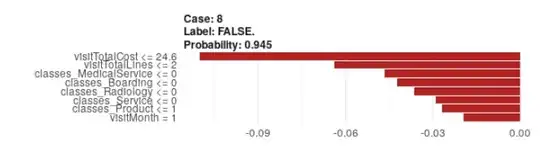

I am working in R creating a GBM model using H2O and trying to use LIME to look at some local explanations to get a feel for what the model is doing. It's a binary classifier and I'm specifying 8 for n_features to the LIME package. However, I keep running into situations where all or most the 8 features are showing as contradicting the highest probability class. The funny thing is the predicted probability of the class is in the 90's.

How would one interpret this? Is there a problem in the LIME package implementation?