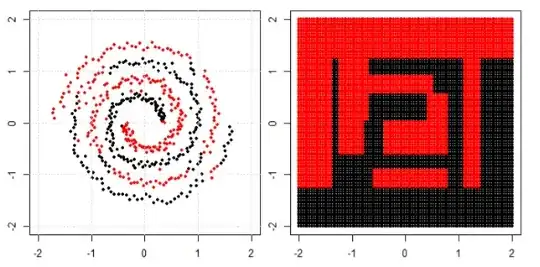

Assume that there is an artificial dataset that allows perfect (linear) separation into good and bad clients. Why is it that a method such as xgboost is not able to identify the perfect decision boundary?

On the left is the sample data, which consists of ~10,000 data points that can be separated trivially into good and bad. The plot on the right shows the forecasts of the xgboost model. Why can the model not identify the diagonal as a perfect linear separator?
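As a quick sanity check of the premise, the following base-R sketch rebuilds the grid and confirms that a single linear rule reproduces the labels exactly. The names `grid` and `linear_rule` are illustrative and not part of the original code.

```r
# Rebuild the artificial grid: label 1 ("Good") when y < x, else 0 ("Bad").
x <- y <- seq(0.01, 0.99, 0.01)
grid <- expand.grid(x = x, y = y)
grid$target <- ifelse(grid$y < grid$x, 1, 0)

# 99 values per axis give 99 * 99 = 9801 points.
n_points <- nrow(grid)

# Illustrative linear rule: predict "Good" when the score x - y is positive.
linear_rule <- as.numeric(grid$x - grid$y > 0)

# TRUE if the linear rule matches every label, i.e. the classes are
# perfectly separated by the diagonal.
perfectly_separable <- all(linear_rule == grid$target)
print(c(n_points = n_points, perfectly_separable = perfectly_separable))
```

Points exactly on the diagonal (y equal to x) fall into the "Bad" class under this labelling.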

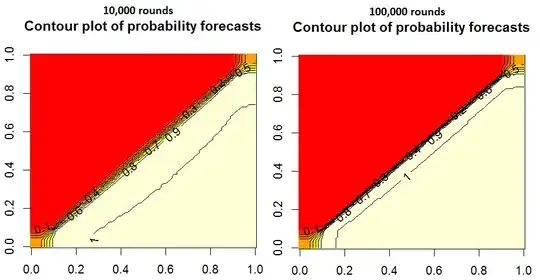

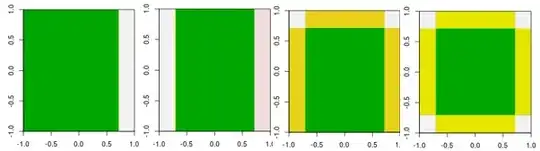

Edit: Following the comments, I reran the code with nrounds set to 10,000 and 100,000. This is the result:

    rm(list = ls())
    library(tidyverse)
    library(xgboost)

    # Generate input data: a 99 x 99 grid, labelled "Good" below the diagonal (y < x)
    x <- y <- seq(0.01, 0.99, 0.01)
    inputtable_xgb <- expand.grid(x = x, y = y) %>%
      mutate(target = ifelse(y < x, 1, 0),
             `Target Label` = ifelse(y < x, "Good", "Bad"))

    # xgboost
    set.seed(1)
    dtrain <- xgb.DMatrix(data.matrix(inputtable_xgb[, c("x", "y")]),
                          label = inputtable_xgb[, "target"], missing = -999)
    param <- list(objective = "binary:logistic", min_child_weight = 15, eta = 0.05,
                  max_depth = 10, subsample = 0.75, colsample_bytree = 0.75,
                  eval_metric = "auc", nthread = 4)
    # No early stopping is used, so predictions below use all 100 trees
    clf <- xgb.train(params = param, data = dtrain, nrounds = 100, verbose = 1)

    # Fill a matrix with forecast values (rows index x, columns index y)
    values <- seq(0, 1, by = 0.01)
    stepsize <- length(values)
    example_data <- expand.grid(x = values, y = values)
    dtest <- xgb.DMatrix(data.matrix(example_data), missing = -999)
    contour_xgb <- matrix(predict(clf, dtest), nrow = stepsize, ncol = stepsize)

    # Generate plots for the input data and the model forecasts
    inputtable_xgb %>%
      ggplot(aes(x = x, y = y, color = `Target Label`)) +
      geom_point() +
      labs(title = "Artificial data of good and bad clients")

    image(contour_xgb, main = "Contour plot of probability forecasts", xlab = "x", ylab = "y")
    contour(contour_xgb, add = TRUE, labcex = 1)
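The rerun with a larger number of boosting rounds mentioned in the edit could be sketched roughly as follows. This is only an illustration under stated assumptions: `training_error` is a hypothetical helper, the 0.5 cutoff is my choice, and the round counts are kept small so the sketch finishes quickly (substitute 10,000 or 100,000 to mirror the edit).

```r
library(xgboost)

# Same artificial grid as above: label 1 ("Good") when y < x.
x <- y <- seq(0.01, 0.99, 0.01)
grid <- expand.grid(x = x, y = y)
grid$target <- as.numeric(grid$y < grid$x)

dtrain <- xgb.DMatrix(data.matrix(grid[, c("x", "y")]), label = grid$target)

# Same hyperparameters as in the original call.
param <- list(objective = "binary:logistic", min_child_weight = 15, eta = 0.05,
              max_depth = 10, subsample = 0.75, colsample_bytree = 0.75,
              nthread = 4)

# Hypothetical helper: share of training points misclassified at a 0.5 cutoff
# after fitting with the given number of boosting rounds.
training_error <- function(nrounds) {
  set.seed(1)
  clf <- xgb.train(params = param, data = dtrain, nrounds = nrounds, verbose = 0)
  mean((predict(clf, dtrain) > 0.5) != grid$target)
}

# Assumed round counts, chosen small for speed.
rounds <- c(10, 100, 1000)
errors <- sapply(rounds, training_error)
print(data.frame(nrounds = rounds, training_error = errors))
```

Comparing the error across rows gives a rough sense of whether more rounds alone move the fitted boundary closer to the diagonal.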