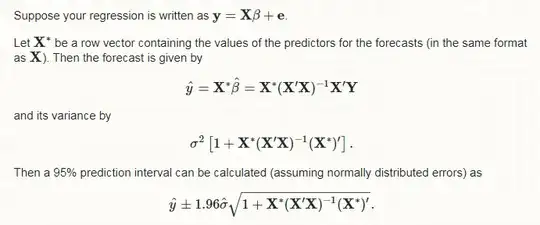

I've always found a mystery, what seems a first glance a pretty simple equation, I know this is an old post (2017 Edited, 2020 New Comment), but I'd like to add my take to explaining the justification for the equations for the LM variance.

Explanation below:

Let the linear model of the type be $Y = X\beta + \epsilon_{model}$, where the OLS minimization for the weights can be obtained as:

$$\hat{\beta\;} = (X^{T}X)^{-1}X^{T}Y$$

Thus the fitted values for the model could be obtained as:

$$Y_{\text{fit}} = X\hat{\beta\;} = X(X^{T}X)^{-1}X^{T}Y$$

Therefore the explained variable $Y = Y_{\text{fit}} + \epsilon$ and the variance of the variable will be:

$$\text{Var}(Y) = \text{Var}(Y_{\text{fit}} + \epsilon)$$

If we assume the fitted values mustn't have any correlation with the errors (They are unexplained variance), then the following result applies $\text{Covar}(Y_{\text{fit}},\epsilon) = 0$, therefore:

$$\text{Var}(Y) = \text{Var}(Y_{\text{fit}} + \epsilon) = \text{Var}(Y_{\text{fit}}) + \text{Var}(\epsilon)$$

And given the "fitted values", aren't a random variable, their variance will be zero $\text{Var}(Y_{\text{fit}}) = 0$, thus:

$$\text{Var}(Y) = \text{Var}(\epsilon) = \sigma_{res}^{2}I$$

Assuming there is uncertainty in the predicted coefficients, what would be the uncertainty in the prediction? (again assuming no correlation between predictions and errors).

$$\text{Var}(y_{pred}) = \text{Var}(\hat{y} + \epsilon) = \text{Var}(\hat{y}) + \text{Var}(\epsilon)$$

From previous deduction we know $\text{Var}(\epsilon) = \sigma_{res}^{2}I$, so it only remains to assess:

$$\text{Var}(\hat{y}) = E[\hat{y}\,\hat{y}^{T}] - E[\hat{y}]E[\hat{y}^{T}]$$

We've defined $$\hat{y} = X\hat{\beta\;}$$

Therefore,

\begin{align}

\text{Var}(\hat{y}) &= E[X\hat{\beta\;}\hat{\beta\;}^{T}X^{T}] - E[X\hat{\beta\;}]E[\hat{\beta\;}^{T}X^{T}]\\

& = X\,E[\hat{\beta\;}\hat{\beta\;}^{T}]\,X^{T} - X\,E[\hat{\beta\;}]E[\hat{\beta\;}^{T}]\,X^{T}\\

& = X\,(E[\hat{\beta\;}\hat{\beta\;}^{T}] - E[\hat{\beta\;}]E[\hat{\beta\;}^{T}])\,X^{T}\\

& = X\,\text{Var}(\hat{\beta\;})\,X^{T}\\

\\

\text{Var}(\hat{\beta\;}) &= E[\hat{\beta\;}\hat{\beta\;}^{T}] - E[\hat{\beta\;}]E[\hat{\beta\;}^{T}]\\

&=E[(X^{T}\,X)^{-1}\,X^{T}\,Y\,Y^{T}\,X\,(X\,X^{T})^{-1}] - E[(X^{T}\,X)^{-1}\,X^{T}\,Y]E[Y^{T}\,X\,(X\,X^{T})^{-1}]\\

&=(X^{T}\,X)^{-1}\,X^{T}\,(E[Y\,Y^{T}] - E[Y]E[Y^{T}])\,X\,(X\,X^{T})^{-1}\\

&=(X^{T}\,X)^{-1}\,X^{T}\,\text{Var}(Y)\,X\,(X\,X^{T})^{-1}\\

&=(X^{T}\,X)^{-1}\,X^{T}\, \sigma_{res}^{2}I\,X\,(X\,X^{T})^{-1}\\

&=\sigma_{res}^{2}(X^{T}\,X)^{-1}\,X^{T}\,X\,(X\,X^{T})^{-1}\\

&=\sigma_{res}^{2}\,(X\,X^{T})^{-1}\\

\\

\text{Var}(\hat{y}) & = X\,\text{Var}(\hat{\beta\;})\,X^{T}\\

& = X\,\sigma_{res}^{2}\,(X\,X^{T})^{-1}\,X^{T}\\

& = \sigma_{res}^{2}X\,(X\,X^{T})^{-1}\,X^{T}\\

\end{align}

Consequently:

\begin{align}

\text{Var}(y_{pred}) &= \text{Var}(\hat{y}) + \text{Var}(\epsilon)\\

&= \sigma_{res}^{2}X\,(X\,X^{T})^{-1}\,X^{T} + \sigma_{res}^{2}I\\

&= \sigma_{res}^{2}(X\,(X\,X^{T})^{-1}\,X^{T} + I)

\end{align}

And for future predicted values:

$$\text{Var}(y_{fcast}) = \sigma_{res}^{2}(X^{*}\,(X\,X^{T})^{-1}\,X^{*T} + I)$$

Supporting Results:

\begin{align}

\text{Var}(X) &= E[XX^{T}] - E[X]^{2}\\

\text{Covar}(X,Y) &= E[XY^{T}] - E[X]E[Y]\\

\text{Var}(X \pm Y)&= \text{Var}(X) +\text{Var}(Y) \pm2\text{Covar}(X,Y)\\

\text{Corr}(X,Y) &= \dfrac{\text{Covar}(X,Y)}{\sigma_{X}\sigma_{Y}}

\end{align}

\begin{align}

\text{Var}(X+Y) &= E[((X+Y) - E[(X+Y)])^{2}]\\

&= E[((X+Y) - E[(X+Y)]) ((X+Y) - E[(X+Y)])^{T}]\\

&= E[((X+Y) - E[X]-E[Y])((X+Y)^{T} - E[X]^{T}-E[Y]^{T})]\\

&= E[(X +Y -\bar{X} -\bar{Y})(X^{T}+Y^{T} - \bar{X}^{T}-\bar{Y}^{T})]\\

&= E[XX^{T}+XY^{T} - X\bar{X}^{T}-X\bar{Y}^{T} + YX^{T}+YY^{T} - Y\bar{X}^{T}-Y\bar{Y}^{T} \\

& -\bar{X}X^{T}-\bar{X}Y^{T} +\bar{X}\bar{X}^{T} +\bar{X}\bar{Y}^{T}-\bar{Y}X^{T}-\bar{Y}Y^{T} +\bar{Y}\bar{X}^{T} +\bar{Y}\bar{Y}^{T}]\\

&= E[XX^{T}]+E[XY^{T}] - \bar{X}^{2}-\bar{X}\bar{Y} + E[YX^{T}]+E[YY^{T}] - \bar{Y}\bar{X}-\bar{Y}^{2} \\

& -\bar{X}^{2}-\bar{X}\bar{Y} +\bar{X}^{2} +\bar{X}\bar{Y}-\bar{Y}\bar{X}-\bar{Y}^{2} +\bar{Y}\bar{X} +\bar{Y}^{2}\\

&= E[XX^{T}] - \bar{X}^{2} +E[YY^{T}]-\bar{Y}^{2} +2(E[XY^{T}] -\bar{X}\bar{Y})\\

&= \text{Var}(X) +\text{Var}(Y) +2\text{Covar}(X,Y)

\end{align}

Supporting Algebra results:

$$X\,A\,X^{T} \pm X\,B\,X^{T} = X\,(A\pm B)\,X^{T} $$