I am trying to build a basic content-based recommender engine based on movie genres, using the MovieLens data set. Some of the movies have more than two genres.

Part of my data:

    head(genre_matrix2,15)

       Action Adventure Animation Children Comedy
    1       0         1         1        1      1
    2       0         1         0        1      0
    3       0         0         0        0      1
    4       0         0         0        0      1
    5       0         0         0        0      1
    6       1         0         0        0      0
    7       0         0         0        0      1
    8       0         1         0        1      0
    9       1         0         0        0      0
    10      1         1         0        0      0
    11      0         0         0        0      1
    12      0         0         0        0      1
    13      0         1         1        1      0
    14      0         0         0        0      0

For example, the first movie belongs to Adventure, Animation, Children and Comedy genres.

There are 18 genres in total, and some movies share the same combination of genres. I want to use cluster analysis to group the movies into a smaller number of more precise categories. For example, if three movies all have the Comedy, Action and Romance genres, they are likely to belong to the same category.
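To make the "same genres matched" idea concrete, here is a minimal sketch that counts how many movies share exactly the same genre combination. It runs on a small made-up matrix (`toy`, hypothetical values for 5 of the 18 genres) rather than the real `genre_matrix2`; the names `toy`, `genre_key` and `combo_counts` are illustrative only.

```r
# Toy stand-in for genre_matrix2 (hypothetical values, 5 of the 18 genres)
toy <- data.frame(
  Action    = c(0, 0, 0, 1, 0, 1),
  Adventure = c(1, 1, 0, 0, 1, 0),
  Animation = c(1, 0, 0, 0, 0, 0),
  Children  = c(1, 1, 0, 0, 1, 0),
  Comedy    = c(1, 0, 1, 0, 0, 0)
)

# Collapse each row of 0/1 flags into a single key such as "01010"
genre_key <- apply(toy, 1, paste, collapse = "")

# Number of movies per distinct genre combination, largest first
combo_counts <- sort(table(genre_key), decreasing = TRUE)
print(combo_counts)
```

If only a modest number of distinct combinations exist in the full data, many movies sit at exactly the same point in genre space, which may be worth knowing before interpreting any clustering result.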

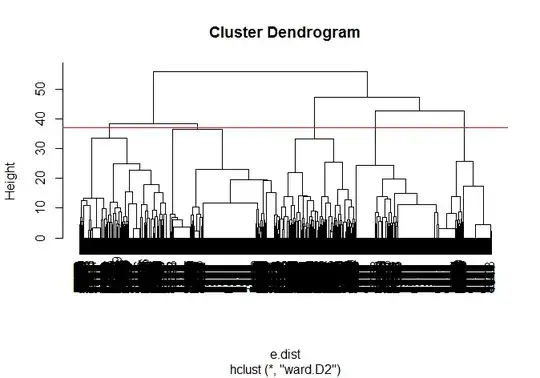

I used hierarchical cluster analysis:

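    # Euclidean distances between movies (rows of the genre matrix)
    e.dist <- dist(genre_matrix2, method="euclidean")

    # Default linkage (hclust uses "complete" unless told otherwise)
    h.E.cluster <- hclust(e.dist)
    plot(h.E.cluster)

    # Ward linkage
    h.cluster <- hclust(e.dist, method="ward.D2")
    plot(h.cluster)

    # Cut the Ward tree into 5 clusters and count the movies in each
    cut.h.cluster <- cutree(h.cluster, k=5)
    table(cut.h.cluster)

       1    2    3    4    5
    1675 1159 1844 2001 2446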

Then I used the elbow method to check whether 5 is the optimal number of clusters:

    library(factoextra)  # provides fviz_nbclust() and hcut()

    fviz_nbclust(genre_matrix2,
                 FUNcluster = hcut,
                 method = "wss",
                 k.max = 18
    ) +
      labs(title="Elbow Method for HC") +
      geom_vline(xintercept = 5,
                 linetype = 2)
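For reference, the quantity on the y-axis of an elbow plot is the total within-cluster sum of squares (WSS) for each number of clusters. The base-R sketch below computes it by hand for a Ward tree, assuming Euclidean distance as in the code above. It uses a randomly generated binary matrix (`toy`, hypothetical data) instead of `genre_matrix2`, and `total_wss` is a helper name made up for this illustration, so it only approximates what `fviz_nbclust` does internally.

```r
# Hypothetical toy binary genre matrix; substitute genre_matrix2 for real use
set.seed(1)
toy <- matrix(rbinom(200 * 5, 1, 0.3), ncol = 5)

# Ward hierarchical clustering on Euclidean distances
hc <- hclust(dist(toy, method = "euclidean"), method = "ward.D2")

# Total within-cluster sum of squares for a given cluster assignment:
# squared deviations of each row from its cluster's column means
total_wss <- function(x, cl) {
  sum(sapply(split(as.data.frame(x), cl), function(g) {
    sum(scale(g, center = TRUE, scale = FALSE)^2)
  }))
}

# WSS for k = 1..10 clusters, obtained by cutting the same tree
wss <- sapply(1:10, function(k) total_wss(toy, cutree(hc, k = k)))

plot(1:10, wss, type = "b",
     xlab = "Number of clusters k", ylab = "Total within-cluster SS")
```

Because the clusters for each k come from cutting one nested tree, WSS can only stay the same or decrease as k grows; an "elbow" is a k after which the decrease becomes visibly smaller.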

There is no elbow in this plot. Does that mean I shouldn't do a cluster analysis on this data? Any suggestions are welcome!