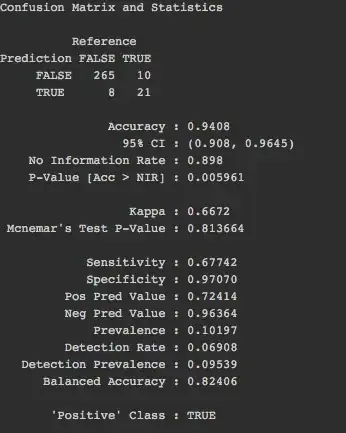

I get to your main question in the second paragraph, first a more general remark: You probably already know that you should not be optimizing accuracy with a data-set that has this level of class imbalance because there is probably also classification cost imbalance. (You imply that there is when you say you are mainly interested in the true class. If there isn't, then the problem is trivial and you can in your case have almost 90% accuracy without even constructing a classifier.) Most likely, a true record predicted as false is a bigger problem than the other way around. It is more important to identify the few true records and it can be tolerated to identify some more false records as true to get there. As you said, tree based algorithms provide per predicted record a probability that it belongs to the class true which you call $p$. You are not forced to put the cutoff at $p=0.5$, you can put it lower to make sure you don't miss the few true records. The relative misclassification costs between false positives and false negatives, as determined by your application scenario, can tell you where to put the cutoff.

To understand what this number $p$ means and what can be done with it, we need to look at the tree classifier. All your training set records have started at the tree's root, branched multiple times according to rules that the algorithm finds in the data and end up in a leaf. The goal is to have relatively pure leaves, each leaf being dominated by records of one class. (You could have perfectly pure leaves if you just keep branching, but you would be overfitting on your training set.) $p$ is the proportion of records in that leaf that are of the class you are trying to identify. This number loosely follows a frequentist interpretation of probabilities: If the record is part of this leaf, it has x out of y chances of being that class.

A forest is just a collection of trees that have been randomized (the variables to branch on are chosen randomly rather than to optimize gains in purity) and left unpruned (you branch till all leaves are pure). Each record is rooted through all the trees in the forest. $p$ is computed slightly differently here: It denotes the proportion of trees in the forest where this record ends up in a leaf dominated by this class. The probability within each tree would be binary since the leaves are pure in the absence of pruning. It only makes sense to compute $p$ on forest level.

Knowing how those values of $p$ are derived, I don't see how it would be possible to construct confidence intervals around every single one of them. Even if it was possible, it wouldn't be very practical, you would have one CI per record in your production set.

I also don't think you can or should make the kind of pronouncements you want to make on a data-set level ("With 95% probability, 20-25% of items in the production set are TRUE, 75-80% are FALSE"). Because of your class imbalance, you don't want to find out how many records exactly are true (according to a 0.5 cutoff), you want to bias that number upwards (by lowering the cutoff) so that you don't miss the true records. What you should do is determine your misclassification costs, put them in a loss function and then optimize that.

Also, once that you have chosen your model based on test set performance, you can retrain that same model (same number of trees in the forest same type of randomization etc.) with all the labeled data you have available. It was good to set a test set aside in order to choose a model. Once that is done, you want to use all the information you have to train a model which you will use for your unlabeled production set.