I'm working on a project from Udacity's ml nd, finding donors,

I'm making the initial test using three algorithms:

LogisticRegression -> RED

GaussianNB -> Green

AdaBoostClassifier -> Blue

This is the result I'm getting:

I wonder why nb has such a poor performance. This is some informations regarding the dataset:

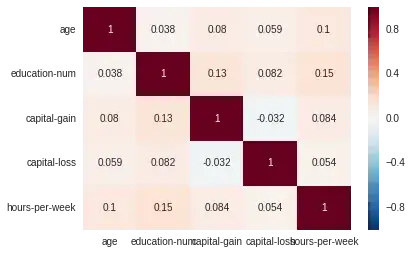

1) Initial numerical features are not highly correlated:

2) There are categorical features on that were encoded increasing the number of features up to 100 and making the dataset more sparse.

Edit:

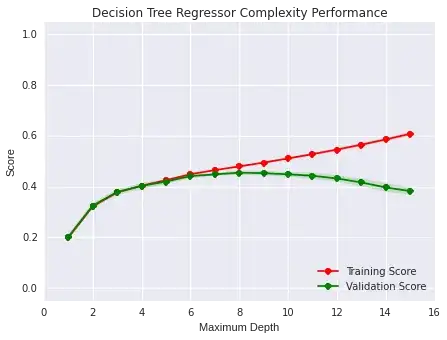

I also tried using decision Trees,these has a poor performace too, best case is around 0.45 for depth 8 over that it starts presenting a high variance, which can explain why Adaboost works well on this model since it's main advantage is it's capacity for improving the variance issue.

I still have the doubt of why NB and DT have such a poor performance on this dataset compared with the logistic regression which is a simple model too.

EDIT:

This is the code I have used

from sklearn.linear_model import LogisticRegression

# Import the three supervised learning models from sklearn

from sklearn.naive_bayes import GaussianNB

from sklearn.tree import DecisionTreeClassifier

from sklearn.svm import SVC

from sklearn.ensemble import AdaBoostClassifier

# Initialize the three models

clf_A = LogisticRegression(penalty='l2')

clf_B = GaussianNB()

clf_C = AdaBoostClassifier()

# Calculate the number of samples for 1%, 10%, and 100% of the training data

def get_sample_size(percentage):

return int((float(percentage)/100)*X_train.shape[0])

samples_1 = get_sample_size(1.0)

samples_10 = get_sample_size(10.0)

samples_100 = get_sample_size(100.0)

# Collect results on the learners

results = {}

for clf in [clf_A, clf_B, clf_C]:

clf_name = clf.__class__.__name__

results[clf_name] = {}

for i, samples in enumerate([samples_1, samples_10, samples_100]):

results[clf_name][i] = \

train_predict(clf, samples, X_train, y_train, X_test, y_test)

# Run metrics visualization for the three supervised learning models chosen

vs.evaluate(results, accuracy, fscore)

def train_predict(learner, sample_size, X_train, y_train, X_test, y_test):

'''

inputs:

- learner: the learning algorithm to be trained and predicted on

- sample_size: the size of samples (number) to be drawn from training set

- X_train: features training set

- y_train: income training set

- X_test: features testing set

- y_test: income testing set

'''

results = {}

# TODO: Fit the learner to the training data using slicing with 'sample_size' using .fit(training_features[:], training_labels[:])

start = time() # Get start time

learner.fit(X_train[:sample_size], y_train[:sample_size])

end = time() # Get end time

# TODO: Calculate the training time

results['train_time'] = end - start

# TODO: Get the predictions on the test set(X_test),

# then get predictions on the first 300 training samples(X_train) using .predict()

start = time() # Get start time

predictions_test = learner.predict(X_test)

predictions_train = learner.predict(X_train[0:300])

end = time() # Get end time

# TODO: Calculate the total prediction time

results['pred_time'] = end - start

# TODO: Compute accuracy on the first 300 training samples which is y_train[:300]

results['acc_train'] = accuracy_score(y_train[0:300],predictions_train)

# TODO: Compute accuracy on test set using accuracy_score()

results['acc_test'] = accuracy_score(y_test,predictions_test)

# TODO: Compute F-score on the the first 300 training samples using fbeta_score()

results['f_train'] = fbeta_score(y_test,predictions_test,0.5)

# TODO: Compute F-score on the test set which is y_test

results['f_test'] = fbeta_score(y_test,predictions_test,0.5)

# Success

print "{} trained on {} samples.".format(learner.__class__.__name__, sample_size)

# Return the results

return results

The dataset is available here: