A naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem with strong independence assumptions. A more descriptive term for the underlying probability model would be "independent feature model".

Questions about using, optimizing, or interpreting a naive Bayes classifier should use this tag.

A naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem with strong independence assumptions. A more descriptive term for the underlying probability model would be "independent feature model".

In simple terms, a naive Bayes classifier assumes that the presence or absence of a particular feature is unrelated to the presence or absence of any other feature, given the class variable. For example, a fruit may be considered to be an apple if it is red, round, and about 3" in diameter. A naive Bayes classifier considers each of these features to contribute independently to the probability that this fruit is an apple, regardless of the presence or absence of the other features.

For some types of probability models, naive Bayes classifiers can be trained very efficiently in a supervised learning setting. In many practical applications, parameter estimation for naive Bayes models uses the method of maximum likelihood; in other words, one can work with the naive Bayes model without accepting Bayesian probability or using any Bayesian methods.

Despite their naive design and apparently oversimplified assumptions, naive Bayes classifiers have worked quite well in many complex real-world situations. In 2004, an analysis of the Bayesian classification problem showed that there are sound theoretical reasons for the apparently implausible efficacy of naive Bayes classifiers [(Zhang, 2004)]. Still, a comprehensive comparison with other classification algorithms in 2006 showed that Bayes classification is outperformed by other approaches, such as boosted trees or random forests [(Caruana & Niculescu–Mizil, 2006)].

An advantage of naive Bayes is that it only requires a small amount of training data to estimate the parameters (means and variances of the variables) necessary for classification. Because independent variables are assumed, only the variances of the variables for each class need to be determined and not the entire covariance matrix.

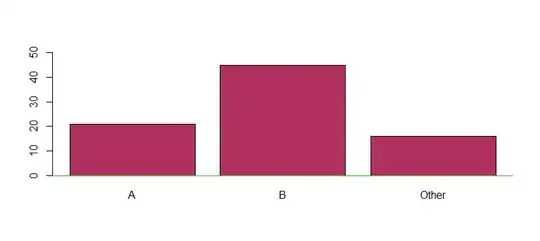

We can visualize a naive Bayes graphically as follows:

In this Bayesian network, predictive attributes $X_i$ are conditionally independent given the class $C$.

References:

Caruana, R., & Niculescu–Mizil, A. (2006). An empirical comparison of supervised learning algorithms. Proceedings of the 23rd International Conference on Machine Learning, 161–168. Available online, URL: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.122.5901.

Domingos, P. & Pazzani, M. (1997). On the optimality of the simple Bayesian classifier under zero-one loss. Machine Learning, 29, 103–137.

Metsis, V., Androutsopoulos, I., & Paliouras, G. (2006). Spam filtering with naive Bayes—which naive Bayes? Third Conference on Email and Anti-Spam (CEAS), 17.

Rennie, J., Shih, L., Teevan, J., & Karger, D. (2003). Tackling the poor assumptions of naive Bayes classifiers. Proceedings of the Twentieth International Conference on Machine Learning. Available online, URL: http://people.csail.mit.edu/jrennie/papers/icml03-nb.pdf.

Rish, I. (2001). An empirical study of the naive Bayes classifier. IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence. Available online, URL: http://www.research.ibm.com/people/r/rish/papers/RC22230.pdf.

Zhang, H. (2004). The optimality of naive Bayes. FLAIRS2004 conference. American Association for Artificial Intelligence. Available online, URL: http://www.cs.unb.ca/profs/hzhang/publications/FLAIRS04ZhangH.pdf.