I am new to machine learning and recently completed Andrew Ng's course on Coursera (very good, by the way). This question is software-independent. When you draw a learning curve, do you plot the training error and the cross-validation (CV) error, using whatever metric you choose (such as RMSE or $R^2$ for linear regression), as a function of the training set size? Or do you instead plot the training error and the test error as a function of the training set size? I have seen a lot of people plot the learning curve with the test error, whereas Andrew Ng's course plots it with the CV error.

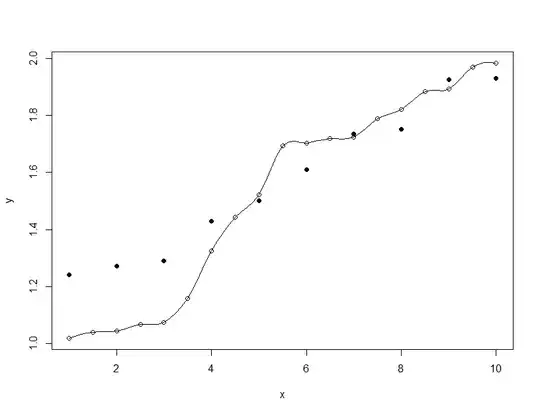

As an example, I have attached a curve that I produced a few months ago using Python.
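To make the question concrete, here is a minimal sketch of the first variant (training error and CV error versus training set size). It is not the code behind the attached plot. The synthetic data, the 200/50/50 split, and the helper names `fit_line`, `rmse`, and `learning_curve_points` are all assumptions chosen for illustration, and it uses only the standard library so that it stays software-independent.

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (closed form, one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def rmse(model, xs, ys):
    """Root mean squared error of the fitted line on the given examples."""
    a, b = model
    return math.sqrt(sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


def learning_curve_points(train, held_out, sizes):
    """For each size m, fit on the first m training examples and return
    (m, RMSE on those m examples, RMSE on the whole held-out set)."""
    held_xs, held_ys = zip(*held_out)
    points = []
    for m in sizes:
        xs, ys = zip(*train[:m])
        model = fit_line(xs, ys)
        points.append((m, rmse(model, xs, ys), rmse(model, held_xs, held_ys)))
    return points


# Synthetic data (assumption for illustration): y = 2x + 1 plus Gaussian noise.
rng = random.Random(0)
data = []
for _ in range(300):
    x = rng.uniform(0, 10)
    data.append((x, 2 * x + 1 + rng.gauss(0, 1)))

# Three-way split: training set, CV set, and a test set that is left untouched here.
train, cv, test = data[:200], data[200:250], data[250:]

sizes = [5, 10, 20, 50, 100, 200]
points = learning_curve_points(train, cv, sizes)

for m, train_err, cv_err in points:
    print(f"m={m:3d}  train RMSE={train_err:.3f}  CV RMSE={cv_err:.3f}")
```

Calling `learning_curve_points(train, test, sizes)` instead would give the second variant, with the test error in place of the CV error, which is exactly the choice I am asking about.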

Thanks a lot for any clarification. Best regards.