If you're willing to assign a prior Beta distribution on $p_1$ and $p_2$, you could do a Bayesian analysis. The model would be

\begin{align}

p_1&\sim \operatorname{Beta}(\alpha_1,\beta_1) \\

p_2&\sim \operatorname{Beta}(\alpha_2,\beta_2) \\

y_1&\sim \operatorname{Bin}(p_1,n_1) \\

y_2&\sim \operatorname{Bin}(p_2,n_2)

\end{align}

Where $\alpha$ and $\beta$ are your hyperparameters for $p_1$ and $p_2$, respectively. Here is the example done in R and JAGS. This code can easily be extended to include different $n$. A solution based on conjugacy is presented below.

library(rjags)

library(R2jags)

library(runjags)

library(hdrcde)

## The model

cat("

model{

for(i in 1:N) {

y1[i] ~ dbin(p1, n1)

y2[i] ~ dbin(p2, n2)

}

p1 ~ dbeta(500, 500)

p2 ~ dbeta(300, 500)

delta <- p1 - p2

}

", file="jags_model_beta.txt")

## The data

set.seed(0)

n1 = 100 ; n2 = 100 ; p1 = 0.65 ; p2 = 0.5 ; max.score = 15

y = as.vector(unlist(mapply(FUN = rbinom, n = c(n1, n2), size = c(max.score, max.score), prob = c(p1, p2))))

groups = factor( rep(1:2, times = c(n1, n2)) )

data.list <- list(

y1 = y[groups == 1]

, y2 = y[groups == 2]

, n1 = max.score

, n2 = max.score

, N = n1

)

## Parameters to store

params <- c(

"p1"

, "p2"

, "delta"

)

## MCMC settings

niter <- 10000 # number of iterations

nburn <- 2000 # number of iterations to discard (the burn-in-period)

nchains <- 5 # number of chains

## Run JAGS

out <- jags(

data = data.list

, parameters.to.save = params

, model.file = "jags_model_beta.txt"

, n.chains = nchains

, n.iter = niter

, n.burnin = nburn

, n.thin = 5

# , inits = jags.inits

, progress.bar = "text")

## Display the results

print(out, 3)

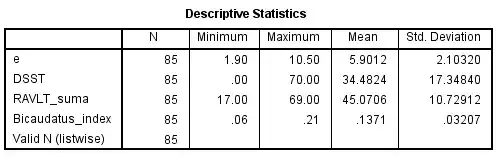

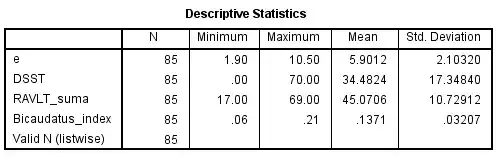

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

delta 0.121 0.014 0.093 0.112 0.121 0.131 0.149 1.001 4700

p1 0.584 0.010 0.565 0.578 0.584 0.591 0.604 1.001 8000

p2 0.463 0.010 0.443 0.456 0.463 0.470 0.484 1.001 5200

The delta is the difference between the proportions. The 95% credible interval for the difference is $(0.09; 0.15)$. This does not include $0$ so we have evidence that there is a true difference between proportions. We can also plot the posterior of delta:

jagsfit.mcmc <- as.mcmc(out)

jagsfit.mcmc <- combine.mcmc(jagsfit.mcmc)

hdr.den(as.vector(jagsfit.mcmc[, "delta"]), prob = c(95))

Solution based on conjugacy

As I explain here, the posterior of $p_1$ and $p_2$ are also Beta distributions if the priors are Beta distributions. Specifically, the posterior distributions are:

\begin{align}

p_{1}^{\star}&\sim \operatorname{Beta}(\alpha_1 + \sum_{i=1}^{n}x_{1,i},\beta_1 + \sum_{i=1}^{n}N_{1,i} - \sum_{i=1}^{n}x_{1,i}) \\

p_2^{\star}&\sim \operatorname{Beta}(\alpha_2 + \sum_{i=1}^{n}x_{2,i},\beta_2 + \sum_{i=1}^{n}N_{2,i} - \sum_{i=1}^{n}x_{2,i})

\end{align}

Where $x_{1,i}$ and $x_{2,i}$ denote the number of successes for group 1 and 2. $N_{1,i}$ and $N_{2,i}$ denote the sample sizes for group 1 and 2. The solution in R is:

alpha1 <- 500

beta1 <- 500

alpha2 <- 300

beta2 <- 500

post_p1 <- rbeta(1e6, alpha1 + sum(y[groups == 1]), beta1 + n1*15 - sum(y[groups == 1]))

post_p2 <- rbeta(1e6, alpha2 + sum(y[groups == 2]), beta2 + n2*15 - sum(y[groups == 2]))

delta <- post_p1 - post_p2

hist(delta, las = 1, col = "slategrey", freq = FALSE)

(credval <- quantile(delta, c(0.025, 0.975)))

abline(v = credval, col = "red", lwd = 2)

The 95% credible interval is nearly identical with the one produced with JAGS.