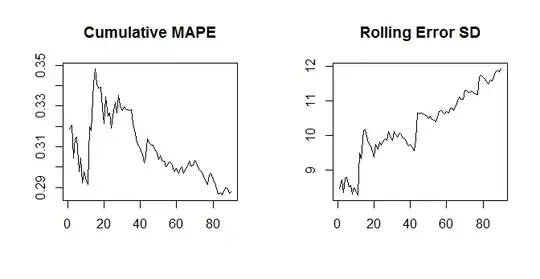

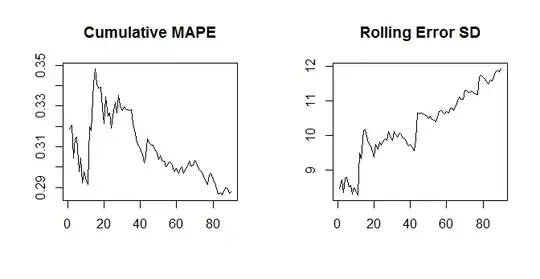

Note that the MAPE goes down as actuals go up - and the standard deviation doesn't. So for a given time series of errors (with potentially increasing SD), we could simply have a time series of actuals with a positive trend, and once the positive trend is strong enough, the MAPE will start going down.

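    set.seed(10)
    nn <- 100

    # Errors whose SD grows from 10 to 15 over time
    error <- rnorm(nn, 0, seq(10, 15, length.out = nn))
    # Actuals with a positive trend
    actuals <- seq(20, 50, length.out = nn)

    # Running MAPE and running SD of the errors up to each time point
    cumulative.mape <- cumsum(abs(error) / actuals) / (1:nn)
    cumulative.sd <- sapply(1:nn, function(xx) sd(error[1:xx]))

    # Plot both side by side, dropping the first 10 (very noisy) values
    opar <- par(mfrow = c(1, 2))
    plot(cumulative.mape[-(1:10)], type = "l", main = "Cumulative MAPE", ylab = "", xlab = "")
    plot(cumulative.sd[-(1:10)], type = "l", main = "Rolling Error SD", ylab = "", xlab = "")
    par(opar)  # restore the previous graphics settings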

So the issue is that the MAPE depends on both the errors and the actuals, whereas the SD of the errors doesn't depend on the actuals (beyond the actuals influencing the errors themselves, of course). Thus, this should typically not happen for the SD and MAE, since the MAE again only depends on the errors, not the actuals.
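To see the MAE and the MAPE move in opposite directions without leaning on one small sample, here is a minimal sketch that reuses the same error and actuals setup with a much larger `nn` (my choice, purely to average out the noise) and compares the first and second halves of the series. The names `first`, `second`, `mae_half` and `mape_half` are made up for this illustration.

```r
set.seed(10)
nn <- 10000  # assumption: far more points than in the original example

# Same construction as before: error SD rises 10 -> 15, actuals rise 20 -> 50
error <- rnorm(nn, 0, seq(10, 15, length.out = nn))
actuals <- seq(20, 50, length.out = nn)

first <- 1:(nn / 2)
second <- (nn / 2 + 1):nn

# MAE uses the errors only; MAPE divides each absolute error by its actual
mae_half <- c(mean(abs(error[first])), mean(abs(error[second])))
mape_half <- c(mean(abs(error[first]) / actuals[first]),
               mean(abs(error[second]) / actuals[second]))

print(round(mae_half, 2))   # first half, second half
print(round(mape_half, 3))  # first half, second half
```

The MAE should rise from the first half to the second, because the errors get larger, while the MAPE should fall, because the actuals grow by a factor of 2.5 and the error SD only by a factor of 1.5.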

EDIT: In general, different error measures move somewhat in tandem - but not perfectly so. Minimizing different error types is the same as optimizing different loss functions - and the minimizer for one loss function is typically not the minimizer of a different loss function.

For an extreme example, minimizing the MAE will pull you towards the median of the future distribution, while minimizing the MSE will pull you towards its expectation. If the future distribution is asymmetric, these will be different, so minimizing the MAE will yield biased predictions. I just discussed this yesterday.
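A quick way to check the median-versus-expectation claim is a brute-force search. The sketch below assumes a lognormal future distribution (my choice of an asymmetric example, not anything from the question) and evaluates the MAE and MSE of constant point forecasts on a grid. The names `y`, `candidates`, `best_mae` and `best_mse` are illustrative.

```r
set.seed(1)

# Assumed asymmetric "future distribution": standard lognormal draws
y <- rlnorm(10000, meanlog = 0, sdlog = 1)

# Candidate constant point forecasts
candidates <- seq(0.5, 3, by = 0.01)

# Average absolute and squared error of each candidate against the draws
mae <- sapply(candidates, function(f) mean(abs(y - f)))
mse <- sapply(candidates, function(f) mean((y - f)^2))

best_mae <- candidates[which.min(mae)]
best_mse <- candidates[which.min(mse)]

# The MAE-optimal forecast sits at the sample median,
# the MSE-optimal forecast at the sample mean
print(c(best_mae = best_mae, median = median(y)))
print(c(best_mse = best_mse, mean = mean(y)))
```

For this right-skewed distribution the mean lies well above the median, so the MAE-optimal forecast is systematically lower than the MSE-optimal one.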

So: no, minimizing one error measure will not necessarily minimize a different one.

I regularly read the International Journal of Forecasting, and accepted best practice there is to report multiple error measures, and sometimes, yes, they imply that different methods are "best". Which authors and readers take in stride. I'd say that point forecasts are not overly helpful, anyway, and that you should always aim at full predictive densities.

(Incidentally, I can't recall ever having seen the SD of the errors reported in the IJF, and I don't really see the point of it as an error measure. An error time series can be badly biased and constant over time, with a zero SD - what's good about that?)
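As a tiny illustration of that last point, consider a hypothetical error series in which every forecast is off by exactly the same amount (the value 5 and the length 20 are arbitrary):

```r
# Hypothetical error series: every forecast is off by exactly +5
error <- rep(5, 20)

print(sd(error))         # spread of the errors
print(mean(error))       # bias (mean error)
print(mean(abs(error)))  # MAE
```

The SD is zero, which looks perfect, while the bias and the MAE are both 5.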

EDIT 2: I no longer believe assessing point forecasts using different error measures is useful. To the contrary, I believe it's actively misleading. My argument can be found in Kolassa (2020), "Why the 'best' point forecast depends on the error or accuracy measure", International Journal of Forecasting.