I have two random variables which are independent and identically distributed, i.e. $\epsilon_{1}, \epsilon_{0} \overset{\text{iid}}{\sim} \text{Gumbel}(\mu,\beta)$:

$$F(\epsilon) = \exp(-\exp(-\frac{\epsilon-\mu}{\beta})),$$

$$f(\epsilon) = \dfrac{1}{\beta}\exp(-\left(\frac{\epsilon-\mu}{\beta}+\exp(-\frac{\epsilon-\mu}{\beta})\right)).$$

I am trying to calculate two quantities:

- $$\mathbb{E}_{\epsilon_{1}}\mathbb{E}_{\epsilon_{0}|\epsilon_{1}}\left[c+\epsilon_{1}|c+\epsilon_{1}>\epsilon_{0}\right]$$

- $$\mathbb{E}_{\epsilon_{1}}\mathbb{E}_{\epsilon_{0}|\epsilon_{1}}\left[\epsilon_{0}|c+\epsilon_{1}<\epsilon_{0}\right]$$
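For reference, both quantities can be estimated by simulation. Below is a minimal Monte Carlo sketch using only the Python standard library. The function names (`sample_gumbel`, `simulate_conditional_means`), the sample size, and the seed are illustrative choices of mine, and the draws use the inverse of the CDF $F$ given above, $\epsilon = \mu - \beta\ln(-\ln U)$ with $U$ uniform on $(0,1)$.

```python
import math
import random


def sample_gumbel(rng, mu=0.0, beta=1.0):
    """Draw one Gumbel(mu, beta) variate by inverting F(eps) = exp(-exp(-(eps-mu)/beta))."""
    u = rng.random()
    while u == 0.0:  # random() lies in [0, 1); avoid log(0)
        u = rng.random()
    return mu - beta * math.log(-math.log(u))


def simulate_conditional_means(c, mu=0.0, beta=1.0, n=100_000, seed=0):
    """Estimate E[c + eps1 | c + eps1 > eps0] and E[eps0 | c + eps1 < eps0].

    Assumes both events occur at least once among the n draws
    (true for moderate values of c / beta).
    """
    rng = random.Random(seed)
    sum1, count1 = 0.0, 0  # draws where c + eps1 > eps0
    sum0, count0 = 0.0, 0  # draws where c + eps1 < eps0
    for _ in range(n):
        eps1 = sample_gumbel(rng, mu, beta)
        eps0 = sample_gumbel(rng, mu, beta)
        if c + eps1 > eps0:
            sum1 += c + eps1
            count1 += 1
        else:
            sum0 += eps0
            count0 += 1
    return sum1 / count1, sum0 / count0


if __name__ == "__main__":
    for c in (-2.0, -1.0, 0.0, 1.0, 2.0):
        m1, m0 = simulate_conditional_means(c)
        print(f"c={c:+.1f}  E[c+eps1 | win]={m1:.4f}  E[eps0 | win]={m0:.4f}")
```

Any candidate closed form can be checked against these estimates; the simulation error shrinks like $1/\sqrt{n}$.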

I reach a point where I need to integrate something of the form $e^{e^{x}}$, which does not seem to have a closed-form antiderivative. Can anyone help me out with this? Maybe I have done something wrong.

I feel there definitely should be a closed-form solution. (EDIT: Even if there is no closed form, an expression that software can evaluate quickly [such as Ei(x)] would be OK, I suppose.)
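On the numerical side, integrating the other variable out with the CDF $F$ leaves one-dimensional integrals: the first quantity is $\int (c+e)\,f(e)\,F(c+e)\,de \,/ \int f(e)\,F(c+e)\,de$, and the second is $\int e\,f(e)\,F(e-c)\,de \,/ \int f(e)\,F(e-c)\,de$. Here is a rough sketch that evaluates these with composite Simpson's rule in plain Python. The helper names, the truncation of the infinite range, and the number of subintervals are my own assumptions and are only reasonable for moderate $|c|/\beta$.

```python
import math


def gumbel_cdf(x, mu=0.0, beta=1.0):
    z = (x - mu) / beta
    if z < -700.0:  # exp(-z) would overflow; the CDF is numerically 0 here
        return 0.0
    return math.exp(-math.exp(-z))


def gumbel_pdf(x, mu=0.0, beta=1.0):
    z = (x - mu) / beta
    if z < -700.0:  # same overflow guard; the density is numerically 0 here
        return 0.0
    return math.exp(-(z + math.exp(-z))) / beta


def simpson(g, a, b, n=20_000):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0


def conditional_means(c, mu=0.0, beta=1.0):
    """Return (E[c+eps1 | c+eps1 > eps0], E[eps0 | c+eps1 < eps0]) by quadrature."""
    # Assumption: truncating to this finite window loses negligible mass.
    lo = mu - 10.0 * beta - abs(c)
    hi = mu + 40.0 * beta + abs(c)

    def f(e):
        return gumbel_pdf(e, mu, beta)

    def F(e):
        return gumbel_cdf(e, mu, beta)

    p1 = simpson(lambda e: f(e) * F(c + e), lo, hi)              # P(c+eps1 > eps0)
    num1 = simpson(lambda e: (c + e) * f(e) * F(c + e), lo, hi)
    p0 = simpson(lambda e: f(e) * F(e - c), lo, hi)              # P(c+eps1 < eps0)
    num0 = simpson(lambda e: e * f(e) * F(e - c), lo, hi)
    return num1 / p1, num0 / p0


if __name__ == "__main__":
    for c in (-2.0, -1.0, 0.0, 1.0, 2.0):
        q1, q0 = conditional_means(c)
        print(f"c={c:+.1f}  first={q1:.6f}  second={q0:.6f}")
```

A library routine such as an adaptive quadrature would do the same job; Simpson's rule is used here only to keep the sketch dependency-free.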

EDIT:

I think a change of variables helps. Let

$$y =\exp(-\frac{\epsilon_{1}-\mu}{\beta}),$$

so that

$$\epsilon_{1} = \mu-\beta\ln y.$$

The ranges of integration map to $[0,\;\infty)$ and $\left[0,\;\exp(-\frac{\epsilon_{0}-c-\mu}{\beta})\right]$ respectively.

The Jacobian is $|J|=\left|\dfrac{d\epsilon_{1}}{dy}\right|=\dfrac{\beta}{y}$. Under this change of variables, I have boiled the first quantity down to

$$\int_{0}^{\infty}\dfrac{1}{1-e^{-x}}\left(\int_{\mu-\beta\ln x-c}^{\infty}\left[c+\mu-\beta\ln y\right]e^{-y}dy\right)e^{-x}dx$$

There might be an algebra mistake but I still cannot solve this integral...

RELATED QUESTION: Expectation of the Maximum of iid Gumbel Variables

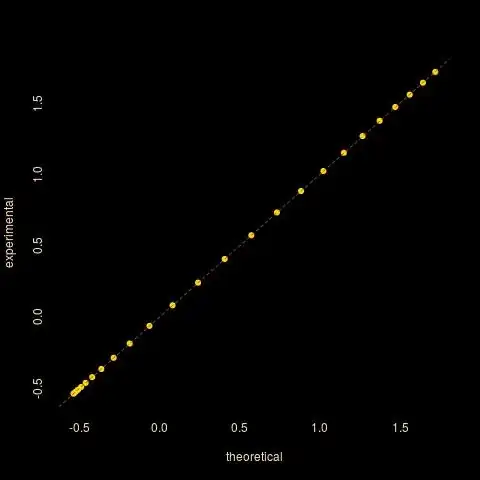

Figure: $c$ varies from -2 to 2, with logarithmic axes, based on 10⁵ simulations.