There is an explicit density for $S_n$ when $\sigma=1/2$ and a limiting density of

$$\frac{e^{-\frac{z}{2}-\frac{e^{-z}}{2}}}{\sqrt{2 \pi }}$$

(There might also be a general density for other values of $\sigma$.) I have to confess that I don't know which extreme value distribution has the above density. It's similar to a Gumbel distribution, but not quite the same.

This is done with a brute-force approach: first the pdf of $\sum_{i=1}^n Y_i$ is found, and from it the density of $S_n=\sum_{i=1}^n Y_i - \log(n)$ (again, just for $\sigma=1/2$). Then the limit of the density of $S_n$ as $n \to \infty$ is found, along with the limiting moment generating function.

First, the distribution of $Y_i$ (using Mathematica throughout).

pdf[j_, y_] := Simplify[PDF[TransformedDistribution[-Log[1 - x],
    x \[Distributed] BetaDistribution[1 - 1/2, j/2]], y] //
   TrigToExp, Assumptions -> y > 0]

So the pdfs for $Y_1$ and $Y_2$ are

pdf[1, y1]

$$\frac{1}{\pi \sqrt{e^{y_1}-1}}$$

pdf[2, y2]

$$\frac{e^{-\frac{y_2}{2}}}{2 \sqrt{e^{y_2}-1}}$$
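As a hand check of these two outputs, here is the change of variables written out. It assumes, as in the code, that $Y_j=-\log(1-X_j)$ with $X_j\sim\text{Beta}(1/2,\,j/2)$; the symbols $X_j$ and $B(\cdot,\cdot)$ (the beta function) are notation introduced here. Since $x=1-e^{-y}$ and $dx/dy=e^{-y}$,

$$f_{Y_j}(y)=\frac{(1-e^{-y})^{-1/2}\,(e^{-y})^{\frac{j}{2}-1}}{B\left(\frac{1}{2},\frac{j}{2}\right)}\,e^{-y}=\frac{e^{-\frac{j y}{2}}}{B\left(\frac{1}{2},\frac{j}{2}\right)\sqrt{1-e^{-y}}},\qquad y>0.$$

With $B(1/2,1/2)=\pi$ and $B(1/2,1)=2$, and using $\sqrt{1-e^{-y}}=e^{-y/2}\sqrt{e^{y}-1}$, this reduces to the two expressions above for $j=1$ and $j=2$.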

The distributions of the partial sums of the $Y$'s are constructed sequentially:

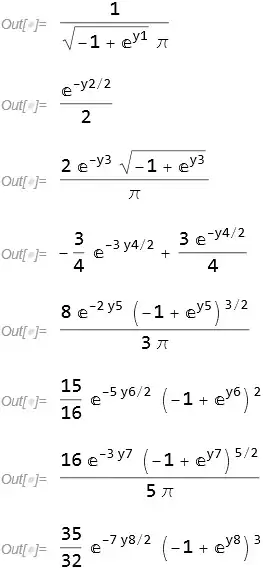

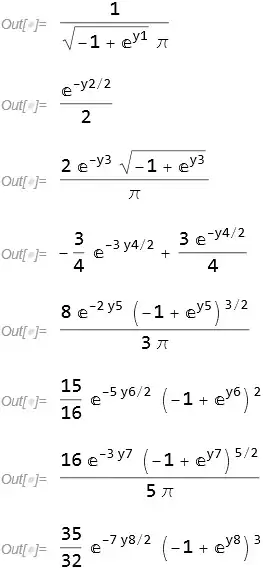

pdfSum[1] = pdf[1, y1]

pdfSum[2] = Integrate[pdfSum[1] pdf[2, y2 - y1], {y1, 0, y2}, Assumptions -> y2 > 0]

pdfSum[3] = Integrate[pdfSum[2] pdf[3, y3 - y2], {y2, 0, y3}, Assumptions -> y3 > 0]

pdfSum[4] = Integrate[pdfSum[3] pdf[4, y4 - y3], {y3, 0, y4}, Assumptions -> y4 > 0]

pdfSum[5] = Integrate[pdfSum[4] pdf[5, y5 - y4], {y4, 0, y5}, Assumptions -> y5 > 0]

pdfSum[6] = Integrate[pdfSum[5] pdf[6, y6 - y5], {y5, 0, y6}, Assumptions -> y6 > 0]

pdfSum[7] = Integrate[pdfSum[6] pdf[7, y7 - y6], {y6, 0, y7}, Assumptions -> y7 > 0]

pdfSum[8] = Integrate[pdfSum[7] pdf[8, y8 - y7], {y7, 0, y8}, Assumptions -> y8 > 0]

From these results a pattern emerges, and the pdf of $\sum_{i=1}^n Y_i$ for general $n$ (with $z>0$) is

$$\frac{\Gamma \left(\frac{n+1}{2}\right)}{\sqrt{\pi }\, \Gamma \left(\frac{n}{2}\right)}\, e^{-\frac{(n-1) z}{2}} \left(e^{z}-1\right)^{\frac{n-2}{2}}$$
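The pattern can also be motivated without the integrals. This is only a sketch, and it assumes the $Y_j$ are independent with $Y_j=-\log(1-X_j)$ and $X_j\sim\text{Beta}(1/2,\,j/2)$ as in the code, so that $1-X_j\sim\text{Beta}(j/2,\,1/2)$ (the symbols $X_j$, $P_n$, $U$, $V$ are notation introduced here). It uses the standard fact that if $U\sim\text{Beta}(a,b)$ and $V\sim\text{Beta}(a+b,c)$ are independent, then $UV\sim\text{Beta}(a,b+c)$. Applying this repeatedly gives

$$P_n=\prod_{j=1}^n (1-X_j)\sim\text{Beta}\left(\tfrac{1}{2},\tfrac{n}{2}\right),\qquad \sum_{j=1}^n Y_j=-\log P_n .$$

The change of variables $p=e^{-z}$ then yields, for $z>0$,

$$\frac{e^{-\frac{z}{2}}\left(1-e^{-z}\right)^{\frac{n-2}{2}}}{B\left(\frac{1}{2},\frac{n}{2}\right)}=\frac{\Gamma\left(\frac{n+1}{2}\right)}{\sqrt{\pi}\,\Gamma\left(\frac{n}{2}\right)}\,e^{-\frac{(n-1)z}{2}}\left(e^{z}-1\right)^{\frac{n-2}{2}}$$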

So the pdf of $S_n$ is the pdf of the sum evaluated at $z+\log(n)$, which accounts for the shift by $\log(n)$:

pdfSn[n_] := Gamma[(1 + n)/2]/(Sqrt[\[Pi]] Gamma[n/2])*
   Exp[-(n - 1) (z + Log[n])/2] (-1 + Exp[z + Log[n]])^((n - 2)/2)

Taking the limit of this function as $n \to \infty$, we have

pdfS = Limit[pdfSn[n], n -> \[Infinity], Assumptions -> z \[Element] Reals]

$$\frac{e^{-\frac{z}{2}-\frac{e^{-z}}{2}}}{\sqrt{2 \pi }}$$
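The limit can also be checked by hand; what follows is a sketch rather than a rigorous proof, with $f_{S_n}$ a symbol introduced here for the density of $S_n$. Writing $e^{z+\log n}=n e^{z}$ and collecting the powers of $n$ gives

$$f_{S_n}(z)=\frac{\Gamma\left(\frac{n+1}{2}\right)}{\sqrt{\pi}\,\Gamma\left(\frac{n}{2}\right)}\,n^{-1/2}\,e^{-\frac{z}{2}}\left(1-\frac{e^{-z}}{n}\right)^{\frac{n-2}{2}},\qquad z>-\log n.$$

As $n\to\infty$, $\Gamma\left(\frac{n+1}{2}\right)/\Gamma\left(\frac{n}{2}\right)\sim\sqrt{n/2}$ and $\left(1-e^{-z}/n\right)^{\frac{n-2}{2}}\to e^{-e^{-z}/2}$, so

$$f_{S_n}(z)\to\frac{1}{\sqrt{\pi}}\cdot\frac{1}{\sqrt{2}}\,e^{-\frac{z}{2}}\,e^{-\frac{e^{-z}}{2}}=\frac{e^{-\frac{z}{2}-\frac{e^{-z}}{2}}}{\sqrt{2\pi}}.$$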

The moment generating function associated with this pdf is

mgf = Integrate[Exp[\[Lambda] z] pdfS, {z, -\[Infinity], \[Infinity]},
   Assumptions -> Re[\[Lambda]] < 1/2]

$$\frac{2^{-\lambda } \Gamma \left(\frac{1}{2}-\lambda \right)}{\sqrt{\pi }}$$

This doesn't look exactly like the mgf in the OP's question when $\sigma=1/2$, but Mathematica shows that the two are identical:

Gamma[1 - \[Lambda]/(1/2)]/((1/2)^\[Lambda] Gamma[1 - \[Lambda]]) // FunctionExpand

$$\frac{2^{-\lambda } \Gamma \left(\frac{1}{2}-\lambda \right)}{\sqrt{\pi }}$$
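The agreement is an instance of the Legendre duplication formula, $\Gamma(2x)=2^{2x-1}\,\Gamma(x)\,\Gamma\left(x+\tfrac{1}{2}\right)/\sqrt{\pi}$. Taking $x=\tfrac{1}{2}-\lambda$, and reading the expression passed to `FunctionExpand` as $2^{\lambda}\,\Gamma(1-2\lambda)/\Gamma(1-\lambda)$, a short hand verification is

$$\frac{2^{\lambda}\,\Gamma(1-2\lambda)}{\Gamma(1-\lambda)}=\frac{2^{\lambda}}{\Gamma(1-\lambda)}\cdot\frac{2^{-2\lambda}\,\Gamma\left(\frac{1}{2}-\lambda\right)\Gamma(1-\lambda)}{\sqrt{\pi}}=\frac{2^{-\lambda}\,\Gamma\left(\frac{1}{2}-\lambda\right)}{\sqrt{\pi}}.$$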

Another check is to extract several moments from both forms of the mgf, which gives identical results. Here is the first moment:

D[mgf, {\[Lambda], 1}] /. \[Lambda] -> 0 // FunctionExpand // FullSimplify

D[Gamma[1 - \[Lambda]/(1/2)]/((1/2)^\[Lambda] Gamma[1 - \[Lambda]]), {\[Lambda], 1}] /. \[Lambda] -> 0

Both give $\gamma +\log (2)$ (where $\gamma$ is Euler's constant).
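This value can be confirmed by hand with the digamma function $\psi$. Let $M(\lambda)$ denote the mgf above (a symbol introduced here); since $M(0)=1$, the first moment equals the derivative of $\log M$ at $\lambda=0$:

$$\frac{d}{d\lambda}\left[-\lambda\log 2+\log\Gamma\left(\tfrac{1}{2}-\lambda\right)-\tfrac{1}{2}\log\pi\right]_{\lambda=0}=-\log 2-\psi\left(\tfrac{1}{2}\right)=-\log 2+\gamma+2\log 2=\gamma+\log 2,$$

where $\psi\left(\tfrac{1}{2}\right)=-\gamma-2\log 2$ is a standard special value.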

For the 17th moment:

D[mgf, {\[Lambda], 17}] /. \[Lambda] -> 0 // N

D[Gamma[1 - \[Lambda]/(1/2)]/((1/2)^\[Lambda] Gamma[1 - \[Lambda]]), {\[Lambda], 17}] /. \[Lambda] -> 0 // N

Both give $3.71979\times 10^{19}$.

There might be some simple way to insert $\sigma$ into the limiting pdf, but I haven't explored that yet.
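On the question of which distribution the limiting density belongs to, here is one identification that can be checked directly. It is offered as a side observation for the $\sigma=1/2$ case only, with $W$ and $Z$ symbols introduced here. If $W\sim\chi^2_1$, with density $w^{-1/2}e^{-w/2}/\sqrt{2\pi}$ for $w>0$, then $Z=-\log W$ has density

$$f_Z(z)=\frac{\left(e^{-z}\right)^{-1/2}e^{-\frac{e^{-z}}{2}}}{\sqrt{2\pi}}\,e^{-z}=\frac{e^{-\frac{z}{2}-\frac{e^{-z}}{2}}}{\sqrt{2\pi}},$$

and its mgf is $E\left[W^{-\lambda}\right]=2^{-\lambda}\,\Gamma\left(\tfrac{1}{2}-\lambda\right)/\sqrt{\pi}$ for $\lambda<1/2$, matching the expressions found earlier. So the limit appears to be the negative logarithm of a chi-square variable with one degree of freedom, a log-gamma-type law rather than a standard Gumbel.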