If you intend to use your model to predict normal/abnormal status in a new set of patients, you might not have to do anything about perfect separation or multicollinearity.

Say that you had one variable that perfectly predicted normal/abnormal status. A model based on that predictor would show perfect separation, but wouldn't you still want to use it?

Your perfect separation, however, might come from the large number of predictor variables, which might make perfect separation almost unavoidable. Then, even if no particular variables are perfectly related to disease state, you will have problems in numerical convergence of your model, and the particular combinations of variables that predict perfectly in this data set might not apply well to a new one. In that case, this page provides concise help on how to proceed. Also, this question and answer by @hxd1011 shows that ridge regression (under the name of "L2 regularization" on that page) can solve the problem of perfect separation.

This page is a good introduction to multicollinearity in the logistic regression context. Multicollinearity poses problems in getting precise estimates of the coefficients corresponding to particular variables. With a set of collinear variables all related to disease status, it's hard to know exactly how much credit each of them should get individually. If you don't care about how much credit to give to each, however, they can work very well together for prediction. In general, you typically lose in predictions if you throw away predictors, even predictors that don't meet individual tests of "statistical significance." Ridge regression tends to treat (and penalize) sets of correlated variables together, providing a principled approach to multicollinearity.

Ridge regression (as provided for example by the glmnet package in R) thus could solve both the perfect-separation and the multicollinearity problems, particularly if your interest is in prediction. This answer shows an example of using glmnet functions for logistic regression. Its example is for LASSO, but you simple set the parameter value alpha=0 for ridge instead. As another example, ISLR starting on page 251 has a worked through case of ridge regression for a standard linear model; specify the parameter family="binomial" instead for logistic regression.

In my experience, however, this type of model in clinical science sometimes isn't used for predicting new cases, but rather to try to argue that certain variables are the ones most closely related to disease status in general. I think that many of the comments on your question were getting at that possibility. The temptation is that the variables included in the "best model" for explaining the present data are then taken to be the most important in general. That can be a dangerous interpretation. Follow the feature-selection tag on this site for extensive discussion.

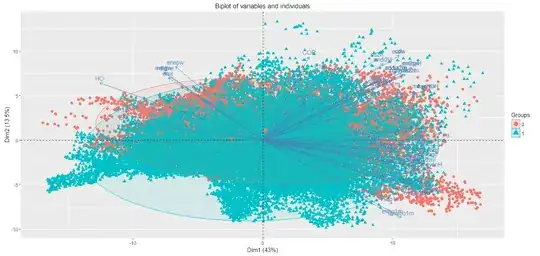

In an update to your question, you show that the first 2 principal components of your predictor matrix do not separate the 2 groups. That's not so surprising, as these are only the first 2 dimensions of a 60-dimension space, and it's hard to know exactly where among those dimensions the perfect separation arises. I don't think that your PCA helps here at all for variable selection. Ridge regression is the best way to try to proceed. Be warned, however, that if you are looking for p-values you do not get them directly from ridge regression. If p-values are important to you, repeat the process on multiple bootstrap samples of the data.