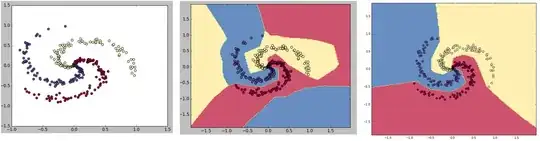

The reason is that you are NOT asking the model to provide "a desired boundary"; you are simply asking it to classify your data correctly.

There are infinitely many decision boundaries that accomplish the same classification task with the same accuracy.
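Here is a minimal sketch of this point. It uses a made-up six-point data set, not the data from the question. Three different straight lines all classify every training point correctly, and so would any vertical line x1 = t with -1 < t < 1. The data, the `accuracy` helper and the chosen lines are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy data: class 0 on the left (x1 <= -1), class 1 on the right (x1 >= 1).
X = np.array([[-2.0, 0.0], [-1.5, 1.0], [-1.0, -1.0],
              [ 1.0, 0.5], [ 1.5, -1.0], [ 2.0, 1.0]])
y = np.array([0, 0, 0, 1, 1, 1])

def accuracy(w, b):
    """Accuracy of the linear rule: predict 1 if w . x + b > 0, else 0."""
    pred = (X @ w + b > 0).astype(int)
    return (pred == y).mean()

# Three different linear boundaries, written as (w, b) with boundary w . x + b = 0.
boundaries = {
    "x1 = 0":          (np.array([1.0, 0.0]), 0.0),
    "x1 = 0.5":        (np.array([1.0, 0.0]), -0.5),
    "x1 + 0.3 x2 = 0": (np.array([1.0, 0.3]), 0.0),
}
for name, (w, b) in boundaries.items():
    print(f"{name:>16}: accuracy = {accuracy(w, b):.2f}")
```

Nothing in the training accuracy prefers one of these lines over another, which is exactly why a classifier trained only to be accurate has no reason to pick "the" boundary you have in mind.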

When we use a neural network, the model can choose whichever of these boundaries it happens to find. In addition, the model does not know the shape of the data (the ground truth / generative model / spiral shape in your example). It will simply select one "working" decision boundary, not one that is "really optimal with respect to the true distribution" (as indicated in your figure 3).
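To see this with an actual network, the sketch below trains two small multilayer perceptrons that differ only in their random seed. It assumes scikit-learn's `MLPClassifier` and a hypothetical `make_spirals` helper that generates a two-spiral toy set (not the asker's exact data). Both models will typically fit the training points well, yet their boundaries differ, most visibly in regions with no data.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

def make_spirals(n_per_class=100, noise=0.05, seed=0):
    """Hypothetical helper: two interleaved spiral arms, one per class."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.25, 3 * np.pi, n_per_class)            # angle along the arm
    arm = np.column_stack([t * np.cos(t), t * np.sin(t)]) / (3 * np.pi)
    X = np.vstack([arm, -arm])                                 # second arm = first rotated 180 degrees
    X += rng.normal(scale=noise, size=X.shape)
    y = np.repeat([0, 1], n_per_class)
    return X, y

X, y = make_spirals()

# Same data and architecture; only the random weight initialisation differs.
seeds = (1, 2)
models = [
    MLPClassifier(hidden_layer_sizes=(64, 64), solver="lbfgs",
                  max_iter=5000, random_state=s).fit(X, y)
    for s in seeds
]

# Evaluate both learned boundaries on a grid extending past the data (radius about 1).
xx, yy = np.meshgrid(np.linspace(-2, 2, 200), np.linspace(-2, 2, 200))
grid = np.column_stack([xx.ravel(), yy.ravel()])
preds = [m.predict(grid) for m in models]

for s, m in zip(seeds, models):
    print(f"seed {s}: training accuracy = {m.score(X, y):.3f}")
disagree = np.mean(preds[0] != preds[1])
print(f"grid points where the two boundaries disagree: {disagree:.1%}")
```

Plotting `preds[0]` and `preds[1]` over `grid` would show two boundaries that both "work" on the spiral points but extend into the empty space in different ways.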

If you care about the decision boundary itself, take a look at support vector machines (SVMs). Even with an SVM, however, the decision boundary may not be what you expect: the SVM maximizes the "margin", but it still has no idea about the true distribution (spiral or any other shape).
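A small sketch of the margin idea, assuming scikit-learn's `SVC` with a linear kernel and a very large `C` to approximate a hard-margin SVM. On four made-up points, any vertical line between x1 = 0 and x1 = 2 separates the classes, but the SVM picks the one exactly in the middle (x1 = 1, margin width 2) because that maximizes the margin. It still uses nothing except the training points themselves.

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical data: class 0 at x1 = 0, class 1 at x1 = 2.
X = np.array([[0.0, 0.0], [0.0, 1.0], [2.0, 0.0], [2.0, 1.0]])
y = np.array([0, 0, 1, 1])

# A very large C approximates a hard-margin linear SVM.
svm = SVC(kernel="linear", C=1e6).fit(X, y)
w, b = svm.coef_[0], svm.intercept_[0]   # boundary: w . x + b = 0

print("w =", w.round(3), " b =", round(b, 3))
print("boundary x1 =", round(-b / w[0], 3))           # midway between the two classes
print("margin width =", round(2 / np.linalg.norm(w), 3))
```

If the "true" generating process actually placed the boundary at, say, x1 = 0.5, the SVM would have no way of knowing; it only sees the four points.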

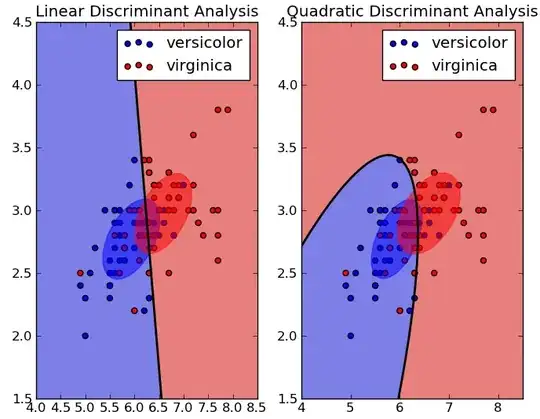

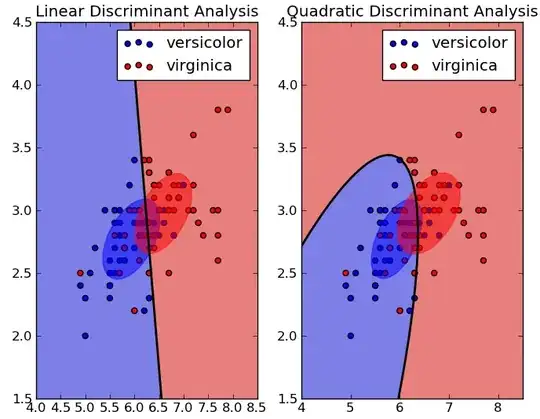

As mentioned in the comment, different types of models have different decision boundaries. For example, logistic regression and linear discriminant analysis (LDA) produce a line (or a hyperplane in higher-dimensional space), while quadratic discriminant analysis (QDA) produces a quadratic curve as the decision boundary.
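The effect of boundary shape can be seen on a synthetic data set where the ideal boundary is a circle: both classes are centred at the origin, one tightly clustered and one widely spread. This sketch assumes scikit-learn's `LogisticRegression`, `LinearDiscriminantAnalysis` and `QuadraticDiscriminantAnalysis`; the data are made up for illustration. The two linear models can only draw a straight line and score much lower, while QDA, whose boundary is quadratic, can wrap around the inner class.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.discriminant_analysis import (LinearDiscriminantAnalysis,
                                           QuadraticDiscriminantAnalysis)

rng = np.random.default_rng(0)
n = 500
# Illustrative data: class 0 has small spread, class 1 large spread, same centre.
X = np.vstack([rng.normal(scale=0.5, size=(n, 2)),
               rng.normal(scale=2.0, size=(n, 2))])
y = np.repeat([0, 1], n)

models = {
    "logistic regression": LogisticRegression(),
    "LDA": LinearDiscriminantAnalysis(),
    "QDA": QuadraticDiscriminantAnalysis(),
}
scores = {name: m.fit(X, y).score(X, y) for name, m in models.items()}
for name, s in scores.items():
    print(f"{name:>20}: training accuracy = {s:.3f}")

# LR and LDA each expose a single weight vector w and intercept b,
# so their boundary w . x + b = 0 is a straight line in 2-D.
for name in ("logistic regression", "LDA"):
    m = models[name]
    print(f"{name} boundary: w = {np.ravel(m.coef_).round(3)}, b = {np.ravel(m.intercept_).round(3)}")
```

QDA fits a separate covariance matrix per class, which is what makes its boundary quadratic (here close to a circle).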

pic source

Finally, my answer to another question gives some examples of the decision boundaries of different models.

Do all machine learning algorithms separate data linearly?