Given a hierarchical model $p(x|\phi,\theta)$, I want a two-stage process to fit the model: first, fix a handful of hyperparameters $\theta$, and then do Bayesian inference on the rest of the parameters $\phi$. For fixing the hyperparameters I am considering two options.

- Use Empirical Bayes (EB) and maximize the marginal likelihood $p(\mbox{all data}|\theta)$ (integrating out the rest of the model, which contains high-dimensional parameters).

- Use Cross Validation (CV) techniques such as $k$-fold cross validation to choose the $\theta$ that maximizes the predictive likelihood $p(\mbox{test data}|\mbox{training data}, \theta)$.

The advantage of EB is that I can use all the data at once, while for CV I need to (potentially) compute the model likelihood multiple times, once per fold, for every candidate $\theta$ in the search. The performance of EB and CV is comparable in many cases (*), and EB is often faster to estimate.
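To make the two options concrete, here is a minimal sketch for Bayesian ridge regression. It rests on several assumptions of my own that are not taken from any reference: the noise variance `sigma2` is known, $\theta$ is a single prior precision `alpha` on the weights, the data are synthetic, and all function names are purely illustrative. Both criteria are evaluated on the same grid of `alpha` values.

```python
import numpy as np

def gaussian_logpdf(y, mean, cov):
    """Log density of N(mean, cov) evaluated at y."""
    r = y - mean
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (len(y) * np.log(2 * np.pi) + logdet + r @ np.linalg.solve(cov, r))

def log_evidence(X, y, alpha, sigma2):
    """EB objective: log p(y | alpha), with the weights w ~ N(0, I/alpha) integrated out."""
    cov = sigma2 * np.eye(len(y)) + X @ X.T / alpha
    return gaussian_logpdf(y, np.zeros(len(y)), cov)

def log_predictive(X_tr, y_tr, X_te, y_te, alpha, sigma2):
    """CV objective for one fold: log p(y_te | y_tr, alpha)."""
    d = X_tr.shape[1]
    # Posterior over the weights given the training fold.
    S = np.linalg.inv(alpha * np.eye(d) + X_tr.T @ X_tr / sigma2)
    m = S @ X_tr.T @ y_tr / sigma2
    # Joint posterior predictive distribution of the held-out targets.
    cov = sigma2 * np.eye(len(y_te)) + X_te @ S @ X_te.T
    return gaussian_logpdf(y_te, X_te @ m, cov)

def cv_score(X, y, alpha, sigma2, k=5):
    """Sum of held-out log predictive densities over k contiguous folds."""
    idx = np.arange(len(y))
    total = 0.0
    for te in np.array_split(idx, k):
        tr = np.setdiff1d(idx, te)
        total += log_predictive(X[tr], y[tr], X[te], y[te], alpha, sigma2)
    return total

# Synthetic data (illustrative only); rows are i.i.d., so contiguous folds are fine.
rng = np.random.default_rng(0)
n, d, sigma2 = 60, 10, 0.25
X = rng.normal(size=(n, d))
w_true = rng.normal(size=d)
y = X @ w_true + np.sqrt(sigma2) * rng.normal(size=n)

alphas = np.logspace(-3, 3, 25)
eb = np.array([log_evidence(X, y, a, sigma2) for a in alphas])
cv = np.array([cv_score(X, y, a, sigma2) for a in alphas])
alpha_eb = alphas[np.argmax(eb)]
alpha_cv = alphas[np.argmax(cv)]
print(f"EB picks alpha = {alpha_eb:.3g}, 5-fold CV picks alpha = {alpha_cv:.3g}")
```

Note the cost difference: EB needs one marginal-likelihood evaluation per grid point, whereas CV needs `k` posterior fits per grid point.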

Question: Is there a theoretical foundation that links the two (say, EB and CV are the same in the limit of large data)? Or links EB to some generalizability criterion such as empirical risk? Can someone point to a good reference material?

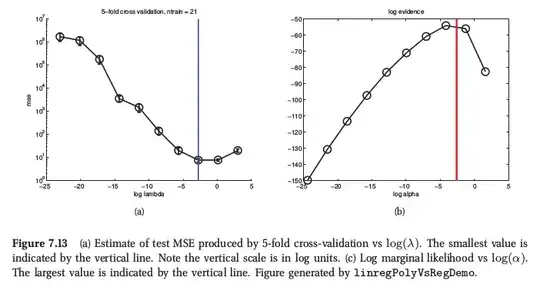

(*) As an illustration, here is a figure from Murphy's Machine Learning, Section 7.6.4, where he says that for ridge regression both procedures yield very similar result:

Murphy also says that the principal practical advantage of empirical Bayes (he calls it the "evidence procedure") over CV arises when $\theta$ consists of many hyperparameters (e.g. a separate penalty for each feature, as in automatic relevance determination, or ARD). In that setting it is not possible to use CV at all.