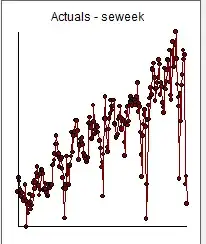

I use R and the auto.arima function for time series analysis. My data is here. Some of the series are nonstationary and have an apparent seasonal component: plots of the data show both trend and seasonality.
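As a rough way to put a number on the visible seasonality, an STL decomposition can be used. The sketch below is only an illustration: `demo` is a simulated weekly series standing in for the real data (an assumption, not the actual file), and the "seasonal strength" formula is one common choice rather than the only one.

```r
# Simulated weekly series with trend and yearly seasonality.
# Assumption: this is a stand-in, NOT the real data from the CSV file.
set.seed(42)
n <- 52 * 5
week <- seq_len(n)
demo <- ts(100 + 0.3 * week + 15 * sin(2 * pi * week / 52) + rnorm(n, sd = 4),
           frequency = 52)

# STL decomposition with a fixed (periodic) seasonal pattern
fit <- stl(demo, s.window = "periodic")
comp <- fit$time.series  # columns: seasonal, trend, remainder

# Seasonal strength: 1 - Var(remainder) / Var(seasonal + remainder), floored at 0
strength <- max(0, 1 - var(comp[, "remainder"]) /
                       var(comp[, "seasonal"] + comp[, "remainder"]))
print(strength)

plot(fit)
```

A value close to 1 would indicate a strong seasonal component; a value close to 0 would indicate little or none.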

The code snippet:

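    library(tseries)
    library(forecast)

    # Weekly data; the file is semicolon-separated with decimal commas
    t <- read.csv("tovar_moving_w.csv", header = TRUE, sep = ";", dec = ",")
    tts <- ts(t$qty, start = 1, frequency = 52)

    # Full (non-stepwise) model search without approximation
    FR <- auto.arima(tts, stepwise = FALSE, seasonal = TRUE,
                     approximation = FALSE, trace = TRUE)

    # 90-week forecast with 90%, 80% and 70% prediction intervals
    fcast_l <- forecast(FR, h = 90, level = c(0.9, 0.8, 0.7))
    plot(fcast_l, type = "l", col = "dark red", xlab = "Week", main = "Value")

    summary(FR)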

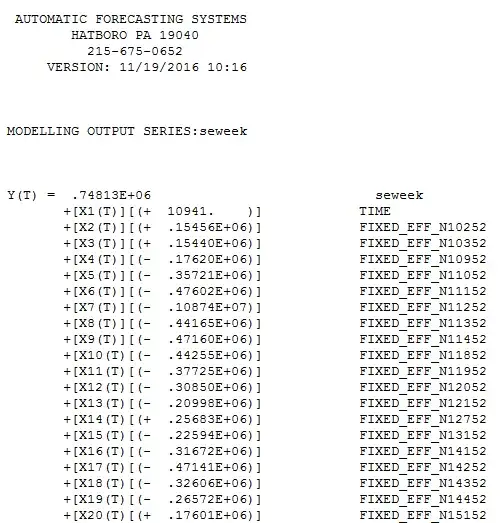

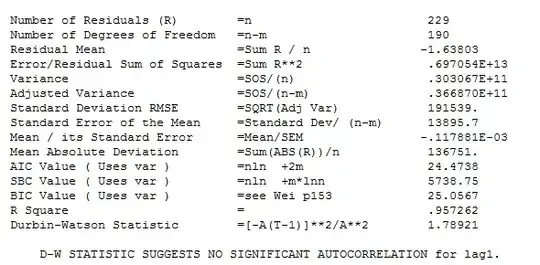

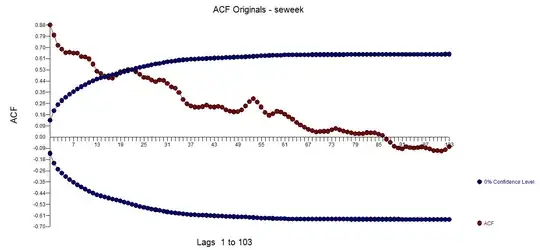

auto.arima selects ARIMA(2,1,2)(1,0,0)[52] as the best model, with AIC = 6541.54.

The function does not seem to detect the seasonality: the selected model has no seasonal differencing (D = 0).

If I force one seasonal difference by setting D = 1:

    FR <- auto.arima(tts, D = 1, stepwise = FALSE, seasonal = TRUE,
                     approximation = FALSE, trace = TRUE)

then the best model is ARIMA(0,0,2)(1,1,1)[52], AIC = 5128.24.

The AIC of the second model is lower than that of the first, and the forecasts from the second model look essentially correct.

Why does auto.arima not detect the seasonality? Is this a failure of the OCSB test?
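One way to check this directly is to ask `nsdiffs` how many seasonal differences each test recommends. This is a minimal sketch, assuming a recent version of the forecast package in which `nsdiffs` accepts `test = "ocsb"` and `test = "seas"`; `tts_demo` is a simulated stand-in, so with the real data `tts` would be passed instead.

```r
library(forecast)

# Simulated weekly series with trend and yearly seasonality.
# Assumption: this is a stand-in, NOT the real data from the CSV file.
set.seed(1)
n <- 52 * 5
week <- seq_len(n)
tts_demo <- ts(100 + 0.2 * week + 20 * sin(2 * pi * week / 52) + rnorm(n, sd = 5),
               frequency = 52)

# Number of seasonal differences (D) suggested by each test
d_ocsb <- nsdiffs(tts_demo, test = "ocsb")  # OCSB unit-root test
d_seas <- nsdiffs(tts_demo, test = "seas")  # seasonal-strength measure
print(c(ocsb = d_ocsb, seas = d_seas))
```

If the two tests disagree on the real series, the `seasonal.test` argument of `auto.arima` can be used to pick the test, as an alternative to fixing `D = 1` by hand.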

![enter image description here](../../images/3794537535.webp)