I do not think you can get more intuitive than restating what it does: it returns $1$ for something that interests you and $0$ in all other cases.

So if you want to count blue-eyed people, you can use an indicator function that returns one for each blue-eyed person and zero otherwise, and then sum the function's outputs.
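A minimal sketch of counting with an indicator function. The list of eye colours and the function name `is_blue_eyed` are hypothetical, made up for illustration:

```python
# Hypothetical data: eye colour of each person in a small sample
eye_colours = ["blue", "brown", "blue", "green", "brown", "blue"]

def is_blue_eyed(colour: str) -> int:
    """Indicator function: 1 if the person is blue-eyed, 0 otherwise."""
    return 1 if colour == "blue" else 0

# Summing the indicator over all people counts the blue-eyed ones
blue_count = sum(is_blue_eyed(c) for c in eye_colours)
print(blue_count)  # 3
```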

As for defining probability in terms of expectation and the indicator function: if you divide the count (the sum of ones) by the total number of cases, you get the proportion of cases in which the event occurred, and the expected value of the indicator function is exactly the probability of the event. Peter Whittle, in his books *Probability* and *Probability via Expectation*, writes a lot about defining probability this way, and he even considers this use of the expected value and the indicator function one of the most basic aspects of probability theory.
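A short worked identity may make the link explicit. Writing $A$ for the event of interest, $I_A$ for its indicator, and $\omega_1, \dots, \omega_n$ for $n$ observed cases (notation introduced here only for illustration):

$$ E[I_A] = 1 \cdot P(A) + 0 \cdot P(A^c) = P(A), \qquad \frac{1}{n}\sum_{i=1}^{n} I_A(\omega_i) = \frac{\#\{i : \omega_i \in A\}}{n}. $$

The first identity holds because $I_A$ takes the value $1$ with probability $P(A)$ and $0$ otherwise; the second is just the count of cases in $A$ divided by the total number of cases, i.e. the sample counterpart of $E[I_A]$.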

As for your question in the comment:

> isn't the Random Variable there to serve the same purpose? Like $H=1$ and $T=0$?

Well, yes, it is! In fact, in statistics we use indicator functions to create new random variables. For example, if $X$ is a normally distributed random variable, you may create a new random variable from it using an indicator function, say

$$ I_{2<X<3} = \begin{cases} 1 & \text{if } 2 < X < 3, \\ 0 & \text{otherwise.} \end{cases} $$
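A small simulation sketch of this new random variable. It assumes $X \sim N(0, 1)$ (the answer only says "normally distributed"), and the function name `indicator_2_3` is hypothetical. It draws values of $X$, applies the indicator, and compares the sample mean of $I_{2<X<3}$ with the exact $P(2 < X < 3)$:

```python
import random
from statistics import NormalDist

random.seed(0)

def indicator_2_3(x: float) -> int:
    """I_{2<X<3}: 1 if 2 < x < 3, else 0."""
    return 1 if 2 < x < 3 else 0

# Assumption: X ~ N(0, 1)
n = 200_000
draws = [random.gauss(0.0, 1.0) for _ in range(n)]
indicators = [indicator_2_3(x) for x in draws]  # the new random variable: only 0s and 1s

estimate = sum(indicators) / n                  # sample mean of I_{2<X<3}
std_normal = NormalDist(0.0, 1.0)
exact = std_normal.cdf(3) - std_normal.cdf(2)   # P(2 < X < 3)
print(f"estimate={estimate:.4f}, exact={exact:.4f}")
```

The sample mean of the indicator is simply the proportion of draws that fell in $(2, 3)$, which ties this example back to the expectation view of probability.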

or you may create a new random variable from two Bernoulli-distributed random variables $A$ and $B$:

$$ I_{A\ne B} = \begin{cases} 0 & \text{if } A = B, \\ 1 & \text{if } A \ne B. \end{cases} $$
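A sketch of $I_{A\ne B}$ under stated assumptions: $A$ and $B$ are taken to be independent, with hypothetical parameters $p_A = 0.3$ and $p_B = 0.6$ (neither is given in the answer). For $0/1$ values the indicator coincides with XOR, and under independence $P(A \ne B) = p_A(1 - p_B) + (1 - p_A)p_B$:

```python
import random

random.seed(1)

def bernoulli(p: float) -> int:
    """One draw of a Bernoulli(p) random variable: 1 with probability p, else 0."""
    return 1 if random.random() < p else 0

def indicator_not_equal(a: int, b: int) -> int:
    """I_{A != B}: 0 if a == b, 1 otherwise (for 0/1 values this equals a XOR b)."""
    return 0 if a == b else 1

# Hypothetical parameters; A and B are assumed independent
p_a, p_b = 0.3, 0.6
n = 100_000
values = [indicator_not_equal(bernoulli(p_a), bernoulli(p_b)) for _ in range(n)]

estimate = sum(values) / n
exact = p_a * (1 - p_b) + (1 - p_a) * p_b  # P(A != B) under independence
print(f"estimate={estimate:.3f}, exact={exact:.3f}")
```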

Of course, you could just as well use any other function to create a new random variable. The indicator function is helpful when you want to focus on a specific event and signal when it happens.

For a physical example of an indicator function, imagine that you painted one face of a six-sided die red, so you can now count red and non-red outcomes. The result is no less random than the die itself, but it is a new random variable that defines the outcomes differently.

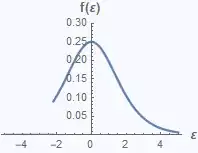
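The painted die can be sketched the same way. Here we assume a fair die and, hypothetically, that the face showing 6 is the red one; `is_red` plays the role of the indicator and turns each roll into a $0/1$ outcome:

```python
import random
from collections import Counter

random.seed(2)

RED_FACE = 6  # hypothetical: assume the face showing 6 is the one painted red

def roll_die() -> int:
    """A fair six-sided die: returns 1..6 with equal probability."""
    return random.randint(1, 6)

def is_red(face: int) -> int:
    """Indicator of the event 'the red face came up'."""
    return 1 if face == RED_FACE else 0

rolls = [roll_die() for _ in range(60_000)]
red_outcomes = [is_red(face) for face in rolls]  # the new 0/1 random variable

red_fraction = sum(red_outcomes) / len(rolls)    # should be close to 1/6
print(Counter(red_outcomes))                     # counts of non-red (0) and red (1) outcomes
print(red_fraction)
```

The underlying rolls are unchanged; only the way we read each outcome differs, which is exactly what makes it a new random variable.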

You may also be interested in reading about the Dirac delta, which is used in probability and statistics as a kind of continuous counterpart to the indicator function.

See also: Why 0 for failure and 1 for success in a Bernoulli distribution?